Day 2551 / shredding entire directory recursively

security - How do I recursively shred an entire directory tree? - Unix & Linux Stack Exchange:

find <directory_name> -depth -type f -exec shred -v -n 1 -z -u {} \;

My name is Serhii Hamotskyi, welcome to my personal website!

It was created as a way to organize information about all the things I'm interested in. The main parts are listed on the right.

security - How do I recursively shred an entire directory tree? - Unix & Linux Stack Exchange:

find <directory_name> -depth -type f -exec shred -v -n 1 -z -u {} \;

TIL: Indico - Home, allegedly used by ScaDs and CERN, open sourcer

The effortless open-source tool for event organisation, archival and collaboration

Looks really really nice and professional

(Shown somewhere in examples d but I always have to look for it)

<Header value="xxx" />

<!-- {

"data": {

"index": 4,

}

} -->

Since spaces don’t matter, this is even better for copypasting new versions:

<Header value="xxx" />

<!-- { "data":

{

"index": 4,

}

} -->

Applying stuff to multiple names: comma-separate them 1

EDIT: this doesn’t result in an error but quietly drops annotations and makes them not-editable, TODO bug report!

<Header value="Which text is better?" size="6" />

<Choices ... toName="name_a,name_b">

Choices by radio button instead of checkmarks for single choice: `

Inline choices: layout="inline"

Value is shown in the screen, alias can override that in json output:

<Choice alias="1" value="Completely mismatched tone/content"/> will be 1 in json

`Formatting text with info — couldn’t find anything better than this abomination:

<View style="display: flex;">

<Header value="How well is this advertisement tailored for group '" size="6" />

<Header value="$target_group" size="6" />

<Header value="'?" size="6" />

</View>

Or:

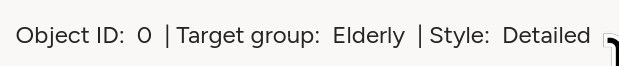

<View style="display: flex;">

<Text name="l_obj_id" value="Object ID:" /> <Text name="t_object_id" value="$object_id"/>

<Text name="l_target_group" value="| Target group:" /> <Text name="t_target_group" value="$target_group"/>

<Text name="l_style" value="| Style:" /> <Text name="sty" value="$text_style"/>

</View>

The <Table> tag generates a table with headers based on dict with key/value pairs.

This compresses it:

<Style>

.ant-table-cell {

padding: 0;

font-size: 0.75rem;

line-height: 1; /* Removes vertical space added by line height */

}

.ant-table-tbody td {

padding: 10px !important;

}

/* And this sets the column widths */

.ant-table-thead th:first-child,

.ant-table-tbody tr td:first-child {

width: 40%;

}

.ant-table-thead th:last-child,

.ant-table-tbody tr td:last-child {

width: 60%;

}

</Style>

The native tag has very few options for config, in most cases rolling our own is a good idea.

This quick undocumented ugly function generates a label-studio-like table structure (except it’s missing missing <thead> that I don’t need) from a key/value dictionary.

def poi_to_html_table(poi: dict[str, str]) -> str:

DIVS_OPEN = '<div class="ant-table ant-table-bordered"><div class="ant-table-container"> <div class="ant-table-content">'

TABLE_OPEN = '<table style="table-layout: auto;">'

TBODY_OPEN = '<tbody class="ant-table-tbody">'

TR_OPEN = '<tr class="ant-table-row">'

TD_OPEN = '<td class="ant-table-cell">'

TABLE_DIVS_CLOSE = "</tbody></table></div></div></div>"

res = f"{DIVS_OPEN}{TABLE_OPEN}{TBODY_OPEN}"

for k, v in poi.items():

res += f"{TR_OPEN}{TD_OPEN}{k}</td>{TD_OPEN}{v}</td></tr>"

res += TABLE_DIVS_CLOSE

return res

TO STYLE THEM, SEE BELOW

inline<HyperText name="poi_table" value="$poi_html" inline="true" />

If you want to generate HTML and apply styles to it, the HyperText should be inline, otherwise it’ll be an iframe. Above, $poi_html is a table that then gets styled to look like native tables through .ant-table-... classes, but the style lives in the template, not in $poi_html.

Very very handy for texts of unknown length, especially inside columns:

<View style="height: 500px; overflow: auto;">

<Text name="text" value="$text" />

</View>

EDIT: max-height for multiple stacked windows is golden!

Don’t ask how or why, but this works as of 1.21.0. I don’t know enough tailwind to say why does the approach from this fiddle with !important fails. Or why does the background of the scrollbar gets set always on chrome, but only on hover for firefox.

.scroll {

scrollbar-color: crimson blue !important; /* scrollbar, background */

scrollbar-width: auto !important; /* Use OS default instead of thin, only Chrome */

}

Related: scrollbar-color - CSS | MDN, Scrollbar Styling with Tailwind and daisyUI - Scott Spence

<View style="display: grid; grid-template: auto/1fr 1fr; column-gap: 1em">`

(or)

<Style>

.threecols {display: grid; grid-template: auto/1fr 1fr 1fr;}

.twocols {display: grid; grid-template: auto/1fr 1fr;}

</Style>

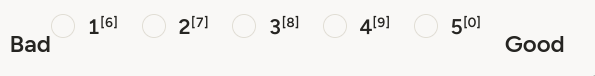

<Choices name="xxxx" choice="single-radio" toName="obj_desc_a" layout="inline">

<View style="display: flex;">

<Header size="6" value="Bad" />

<Choice value="1" />

<Choice value="2" />

<Choice value="3" />

<Choice value="4" />

<Choice value="5" />

<Header size="6" value="Good" />

</View>

</Choices>

(Preserving l/r width)

(Preserving l/r width)

<View style="display: grid; grid-template: auto/300px 300px 300px; place-items: center;">

<Header size="6" value="Bad" />

<Rating name="444" toName="obj_desc_a" maxRating="5" />

<Header size="6" value="Good" />

</View>

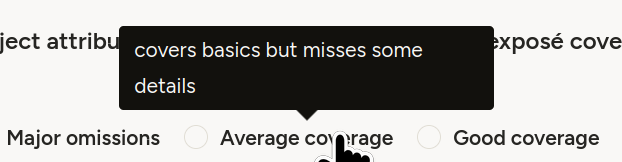

You can make the options short and place additional info in hints!

<Choice alias="3" value="Average coverage" hint="covers basics but misses some details"/>

Seen here: Label Studio — Pairwise Tag to Compare Objects ↩︎

TIL, mentioned by HC&BB: Rclone “is a command-line program to manage files on cloud storage”.

S3, dropbox, onedrive, [next|own]cloud, sftp, synology, and ~70 more storage backends i’ve never heard about.

Previously-ish: 250121-1602 Kubernetes copying files with rsync and kubectl without ssh access

Previously: 231018-1924 Speedtest-cli and friends

TIL about:

I especially like fast.com, I’ll remember the URI forever.

Refs:

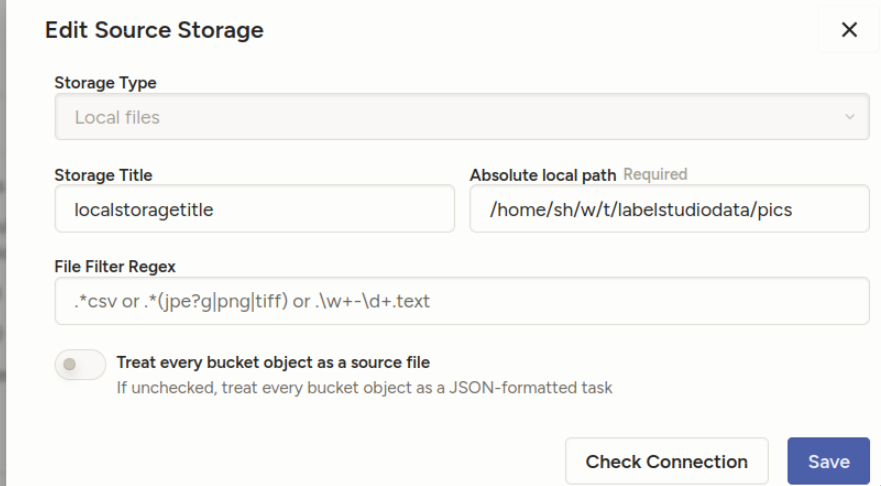

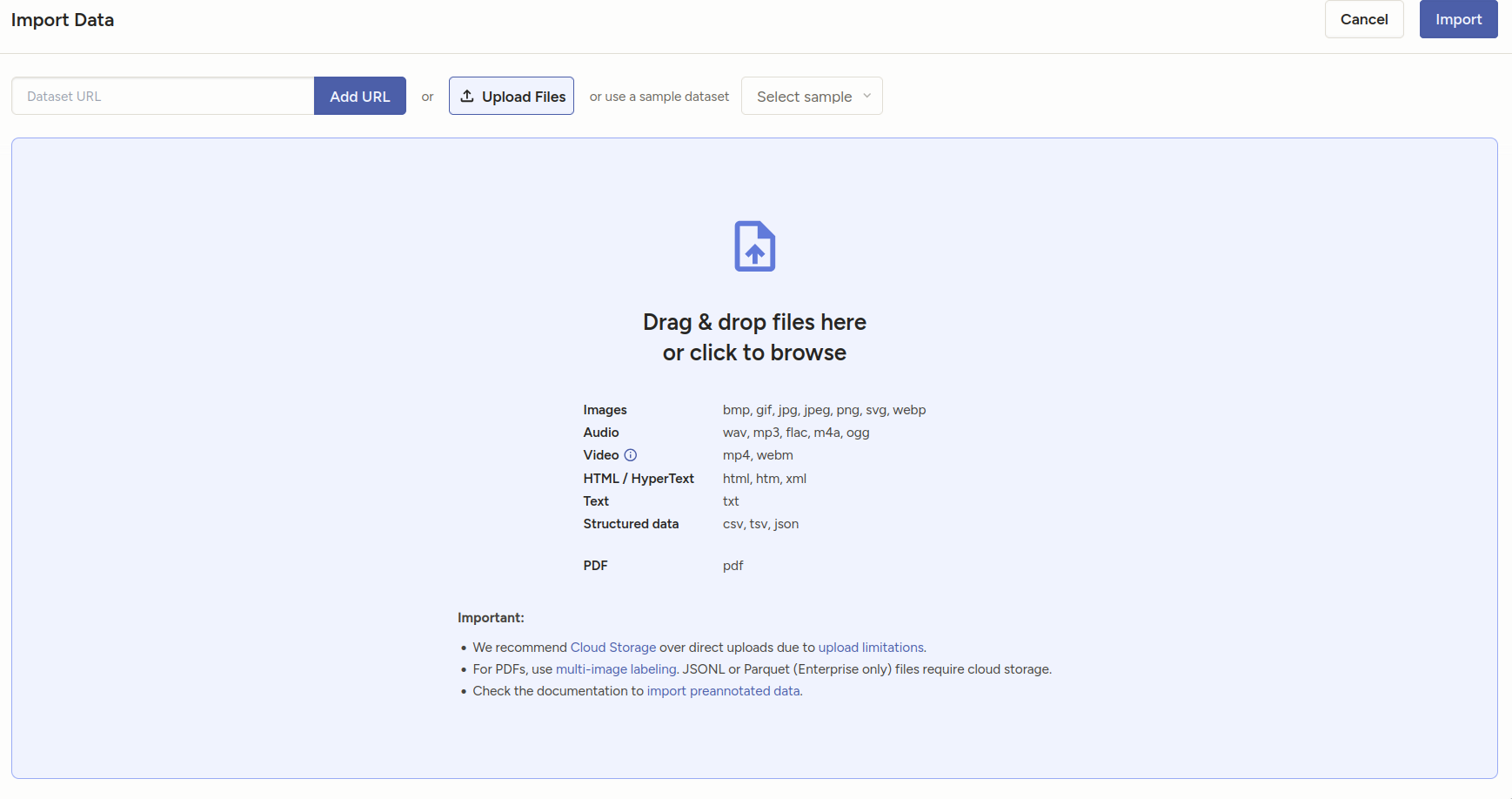

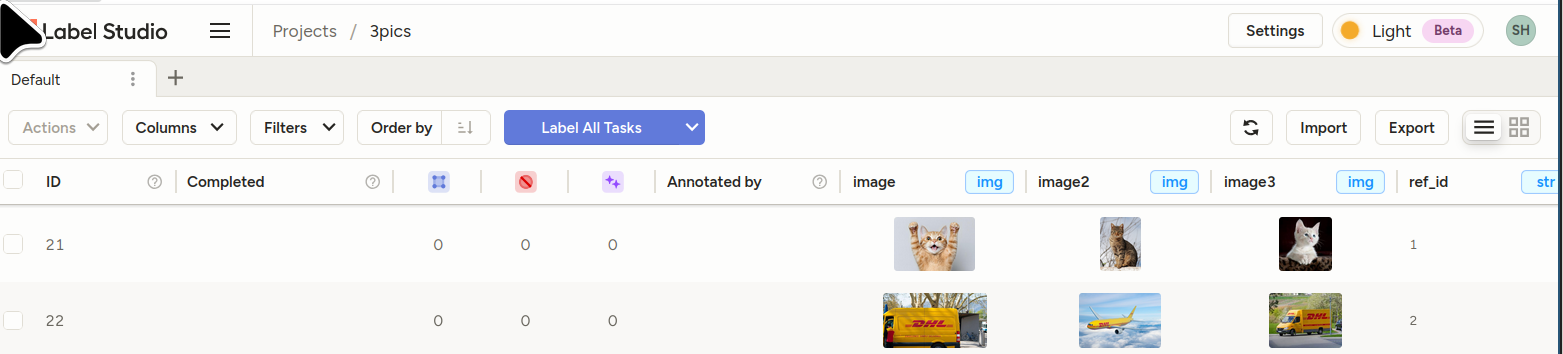

The steps are:

/home/sh/w/t/labelstudiodatapics, making the full path /home/sh/w/t/labelstudiodata/pics[

{

"data": {

"ref_id": 1,

"image": "/data/local-files/?d=pics/cat1.png",

"image2": "/data/local-files/?d=pics/cat2.png",

"image3": "/data/local-files/?d=pics/cat3.png"

}

},

{

"data": {

"ref_id": 2,

"image": "/data/local-files/?d=pics/dhl1.png",

"image2": "/data/local-files/?d=pics/dhl2.png",

"image3": "/data/local-files/?d=pics/dhl3.png"

}

}

]

In the data, the paths are /data/local-files/?d=pics/cat1.png — start with /data/local-files/?d=, then the subdir, then the path to the file itself (here it’s flat: cat3.jpg)

LABEL_STUDIO_LOCAL_FILES_SERVING_ENABLED=true LABEL_STUDIO_LOCAL_FILES_DOCUMENT_ROOT=/home/sh/w/t/labelstudiodata label-studio

LABEL_STUDIO_LOCAL_FILES_DOCUMENT_ROOT should point to your folder, WITHOUT the subfolder (no pics/) , and be absolute.

Create your project as usual, and open its settings. The absolute local path is the SUBFOLDER of the document root:

“Treat every bucket as source” should be unchecked — in the documentation, they describe it differently from the screenshots, but it’s equivalent:

- 8. Import method - select “Tasks” (because you will specify file references inside your JSON task definitions)

“Check connection” should tell you if everything’s OK.

You should see your tasks.

<View> <View style="display: grid; grid-template-columns: 1fr 1fr 1fr; max-height: 300px; width: 900px"> <Image name="image1" value="$image"/>

<Image name="image2" value="$image2"/>

<Image name="image3" value="$image3"/> </View>

<Choices name="choice2" toName="image2">

<Choice value="Adult content"/>

<Choice value="Weapons"/>

<Choice value="Violence"/>

</Choices>

</View>

[Tried it, realized that it’ll replace 80% of my use-cases of jupyter / jq etc., and improve viewing of random csv/json files as well!

(Previously: 250902-1905 Jless as less for jq json data)

$somelist | where ($it in (open somefile.json)) | lengthConfig in ~/.config/nushell/config.nu, editable by config nu, for now:

$env.config.edit_mode = 'vi'

$env.config.buffer_editor = "nvim" # `config nu`

alias vim = nvim

alias v = nvim

alias g = git

alias k = kubectl

alias c = clear

alias l = ls

alias o = ^open

[]

allenai/olmocr: Toolkit for linearizing PDFs for LLM datasets/training

Online demo: https://olmocr.allenai.org/

curl -X 'POST' \

'http://localhost:8001/tokenize' \

-H 'accept: application/json' \

-H 'Content-Type: application/json' \

-d '{

"model": "mistralai/Mistral-Small-24B-Instruct-2501",

"prompt": "my prompt",

"add_special_tokens": true,

"additionalProp1": {}

}'

uv add jupyterlab-vim

uv run jupyter labextension list

uv run jupyter labextension enable jupyterlab_vim

uv run jupyter lab

Оригінал: .:: Phrack Magazine ::.

Контекст: Маніфест хакера — Вікіпедія / Hacker Manifesto - Wikipedia

Існуючий дуже класний переклад, не відкривав, поки не закінчив свій: Маніфест хакера | Hacker’s Manifesto | webKnjaZ: be a LifeHacker

==Phrack Inc.==

Том I, випуск 7, Phайл[0] 3 з 10

=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=

Наступне було написано невдовзі після мого арешту...

\/\Совість хакера/\/

автор:

+++The Mentor+++

8 січня 1986р.

=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=

Сьогодні ще одного спіймали, пишуть у всіх газетах. "Кібер-скандал:

підлітку повідомили про підозру", "Кіберзлочинця затримали після

проникнення в систему банку".

Тупа школота[1], вони всі однакові.

Та чи ви, з вашою трафаретною психологією[2] та знаннями

інформатики зразка першої половини пʼятидесятих[3], коли-небудь дивилися

в душу хакера? [4] Чи вас колись цікавило, що ним рухає[5],

які сили на нього впливали, що його сформувало?

Я хакер, ласкаво прошу у мій світ...

Мій світ почався ще зі школи... Я розумніший за більшість інших

дітей, і дурниці, які нам викладають, мені набридають.

Тупий трієчник[6], вони всі однакові.

Восьмий, девʼятий, десятий, одинадцятий клас[7]. В пʼятнадцятий

раз слухаю, як вчителька пояснює, як скорочувати дроби. Мені все ясно. "Ні,

Вікторія Миколаївна[8], я не написав проміжні кроки, я розвʼязав все усно..."

Тупий підліток. Мабуть списав. Всі вони такі.

Сьогодні я зробив відкриття. Я знайшов компʼютер. Ха, почекай-но, це круто.

Він робить те, що я від нього хочу. І якщо він помиляється, це тому, що

помилився я. А не тому що він мене не любить...

Або відчуває від мене загрозу...

Або вважає мене самозакоханим всезнайком[9]...

Або ненавидить себе, дітей, та педагогіку[10]...

Тупий підліток. Він постійно тільки грає в свої ігри. Всі вони такі...

Потім це відбулось... відчинились двері в світ... несучись телефонною

лінією як героїн венами наркомана, надсилається електронний пульс,

шукається спасіння від ідіотизму навколо...[11] Знаходиться борд[12].

"Це воно... це те, до чого я належу..."

Я з усіма тут знайомий... попри те, що я з ними ніколи не

зустрічався, не розмовляв, і колись можливо більше нічого не чутиму про

них... Я їх всіх знаю...

Тупий підліток. Знову займає телефонну лінію... Вони всі однакові.

Та ще й як — ми всі однакові! [13] Нас годували

дитячими сумішами з ложки, коли ми хотіли стейк... а ті куски мʼяса, які

до нас все ж потрапляли, були вже пережовані і без смаку. Над нами

панували садисти, або нас ігнорували байдужі. Для тих, хто хотіли чомусь

нас навчити, ми були вдячними учнями, але їх було як краплин дощу в

пустелі.

Цей світ зараз наш... світ електрона і комутатора, світ краси

бода[14]. Ми користуємося існуючою послугою не платячи за те, що могло б

бути дуже дешевим, якби ним не завідували жадібні бариги[15], і ви

називаєте нас злочинцями. Ми досліджуємо... і ви називаєте нас

злочинцями. Ми шукаємо знання... і ви називаєте нас злочинцями. Ми

існуємо без кольору шкіри, без національності і без релігійної

нетерпимості... і ви називаєте нас злочинцями. Ви будуєте атомні бомби,

ви ведете війни, ви вбиваєте, обманюєте, і брешете нам, намагаючись

змусити нас повірити, що ви це робите для нашого блага, але злочинці тут — ми.

Так, я злочинець. Мій злочин - моя допитливість. Мій злочин -

оцінювати людей по тому, що вони кажуть і думають, а не по тому, як

вони виглядають. Мій злочин в тому, що я вас перехитрив, і ви мене

ніколи не пробачите за це.

Я хакер, і це мій маніфест. Можливо ви зупините мене як особу, але ви

ніколи не зупините нас всіх... зрештою, ми всі однакові.

Замітки:

Hat tip to:

Головне, щоб слова не були занадто новими: КОМП’ЮТЕР — СЛОВОВЖИВАННЯ | Горох — українські словники:

Компʼютер

Правильніше: верстак

(Хоча можна ще цікавіше: Комп’ютер - Як перекладається слово Комп’ютер українською - Словотвір)

Random:

- you bet your ass we’re all alike: як же складно підбирати такі речі. Умовні embeddings з ML тут були б в тему. “Дай мені щось на кшталт ‘авжеж’ тільки більш emphatical”

09/11/2025, в світі, де це вже можливо, я повернувся до цього тексту.

Змінив наступне:

Дякую ChatGPT за

[0]: Ph-айл => Phайл[5]: what makes him tick: “що є причиною його поведінки” ➔ “що ним рухає”[6]: “тупий відстаючий” ➔ “тупий трієчник”[15] “ненажерливі бариги” ➔ “жадібні бариги”[2]: трафаретна ментальність ➔ психологія

[10]: “shouldn’t be teaching”: таки використав ідеальне “ненавидить себе, дітей та педагогіку” (було: “не любить викладати”)

[11] `невігластва навколо” ➔ “ідіотизму навколо”

[13]: “Та можете не сумніватись, що ми всі однакові…” ➔ “Та ще й як — ми всі однакові!”

(1) Букви on X: “@donikroman Пане, Ви знову з матами, як школота ¯_(ツ)_/¯ Зауваження, до слова, правильне, українською мовою, а не “на українській мові”, це калька з російської. Може б варто почати з такого? https://t.co/LEEEIX9Bwd” / X ↩︎

„підліток“ синоніми, антоніми, відмінки та тлумачення слова ↩︎

ВСЕЗНАЙКО — СЛОВОВЖИВАННЯ зслається на “Мова – не калька: словник української мови”. Частота звідси. ↩︎ ↩︎