Active training and active testing in ML

- [2103.05331] Active Testing: Sample-Efficient Model Evaluation

- <_(@kossenactivetesting2021) “Active Testing: Sample-Efficient Model Evaluation” (2021) / Jannik Kossen, Sebastian Farquhar, Yarin Gal, Tom Rainforth: z / http://arxiv.org/abs/2103.05331 /

10.48550/arXiv.2103.05331_>1 - Introduces active testing

- <_(@kossenactivetesting2021) “Active Testing: Sample-Efficient Model Evaluation” (2021) / Jannik Kossen, Sebastian Farquhar, Yarin Gal, Tom Rainforth: z / http://arxiv.org/abs/2103.05331 /

- [2101.11665] On Statistical Bias In Active Learning: How and When To Fix It the paper describing the estimator for AL in detail, the one that removes bias when sampling

- [2508.09093] Scaling Up Active Testing to Large Language Models

- <_(@berradascalingactive2025) “Scaling Up Active Testing to Large Language Models” (2025) / Gabrielle Berrada, Jannik Kossen, Muhammed Razzak, Freddie Bickford Smith, Yarin Gal, Tom Rainforth: z / http://arxiv.org/abs/2508.09093 /

10.48550/arXiv.2508.09093_> 2 - adapts it to LLMs to LLMs

- <_(@berradascalingactive2025) “Scaling Up Active Testing to Large Language Models” (2025) / Gabrielle Berrada, Jannik Kossen, Muhammed Razzak, Freddie Bickford Smith, Yarin Gal, Tom Rainforth: z / http://arxiv.org/abs/2508.09093 /

OK, so.

Active testing1

-

Active learning: picking the most useful training instances to the model (e.g. because annotation is expensive, and we pick what to annotate)

-

Active testing: pick the most useful subset of testing instances that approximates the score of the model on the full testing set.

-

We can test(=I use label below) only a subset of the full test set, $D_{test}^{observed}$ from $D_{test}$

-

We decide to label only a subset $N>M$ of the test instances, and not all together, but one at a time, because then we can use the information of the already labeled test instances to pick which next one to label

-

It calls these (estimated or not) test scores test risk, $R$ .

Strategies

- Naive: we uniformly sample the full test dataset

- BUT if $M«N$, its variance will be large, so though it’s uniformly/unbiasedly sampled, it won’t necessarily approximate the real score well

- Actively sampling, to reduce the variance of the estimator.

- Actively selecting introduces biases as well if not done right

- e.g. active learning you label hard points to make it informative, but in active testing testing on hard points will inflate the risk

- Actively selecting introduces biases as well if not done right

Active sampling

- sth monte carlo etc. etc.

- TODO grok the math and stats behind all of this.

- follows my understanding that might be wrong

- The [2101.11665] On Statistical Bias In Active Learning: How and When To Fix It paper that introduces $R_{lure}$ is might be a starting point but at first sight it’s math-heavy

- TL;DR pick the most useful points, and get rid of the biases this introduces (e.g. what if they are all complex?) by using smart auto-correcting mechanisms (=‘unbiased estimator’)

- You have some $q(i_m)$ strategy that picks interesting samples, though they might bias the result.

- $q(i_m)$ is actually shorthand for $q(i_m, i_{1:m-1}, D_{test}, D_{train})$ — so we have access to the the datasets and the already aquired test data.

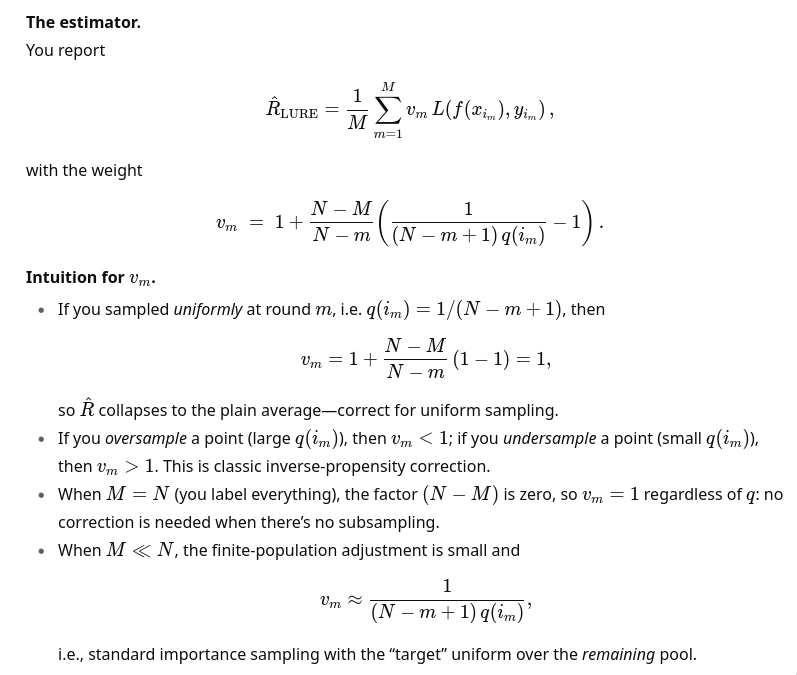

- $R_{LURE}$: An unbiased estimator that auto-corrects the discribution to be closer to the one expected if you iid-uniformly sample the test dataset.

- ChatGPT on the intuition behind this:

- also:

This is the classic promise of importance sampling: move probability mass toward where the integrand is large/volatile, then down-weight appropriately.

- also:

Bottom line: active testing = (i) pick informative test points stochastically with $q$; (ii) compute a weighted Monte Carlo estimate with $v_m$ to remove selection bias; (iii) enjoy lower variance if $q$ is well-chosen.

-

<_(@kossenactivetesting2021) “Active Testing: Sample-Efficient Model Evaluation” (2021) / Jannik Kossen, Sebastian Farquhar, Yarin Gal, Tom Rainforth: z / http://arxiv.org/abs/2103.05331 /

10.48550/arXiv.2103.05331_> ↩︎ ↩︎ -

<_(@berradascalingactive2025) “Scaling Up Active Testing to Large Language Models” (2025) / Gabrielle Berrada, Jannik Kossen, Muhammed Razzak, Freddie Bickford Smith, Yarin Gal, Tom Rainforth: z / http://arxiv.org/abs/2508.09093 /

10.48550/arXiv.2508.09093_> ↩︎