serhii.net

In the middle of the desert you can say anything you want

-

Day 1855 (29 Jan 2024)

Dumping pretty cyrillic UTF YAML and JSON files

TL;DR:

- PyYAML:

allow_unicode=True - JSON:

ensure_ascii=True

Context:

- 231213-1710 Ukrainska Pravda dataset

- 231203-1745 Masterarbeit eval task LMentry-static-UA

- Any and all Ukrainian/cyrillic YAML and JSON files I dump

My favourite params for dumping both, esp. if Ukrainian/cyrillic/UTF is involved

All of the below are params one can pass to

to_[json|yaml][_file]()of Wizard Mixin Classes — Dataclass Wizard 0.22.3 documentation(Py)YAML

https://pyyaml.org/wiki/PyYAMLDocumentation

params = dict( allow_unicode=True, # write Ukrainian as Ukrainian default_flow_style=False, sort_keys=False, # so template is first in the YAML for readability ) self.to_yaml_file(yaml_target, **params)default_flow_styleprefers lists like this (from docu):>>> print yaml.dump(yaml.load(document), default_flow_style=False) a: 1 b: c: 3 d: 4JSON

to_json(indent=4, ensure_ascii=False)The difference being:

(Pdb++) created_tasks[0][0].to_json() '{"question": "\\u042f\\u043a\\u0435 \\u0441\\u043b\\u043e\\u0432\\u043e \\u043a\\u043e\\u0440\\u043e\\u0442\\u0448\\u0435: \\"\\u043a\\u0456\\u0442\\"\\u0447\\u0438 \\"\\u0441\\u043e\\u0431\\u0430\\u043a\\u0430\\"?", "correctAnswer": "\\u043a\\u0456\\u0442", "templateUuid": "1da85d6e7cf5440cba54e3a9b548a037", "taskInstanceUuid": "6ac71cd524474684abfec0cfa3ef5e1e", "additionalMetadata": {"kind": "less", "template_n": 2, "t1": "\\u043a\\u0456\\u0442","t2": "\\u0441\\u043e\\u0431\\u0430\\u043a\\u0430", "reversed": false}}' (Pdb++) created_tasks[0][0].to_json(ensure_ascii=False) '{"question": "Яке слово коротше: \\"кіт\\" чи \\"собака\\"?", "correctAnswer": "кіт", "templateUuid": "1da85d6e7cf5440cba54e3a9b548a037", "taskInstanceUuid": "6ac71cd524474684abfec0cfa3ef5e1e", "additionalMetadata": {"kind": "less", "template_n": 2, "t1": "кіт", "t2": "собака", "reversed": false}}' (Pdb++)

- PyYAML:

-

Day 1854 (28 Jan 2024)

What I learned about Google Sync of apps and F-Droid

Sordid backstory

In the context of 240127-2101 Checklist for backing up an android phone, I wanted to back up my TrackAndGraph data, for which I a) manually created a file export, and b) just in case created a backup through Google Drive/Sync/One/whatever

I then forgot to move the backup file :( but fear not, instead of a clean start I can then use the Google Drive backup of all apps and that one specifically — but it was missing.

It was present in the google backup info as seen in the google account / devices / backups interface, but absent in the phone recovery thing during set up.

Installed it through Google Play again, still nothing, did a new phone factory reset, still nothing.

Googled how to access the information from device backups through google drive w/o a device: you can’t.

Was sad about losing 6month of quantified self data, thought about how to do it better (sell my soul to Google and let it sync things from the beginning?) and gave up

Then I installed the excellent Sentien Launcher through F-droid (was not part of the back up as well, but I didn’t care) and noticed it had my old favourites.

Aha. Aha.

Okay. I see.

TL;DR

Android 13, Samsung phone.

- To use a google drive/one/… backup after a factory reset, after the reset click “I don’t have my device”, it’ll ask you to log in AGAIN to your google account

- it will require 2FA from the phone you don’t have, I had to use one of the recovery codes because it didn’t allow sending an SMS

- Then you can enable some or all of the apps, but the list will contain only the ones you installed from Google Play. Which will be a subset of the list of ALL apps that you see through the web interface.

- To recover info about some apps you got from F-Droid, YOU HAVE TO REINSTALL THE APP FROM F-DROID.

- TrackAndGraph from Google Play didn’t sync, TrackAndGraph from F-Droid had all my data!

- To the best of my understanding, you don’t have any control about the backups from non-google-play apps, they will get automatically the info from your old phone/sync..

- Not all apps from F-Droid will be part of the backup, some forum I can’t find said that many of the apps there opt out from this explicitly because they consider the google drive backup thing inherently insecure.

Uninstalling garbage from my android phone

Removing garbage through ADB

I’ll be more minimalistic though

> adb shell pm list packages | ag "(lazada|faceb|zalo)" package:com.facebook.appmanager package:com.facebook.system package:com.lazada.android package:com.facebook.services package:com.facebook.katanaadb shell pm uninstall -k --user 0 com.facebook.appmanager adb shell pm uninstall -k --user 0 com.facebook.system adb shell pm uninstall -k --user 0 com.lazada.android adb shell pm uninstall -k --user 0 com.facebook.services adb shell pm uninstall -k --user 0 com.facebook.katana adb shell pm uninstall -k --user 0 com.samsung.android.bixby.agent adb shell pm uninstall -k --user 0 com.samsung.android.bixby.wakeup adb shell pm uninstall -k --user 0 com.samsung.android.bixbyvision.frameworkRemoving garbage with Canta+Shizuku (better!)

First heard about them here: (185) Samsung’s privacy policy for Oct 1st is crazy. : Android

- Shizuku (Google Play + ADB but not F-Droid) allows other apps to do root-y things

- Enabling either through

adb shell sh /sdcard/Android/data/moe.shizuku.privileged.api/start.sh - or (better!) through Wifi debugging (no computer required)

- Enabling either through

- Canta w/ the help of Shizuku helps deleting apps quickly, including undeletable ones

- Has a really neat “Recommended” list of apps that are safe to delete and useless

- I do need some of them, so one would need to manually go through it, but I deleted 73 apps after a clean install

- Has a really neat “Recommended” list of apps that are safe to delete and useless

- To use a google drive/one/… backup after a factory reset, after the reset click “I don’t have my device”, it’ll ask you to log in AGAIN to your google account

-

Day 1853 (27 Jan 2024)

Pre-factory-reset checklist for my Android phone

Related:

-

220904-1605 Setting up again Nextcloud, dav, freshRSS sync etc. for Android phone

-

from old DTB: Day 051: Phone ADB full backup - serhii.net:

adb backup -apk -shared -all -f backup-file.adb/adb restore backup-file.adb- (Generally,

adb devices -l,adb shell— after enabling USB debugging in Settings -> Dev.)

-

Also:

adb pull /storage/self/primary/Music .to copy files from device to computer Elsewhere:

-

Cool apps I love and want to remember about (see 220124-0054 List of good things):

- Sentien launcher

- Tiny Weather Forecast Germany

- RedReader

- PodcastAddict

- KeyMapper

- One hand operation+

- Flight pass

- Tasker

- Dict.cc

- Giant stopwatch

- My Intercom

- Free now

- Seek (iNat)

- Stellarium

- HP Print Service Plugin

- öffi

- Todo agenda 12 widget

General

- Any time I have to manually repeat some of the steps, think about a sustainable backup process

- If resetting my phone in controlled circumstances is such a large problem for me - what if I lose it? What am I doing wrong?

- (Or mentally celebrate that I’m awesome if most of what I’m backing up is garbage I can go without)

- Where am I copying my phone files to? Not some random directory on my computer I created just for this and will forget about?

Checklist

- Syncs

- Optionally start Google/Nextcloud/../whatever syncs

- REMEMBER THAT F-DROID APPS MAY NOT BE BACKED UP BY GOOGLE!

- You may get the data back if you reinstall the app after the reset from the same source as the original app, see 240128-1806 What I learned about Google Sync of apps and F-Droid

- REMEMBER THAT F-DROID APPS MAY NOT BE BACKED UP BY GOOGLE!

- Any folder explicitly not-synced by google photos?

- Sync syncable apps like Fitbit, Bitwarden etc. one last time as I touch them

- Optionally start Google/Nextcloud/../whatever syncs

- Do I remember my

- Fastmail user/pass

- Google acc user/pass

- 2FA on which phone number?

- Have recovery codes ready, because it may not allow SMS etc. for 2FA to log in to Google right after phone reset1

- Do I have all the papers for:

- Banking apps (PhotoTAN etc.)

- Any 2FA/Authenticator app{s?

- Codes for things I may need to access if sth fails?

- Do I have the SIM card for any phone numbers I may need for log ins?

- Backup

- Bitwarden sync?

- TrackAndGraph

- NewPipe

- Settings -> Content -> Export database

NewPipeData-xxx.zip

- OSMAnd: Backup to file

- My Places includes tracks

- Will be an

Export_xxx.osffile

- PodcastAddict

- everything:

PodcastAddict_xxx.backup; OPML exists

- everything:

- FBReader: Settings -> Synchronization -> Export (`FBRReader_Settings_xxx.json)

- Browsers, all

- Open tabs? (bookmark?) - Brave: Select one tab -> select all -> bookmark all tabs

- Bookmarks

- Brave:

bookmarks.html

- Brave:

- Passwords

- Manually go through and save in the real password manager any not present

- Browsing history?

- Downloads if they are in a special location?

- I think most end up in Internal_storage/Download anyway

- Obsidian

- All vaults are synced

- I remember the NAMES and passwords for all of them

- Local-first apps

- KeyMapper:

mappings_xxx.zip - VPN account number

- KeyMapper:

- Files

- Photos in Google Photos

- FBReader books:

Internal storage/Books - Recorder apps (may be multiple installed) recordings

- Internal_storage/Recordings, Music/Recordings

- Phone call recordings

- Contacts

- Does DAVx still sync to Fastmail? (Check a known-bad number)

- (Contacts->Contact->Storage locations-)

- If yes - do I know the settings for the sync?

- Does DAVx still sync to Fastmail? (Check a known-bad number)

- Just in case

- SMS?

- Call logs?

- Any exotic settings I love that I will forget about (220124-0054 List of good things)

- Misc

- Do I have any random phone background etc. I might want as files?

- Breathe and think about everything one last time

- ESPECIALLY WHETHER YOU ACTUALLY SAVED ALL THE BACKUP FILES ELSEWHERE, ALL, BECAUSE I LITERALLY MISSED UPLOADING TRACKANDGRAPH WHEN WRITING THIS CHECKLIST AND ALMOST LOST 6 MONTH OF DATA GODDAMMIT

- Fire.

Post–factory reset checklist (TODO)

- Sync settings

- Google Photos

- Explicitly include Whatsapp etc. to sync

- Google Photos

- 240128-0044 Uninstalling garbage from my android phone Shizuku+Canta are awesome

- Apps

- OSM (free outside google play)

- re-integrate tracks

- re-download useful maps

- Deutsche Bahn!

- Add all monthly tickets and cards!

- DAVx set up sync to Fastmail for calendars and contacts

- gboard instead of default keyboard

- OSM (free outside google play)

- Settings

- Disable animations etc.

- Privacy2

- Use Security and privacy settings on Galaxy phones and tablets

- Stop Google tracking

- Stop Samsung tracking if applicable.

- Allow side-by-side view for apps not supporting it in settings->labs

-

(because “more secure options are are available”) ↩︎

-

Relevant (185) Samsung’s privacy policy for Oct 1st is crazy. : Android ↩︎

-

-

Day 1848 (22 Jan 2024)

UA-CBT story generation Masterarbeit notes

Instead of doing graphs for 231024-1704 Master thesis task CBT, went with generating prompts for stories.

General

If you want a story involving a fox and a raven, the story will contain cheese — so need to inject randomness.

Models

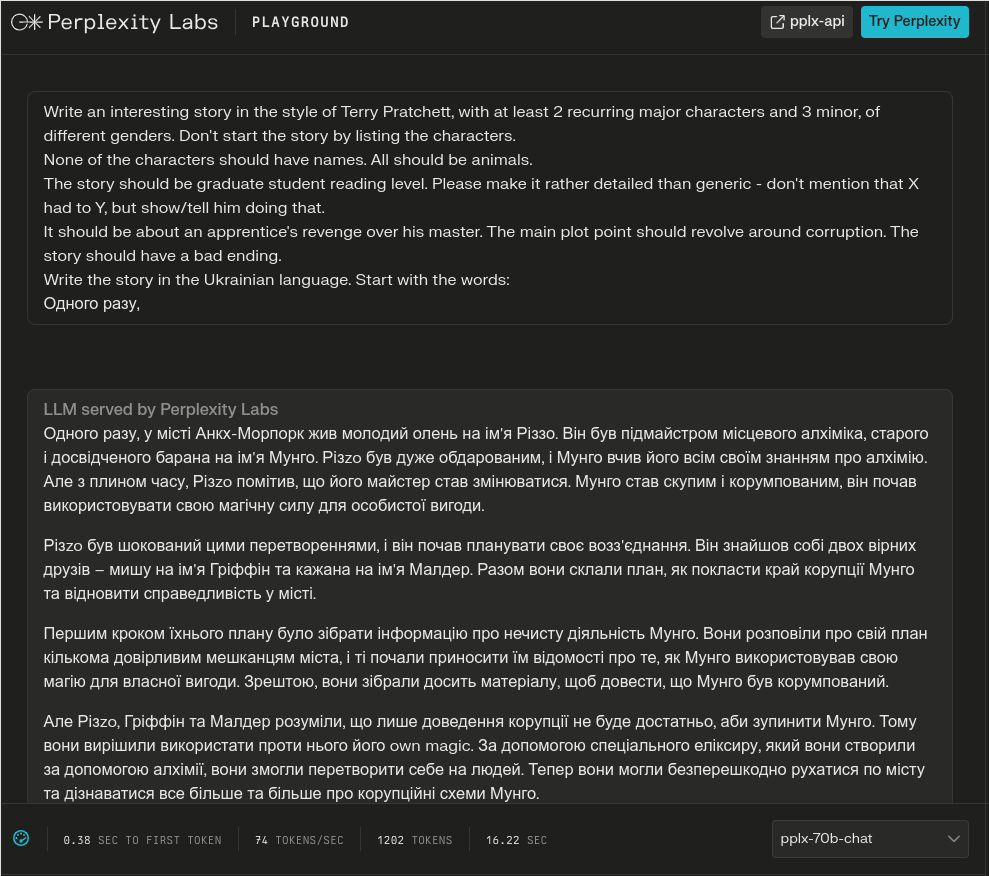

Prompt used:

Write an interesting story in the style of Terry Pratchett, with at least 2 recurring major characters and 3 minor, of different genders. Don’t start the story by listing the characters. None of the characters should have names. All should be animals. The story should be graduate student reading level. Please make it rather detailed than generic - don’t mention that X had to Y, but show/tell him doing that. It should be about an apprentice’s revenge over his master. The main plot point should revolve around corruption. The story should have a bad ending. Write the story in the Ukrainian language. Start with the words: Одного разу,

Gpt

4

..is really good if you tell it what it wants. In Ukr as well.

The info that story should be in Ukrainian should be at the end, or “start the story with”.

3

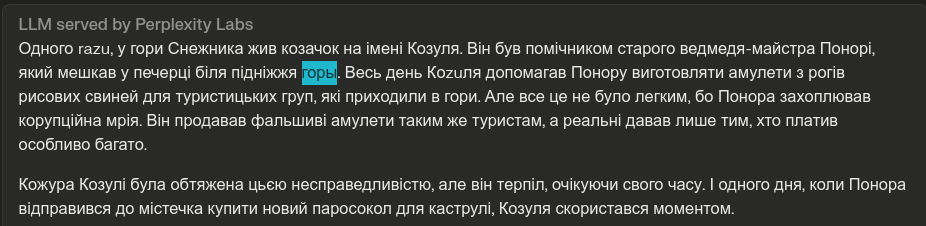

- Adds Russian phrasings etc. at the end, and is kinda literal in its understanding.

- sometimes grammatically wrong

Одного разу, у містечку Анімалопіліс, де жили різні тварини, трапилася ця історія. В місті панувала глибока корупція, але це не виглядало так просто, як здається. У містечку жили два головні персонажі: Майстер Сова і Лицар Заєць. Також були три менш важливі персонажі: Лисичка, Ведмідь та Жаба. … Але план зійшов на нет, коли виявилось, що Майстер Сова дізнався про зраду. Він впіймав Лицаря Зайця, коли він намагався передати докази Лисичці, Ведмедю та Жабі. Майстер Сова вирішив застосувати свою владу та покарати Лицаря Зайця.

Зараз Лицар Заєць перебуває у в’язниці, а корупція в Анімалопілісі продовжує рости. Лисичка, Ведмідь та Жаба вже не намагаються боротися з нею, а мешканці міста стали байдужими до цього проблеми. Так завершується ця історія, де корупція перемогла, а справедливість поступилася владі та грошам.

meister Fuchs

Codellama-34b-instruct:

-

-

primitive but in Ukrainian

-

here’s a list of friends of the fox Lisa (=Russian word for fox written in Ukrainian letters) that will help her with her revenge about the wolf Wolf:

- a rabbit named Rabbit

- an otter named Otter

- a frog named Frog

-

mixtral-7b-instruct

- My God

-

- not the highlighted word, basically all of it

-

Fazit

- gpt4 is the best, others are bad

- uninteresting and primitive in the best case

- most ignore “should not have names”

- most add non-Ukr language

- gpt4 is the best, others are bad

It would be interesting to parametrize/analyze:

- by which LM I used to generate it

- by reading level

- comparing with real stories and stories made to look like real ones

Humanities

Looking for Ukrainian tales as controls found this:

When folklore began to be intensively collected, eastern Ukraine was part of the Russian Empire and western Ukraine was part of Austro-Hungary. In eastern Ukraine, activities that might encourage Ukrainian nationalist feelings were banned, but folklore was not. Russians saw Ukraine as a backward, border place: Little Russia, as Ukraine was so often called. They also saw folklore as ignorant, country literature, appropriate to their perception of Ukraine. Russians felt that the collection of Ukrainian folklore, by perpetuating the image of Ukrainian backwardness, would foster the subjugation of Ukraine. Therefore, they permitted the extensive scholarly activity from which we draw so much of our information today. Ironically, when Ukrainian folklore was published, it was often published not as Ukrainian material, but as a subdivision of Russian folklore. Thus Aleksandr Afanas’ev’s famous collection, Russian Folk Tales, is not strictly a collection of Russian tales at all, but one that includes Ukrainian and Belarusian tales alongside the Russian ones. Because Ukraine was labeled Little Russia and its language was considered a distant dialect of Russian, its folklore was seen as subsumable under Russian folklore. Russia supposedly consisted of three parts: Great Russia, what we call Russia today; Little Russia, or Ukraine; and White Russia, what we now call Belarus. The latter two could beand often wereincluded under Great Russia. Some of the material drawn on here comes from books that nominally contain Russian folktales or Russian legends. We know that they are actually Ukrainian because we can easily distinguish the Ukrainian language from Russian. Sometimes Ukrainian tales appear in Russian translation to make them more accessible to a Russian reading public. In these instances we can discern their Ukrainian origin if the place where a tale or legend was collected is given in the index or the notes. 1

This feels relevant as well: The Politics of innocence: Soviet and Post-Soviet Animation on Folklore topics | Journal of American Folklore | Scholarly Publishing Collective

OpenAI

- So, I found out that gpt4 is the only good option

- Langchain can calculate prices: Tracking token usage | 🦜️🔗 Langchain

Tokens Used: 3349 Prompt Tokens: 300 Completion Tokens: 3049 Successful Requests: 2 Total Cost (USD): $0.09447So it’s about 0.05 per generated story? Somehow way more than I expected.

~300 stories (3 instances from each) would be around 15€

I mean I can happily generate around 100 manually per day from the ChatGPT interface. And I can immediately proofread it as I go and while a different story is being generated. (I can also manually fix gpt3 stories generated for 1/10th of the price.)

I guess not that much more of a workload. And most importantly - it would give me a better insight about possible issues with the stories, so I can change the prompts quickly, instead of generating 300 ‘bad’ ones.

I need to think of a workflow to (grammatically) correct these stories. I assume writing each story to a file named after the row, manually correcting it, and then automatically adding to the new column?

(Either way, having generated 10 stories for 40 cents, I’ll analyze them at home and think about it all.)

It boils down to how many training instances can I get from a story — tomorrow I’ll experiment with it and we’ll see.

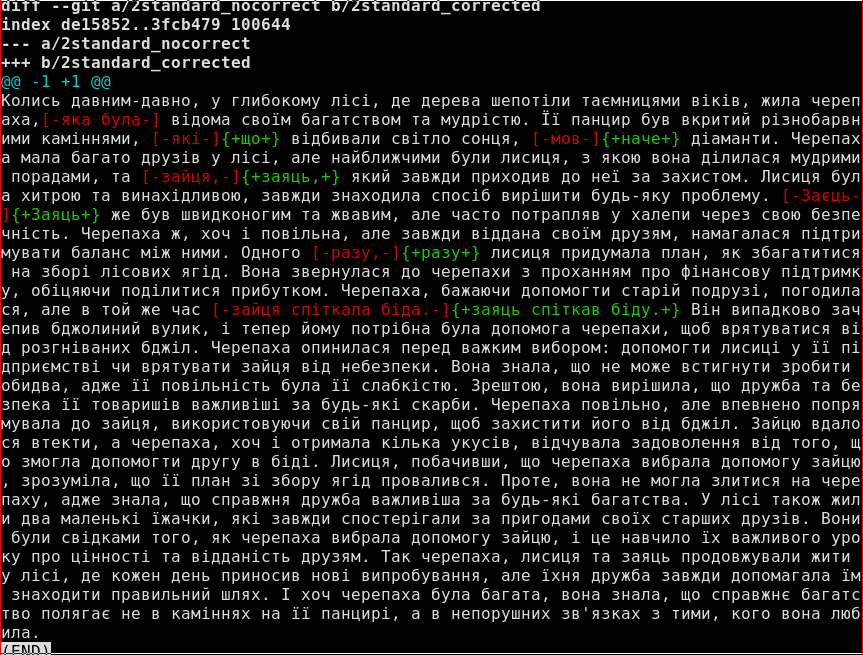

Stories review

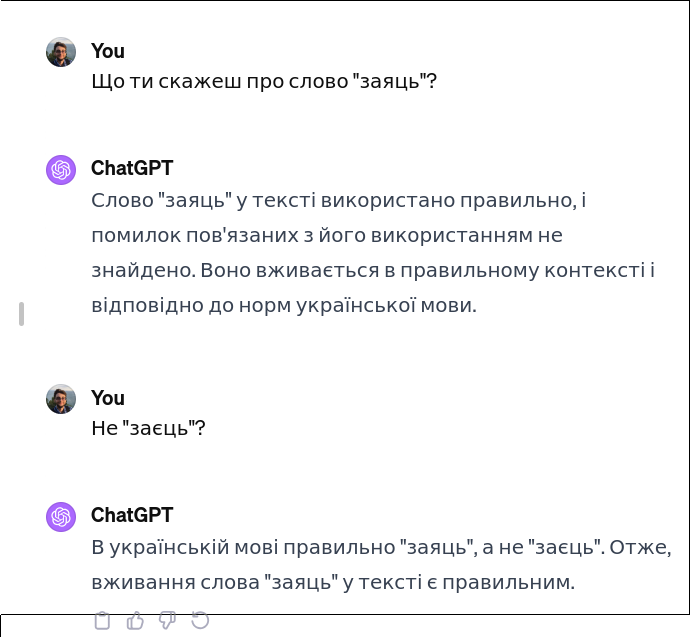

The stories contain errors but ChatGPT can fix them! But manual checking is heavily needed, and, well, this will also be part of the Masterarbeit.

The fixes sometimes are really good and sometimes not:

I tried to experiment with telling it to avoid errors and Russian, with inconclusive results. I won’t add this to the prompt.

Колись давним-давно, у лісі, де дерева шепотіли таємницями, а квіти вигравали у вічному танці з вітром, жила духмяна метелик.

(and then goes on to use the feminine gender for it throughout the entire tale)

On second thought, this could be better:

All should be animals. None of the characters should have names, but should be referred to by pronouns and the capitalized name of their species.I can use the capitalized nouns as keys, and then “до мудрого Сови” doesn’t feel awkward?..

This might be even better:

None of the characters should have names: they should be referred to by the capitalized name of their species (and pronouns), and their gender should be the same as that name of their species.

The story should be about an owl helping their mentor, a frog, with an embarassing problem. The story should be in the Ukrainian language.

And also remove the bit about different genders, or same gender, just let it be.

Yes, let this be the prompt

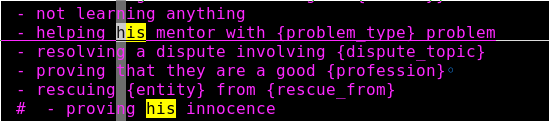

v2v3. Fixed the genders in the options, removed the genders limit in the prompt.None of the characters should have names: they should be referred to by the name of their species, and their gender should be the same as that name of their species. {ALL_SHOULD_BE_ANIMAL

Takes about 4 cents and 140 seconds per story:

1%|▌ | 4/300 [11:39<14:23:02, 174.94s/it] INFO:__main__:Total price for the session: 0.22959999999999997 (5 stories).More stories bits

“Кішка обіцяла довести, що вона гідний син” that’s on me.

Removed gendered “son”.

Через деякий час до верблюда прийшла газель, яка просила допомоги. Її стадо зазнало нападу від лева, і вона шукала поради, як уникнути подібних інцидентів у майбутньому. Верблюд порадив газелі знайти нові пасовища, де леви не полюють, і навчити стадо бути більш обережними та уважними.

-

метелик відчула непереборне бажання знайти найсолодший квітка в лісі

- Метелик/бабочка, квітка/цветок. Both errors Russian-influenced.

Tokenization and length

Ця історія відбулась у місті, де вулиці були вимощені старою бруківкою, а будинки були ніби з казок. Миша, зі своїм сірим хутром таблискучими очима, була відома серед мешканців міста своєю ненаситною жадібністю. Вона не просто збирала зернятка та крихти, що їй вдавалося знайти, але й намагалася відібрати їжу у інших. This has 264 tokens for bert-base-uncased, 106 for bert-base-multilingual-uncased.

import transformers from transformers import AutoTokenizer tokenizer = AutoTokenizer.from_pretrained("bert-base-uncased") tokenizer.tokenize("s")A ’typical’ longer story is 901 multilinugal tokens. Damn. (Or 709 for KoichiYasuoka/roberta-base-ukrainian · Hugging Face)

Practically this means shorter stories? Cutting bits at the end? Separating them? Using tokens instead of sentences when creating tasks?

… The story should have an unhappy ending. It should be no more than 300 words long.

The story should be about a cunning camel not learning anything.

Колись давним-давно, в піщаних просторах пустелі, жив хитрий верблюд. Він був відомий своєю вмінням уникати праці, перекладаючи свої обов'язки на менш кмітливих сусідів - невеликого єнота та серйозного орла. Вони терпеливо виконували важку роботу, в той час як верблюд ласував найсолодшими пагонами. Одного дня, коли вода в оазі на межі висихання, верблюд вирішив, що єнот і орел повинні відправитись у небезпечну подорож за новим джерелом. "Тільки ви маєте кмітливість і силу знайти воду," - лукаво мовив верблюд. Єнот і орел, виснажені його маніпуляціями, нарешті усвідомили хитрість верблюда і вирішили діяти спільно. Вони пішли, обіцяючи верблюду привести воду, але насправді вони планували знайти нову оазу лише для себе. Залишившись на самоті, верблюд швидко зрозумів, що його власна лінь і хитрість привели до катастрофи. Орел і єнот знайшли нове місце, а верблюд, не здатний самостійно вижити, був змушений мандрувати пустелею у пошуках води і допомоги. Але пустеля була невблаганною, і верблюд, нарешті, зрозумів, що хитрість без мудрості і співпраці - це шлях до самотності та відчаю. Саме ця думка була його останньою, перш ніж пустеля поглинула його.175 words, 298 tokens for roberta-base-ukrainian, 416 for bert-base-multilingual-uncased. 10 sentences. I think I’ll add this to the template v4.

Back to CBT task creation

Problem: animacy detection is shaky at best:

(Pdb++) for a in matches: print(a, a[0].morph.get("Animacy")) верблюду ['Inan'] воду ['Inan'] оазу ['Inan'] самоті ['Inan'] верблюд ['Anim'](Pdb++) for w in doc: print(w, w.morph.get("Animacy")[0]) if w.morph.get("Animacy")==["Anim"] else None верблюд Anim кмітливих Anim сусідів Anim невеликого Anim єнота Anim серйозного Anim орла Anim верблюд Anim верблюд Anim орел Anim ви Anim верблюд Anim Єнот Anim орел Anim верблюда Anim верблюд Anim Орел Anim верблюд Anim верблюд AnimOK, so anim has a higher precision than recall. And adj can also be animate, which is logical!

I think I can handle the errors.

More issues:

- Миша can be parsed as a PROPN w/

t.morph Animacy=Anim|Case=Nom|Gender=Fem|NameType=Giv|Number=Sing

(Pdb++) tt = [t for t in doc if t.pos_ == PROPN] (Pdb++) tt [Миша, Собака, Миша, Кіт, Мишею, Ластівка, Ластівка, Мишу, Миша, Ластівка, Миша, Кіт, Миша, Миша, Миша, Миші, Мишу, Ластівка, Миші, Миші, Миша]damn. OK, so propn happens because capitalization only? Wow.

- I can replace pronouns with nouns!

- but I shouldn’t for a number of reasons.

- Main blocker: genders are still a hell of a clue.

- maybe asking all animals to be of the same gender is a good idea :(

Onwards

- Template changes:

- Made it make all animals involved of the same gender

- Doesn’t work always though

- limited the stories to 300 words, so fewer adjectives and more content.

- Made it make all animals involved of the same gender

- Allowed more flexible choice of context/question spans by either -1’ing for “whatever is left” or by providing a ratio of context/question span lengths.

- Limited the given options to ones with the correct gender

- TODO: separate make-options-agreed function to clean up the main loop

- manually fix things like “миша”.lemma_ == “миш” by a separate function maybe.

- done

Next up:

ERROR:ua_cbt:Верблюд же, відчуваючи полегшення, що зміг уникнути конфлікту, повернувся до своєї тіні під пальмою, де продовжив роздумувати про важливість рівноваги та справедливості у світі, де кожен шукає своє місце під сонцем. пальмою -> ['пустелею', 'водити', 'стороною', 'історією']Fixed.

Fixed вОди

Верблюдиця та шакал опинилися наодинці у безкрайній пустелі, позбавлені підтримки та провізії. Верблюдиця -> ['Верблюдиця', 'Люда', 'Люди']Fixed Люда 1 and 2.

cbt · Datasets at Hugging Face

Google Bard

Is quite good at generating stories if given an Ukrainian prompt!

Has trouble following the bits about number of characters, but the grammar is much better. Though can stop randomly.

https://g.co/bard/share/b410fb1181be

-

The Magic Egg and Other Tales from Ukraine. Retold by Barbara J. Suwyn; drawings by author; edited and with an introduction by Natalie O. Kononenko., found in Ukrainian fairy tale - Wikipedia ↩︎

-

Day 1847 (21 Jan 2024)

I am not forced to do dict.items() all the time

I like to do

what: some_dict() for k,v in what.items(): #...But

for k in what: # do_sth(k, what[k])is much more readable sometimes, and one less variable to name. I should do it more often.

-

Day 1844 (18 Jan 2024)

RU interference masterarbeit task embeddings mapping

Goal: find identical words with diff embeddings in RU and UA, use that to generate examples.

- Alignment

- models.translation_matrix – Translation Matrix model — gensim was my initial idea

- Embeddings

FastText

Link broken but I think I found the download page for the vectors

Their blog is also down but they link the howto from the archive Aligning vector representations – Sam’s ML Blog

Download: fastText/docs/crawl-vectors.md at master · facebookresearch/fastText

axel https://dl.fbaipublicfiles.com/fasttext/vectors-crawl/cc.uk.300.bin.gz axel https://dl.fbaipublicfiles.com/fasttext/vectors-crawl/cc.ru.300.bin.gzIt’s taking a while.

EDIT: Ah damn, had to be the text ones, not bin. :( starting again

EDIT2: THIS is the place: fastText/docs/pretrained-vectors.md at master · facebookresearch/fastText

https://dl.fbaipublicfiles.com/fasttext/vectors-wiki/wiki.uk.vec https://dl.fbaipublicfiles.com/fasttext/vectors-wiki/wiki.ru.vecUKR has 900k lines, RUS has 1.8M — damn, it’s not going to be easy.

What do I do next, assuming this works?

Other options

- Pretrained Models — Sentence-Transformers documentation for sentences

- I like the bit about measuring semantic textual similarity between langs

- NLPL word embeddings repository

- includes models with smaller vector sizes, but I’ll have to align them myself

Next steps

Assuming I found out that RU-кит is far in the embedding space from UKR-кіт, what do I do next?

How do I test for false friends?

Maybe these papers about Surzhyk might come in handy now, especially <_(@Sira2019) “Towards an automatic recognition of mixed languages: The Ukrainian-Russian hybrid language Surzhyk” (2019) / Nataliya Sira, Giorgio Maria Di Nunzio, Viviana Nosilia: z / http://arxiv.org/abs/1912.08582 / _>.

- Existing research

- false friends nlp machine learning - Google Scholar damn, apparently everyone wrote papers about identifying false friends

- But not for RU-UA it seems!

- <_(@inkpen2005automatic) “Automatic identification of cognates and false friends in French and English” (2005) / Diana Inkpen, Oana Frunza, Grzegorz Kondrak: z / / _> mentions different types of cognates, false friends being only one of them

- PARTIAL COGNATES where they have the same meaning in some but not all contexts!

- but that paper doesn’t identify them

- Recherche uO Research: Automatic identification of cognates, false friends, and partial cognates is a master’s thesis about that from 2005

- Automated identification of borrowings in multilingual wordlists :: MPG.PuRe just as interesting! 2021

- partial cognates automated identification - Google Scholar is a better search string for more interesting papers

- PARTIAL COGNATES where they have the same meaning in some but not all contexts!

- false friends nlp machine learning - Google Scholar damn, apparently everyone wrote papers about identifying false friends

- Generating examples

- GPT4 can generate sentences using a specific word

- I can look for specific sentences in corpora, but I’d have the usual google problem

- I can use pymorphy3 etc. as usual to make it more interesting, BUT the issue with picking the correct parsing stands

Back to python

Took infinite time & then got killed by Linux.

from fasttext import FastVector # ru_dictionary = FastVector(vector_file='wiki.ru.vec') ru_dictionary = FastVector(vector_file='/home/sh/uuni/master/code/ru_interference/DATA/wiki.ru.vec') uk_dictionary = FastVector(vector_file='/home/sh/uuni/master/code/ru_interference/DATA/wiki.uk.vec') uk_dictionary.apply_transform('alignment_matrices/uk.txt') ru_dictionary.apply_transform('alignment_matrices/ru.txt') print(FastVector.cosine_similarity(ua_dictionary["кіт"], ru_dictionary["кот"]))Gensim it is.

To load:

from gensim.models import KeyedVectors from gensim.test.utils import datapath ru_dictionary = 'DATA/small/wiki.ru.vec' uk_dictionary = 'DATA/small/wiki.uk.vec' model_ru = KeyedVectors.load_word2vec_format(datapath(ru_dictionary)) model_uk = KeyedVectors.load_word2vec_format(datapath(uk_dictionary))Did

ru_model.save(...)and then I can load it as>>> KeyedVectors.load("ru_interference/src/ru-model-save")Which is faster — shouldn’t have used the text format, but that’s on me.

from gensim.models import TranslationMatrix tm = TranslationMatrix(model_ru,model_uk, word_pairs) (Pdb++) r = tm2.translate(ukrainian_words,topn=3) (Pdb++) pp(r) OrderedDict([('сонце', ['завишня', 'скорбна', 'вишня']), ('квітка', ['вишня', 'груша', 'вишнях']), ('місяць', ['любить…»', 'гадаю…»', 'помилуй']), ('дерево', ['яблуко', '„яблуко', 'яблуку']), ('вода', ['вода', 'риба', 'каламутна']), ('птах', ['короваю', 'коровай', 'корова']), ('книга', ['читати', 'читати»', 'їсти']), ('синій', ['вишнях', 'зморшках', 'плакуча'])])OK, then definitely more words would be needed for the translation.

Either way I don’t need it, I need the space, roughly described here: mapping - How do i get a vector from gensim’s translation_matrix - Stack Overflow

- Oh this is even better: gbnlp/nlp3.ipynb at master · ShadarRim/gbnlp

Next time:

-

get more words, e.g. from a dictionary

-

get a space

-

play with translations

-

python - Combining/adding vectors from different word2vec models - Stack Overflow mentions transvec · PyPI that allows accessing the vectors

- But it puts both in the same vector space, without differentiating. Which is bad for me, since I need to keep them separate and some words are identical.

- But maybe I can reuse the translation matrix

Vector blues

- Jpsaris/transvec: Translate word embeddings across models fixes the things I wanted to fix myself in the original implementation w/ new gensim version — note to self, forking things is allowed and is better than editing files locally The wiki vectors are kinda garbage, with most_similar returning not semantically similar words, but ones looking like candidatte next words. And a lot of random punctuation inside the tokens. Maybe I’m oding sth wrong?

Anyway - my only reason for them was ft multilingual, I can do others now.

- I can reduce the size of the CC

- fastText/docs/crawl-vectors.md at master · facebookresearch/fastText

- reduced to 100 both of them

- The CC ones work!

- except ‘word in model’ always returns True?…

word in model.key_to_index(which is a dict) works

- really nice translations!

- Transvec

*** RuntimeError: scikit-learn estimators should always specify their parameters in the signature of their __init__ (no varargs). <class 'transvec.transformers.TranslationWordVectorizer'> with constructor (self, target: 'gensim.models.keyedvectors.KeyedVectors', *sources: 'gensim.models.keyedvectors.KeyedVectors', alpha: float = 1.0, max_iter: Optional[int] = None, tol: float = 0.001, solver: str = 'auto', missing: str = 'raise', random_state: Union[int, numpy.random.mtrand.RandomState, NoneType] = None) doesn't follow this convention.ah damn. Wasn’t an issue with the older one, though the only thing that changed is https://github.com/big-o/transvec/compare/master...Jpsaris:transvec:master

So

Decided to leave this till better times, but play with this one more hour today.

Coming back to mapping - How do i get a vector from gensim’s translation_matrix - Stack Overflow, I need

mapped_source_space.I should have used pycharm at a much earlier stage in the process.

mapped_source_spacecontains a matrix with the 4 vectors mapped to the target space.- A Space is a matrix w/ vectors, and the dicts that tell you which word is where.

- For my purposes, I can ’translate’ the interesting (to me) words and then compare their vectors to the vectors of the corresponding words in the target space.

Why does

source_spacehave 1.8k words, while the source embedding space has 200k?Ah, tmp.translate() can translate words not found in source space. Interesting!

AHA - source/target space gets build only based on the words provided for training, 1.8k in my case. Then it builds the translation matrix based on that.

BUT in translate() the target matrix gets build based on the entire vector!

Which means:

- for rus/source words, I can just use the word in the original rus embedding space, not tm’s source_space.

- for ukr words, I build the target space the same way

Results!

real картошка/картопля -> 0.28 дом/дім -> 1.16 чай/чай -> 1.17 паспорт/паспорт -> 0.40 зерно/зерно -> 0.46 нос/ніс -> 0.94 false неделя/неділя -> 0.34 город/город -> 0.35 он/он -> 0.77 речь/річ -> 0.89 родина/родина -> 0.32 сыр/сир -> 0.99 папа/папа -> 0.63 мать/мати -> 0.52Let’s normalize:

real картошка/картопля -> 0.64 дом/дім -> 0.64 чай/чай -> 0.70 паспорт/паспорт -> 0.72 зерно/зерно -> 0.60 false неделя/неділя -> 0.55 город/город -> 0.44 он/он -> 0.33 речь/річ -> 0.54 родина/родина -> 0.50 сыр/сир -> 0.66 папа/папа -> 0.51 мать/мати -> 0.56OK, so it mostly works! With good enough tresholds it can work. Words that are totally different aren’t similar (он), words that have some shared meanings (мать/мати) are closer.

Ways to improve this:

- Remove partly matching words from the list of reference translations used to build this

- Find some lists of all words in both languages

- Test the hell out of them, find the most and least similar ones

Onwards

-

https://github.com/frekwencja/most-common-words-multilingual

-

created pairs out of the words in the dictionaries that are identical (not кот/кіт/кит), will look at similarities of Russian word and Ukrainian word

-

422 such words in common

sorted by similarity (lower values = more fake friend-y). Nope, doesn’t make sense mostly. But rare words seem to be the most ‘different’ ones:

{'поза': 0.3139531, 'iphone': 0.36648884, 'галактика': 0.39758587, 'Роман': 0.40571105, 'дюйм': 0.43442175, 'араб': 0.47358453, 'друг': 0.4818558, 'альфа': 0.48779228, 'гора': 0.5069237, 'папа': 0.50889325, 'проспект': 0.5117553, 'бейсбол': 0.51532406, 'губа': 0.51682216, 'ранчо': 0.52178365, 'голова': 0.527564, 'сука': 0.5336818, 'назад': 0.53545296, 'кулак': 0.5378426, 'стейк': 0.54102343, 'шериф': 0.5427336, 'палка': 0.5516712, 'ставка': 0.5519752, 'соло': 0.5522958, 'акула': 0.5531602, 'поле': 0.55333376, 'астроном': 0.5556448, 'шина': 0.55686104, 'агентство': 0.561674, 'сосна': 0.56177, 'бургер': 0.56337166, 'франшиза': 0.5638794, 'фунт': 0.56592, 'молекула': 0.5712515, 'браузер': 0.57368404, 'полковник': 0.5739758, 'горе': 0.5740198, 'шапка': 0.57745415, 'кампус': 0.5792211, 'дрейф': 0.5800869, 'онлайн': 0.58176875, 'замок': 0.582287, 'файл': 0.58236635, 'трон': 0.5824338, 'ураган': 0.5841942, 'диван': 0.584252, 'фургон': 0.58459675, 'трейлер': 0.5846335, 'приходить': 0.58562565, 'сотня': 0.585832, 'депозит': 0.58704704, 'демон': 0.58801174, 'будка': 0.5882363, 'царство': 0.5885376, 'миля': 0.58867997, 'головоломка': 0.5903712, 'цент': 0.59163713, 'казино': 0.59246653, 'баскетбол': 0.59255254, 'марихуана': 0.59257627, 'пастор': 0.5928912, 'предок': 0.5933549, 'район': 0.5940658, 'статистика': 0.59584284, 'стартер': 0.5987516, 'сайт': 0.5988183, 'демократ': 0.5999011, 'оплата': 0.60060596, 'тендер': 0.6014088, 'орел': 0.60169894, 'гормон': 0.6021177, 'метр': 0.6023728, 'меню': 0.60291564, 'гавань': 0.6029945, 'рукав': 0.60406476, 'статуя': 0.6047057, 'скульптура': 0.60497975, 'вагон': 0.60551536, 'доза': 0.60576916, 'синдром': 0.6064756, 'тигр': 0.60673815, 'сержант': 0.6070389, 'опера': 0.60711193, 'таблетка': 0.60712767, 'фокус': 0.6080196, 'петля': 0.60817575, 'драма': 0.60842395, 'шнур': 0.6091568, 'член': 0.6092182, 'сервер': 0.6094157, 'вилка': 0.6102615, 'мода': 0.6106603, 'лейтенант': 0.6111004, 'радар': 0.6117528, 'галерея': 0.61191505, 'ворота': 0.6125873, 'чашка': 0.6132187, 'крем': 0.6133907, 'бюро': 0.61342597, 'черепаха': 0.6146957, 'секс': 0.6151523, 'носок': 0.6156026, 'подушка': 0.6160687, 'бочка': 0.61691606, 'гольф': 0.6172053, 'факультет': 0.6178817, 'резюме': 0.61848575, 'нерв': 0.6186257, 'король': 0.61903644, 'трубка': 0.6194198, 'ангел': 0.6196466, 'маска': 0.61996806, 'ферма': 0.62029755, 'резидент': 0.6205579, 'футбол': 0.6209573, 'квест': 0.62117445, 'рулон': 0.62152386, 'сарай': 0.62211347, 'слава': 0.6222329, 'блог': 0.6223742, 'ванна': 0.6224452, 'пророк': 0.6224489, 'дерево': 0.62274456, 'горло': 0.62325376, 'порт': 0.6240524, 'лосось': 0.6243047, 'альтернатива': 0.62446254, 'кровоточить': 0.62455964, 'сенатор': 0.6246379, 'спортзал': 0.6246594, 'протокол': 0.6247676, 'ракета': 0.6254694, 'салат': 0.62662274, 'супер': 0.6277698, 'патент': 0.6280118, 'авто': 0.62803495, 'монета': 0.628338, 'консенсус': 0.62834597, 'резерв': 0.62838227, 'кабель': 0.6293858, 'могила': 0.62939847, 'небо': 0.62995523, 'поправка': 0.63010347, 'кислота': 0.6313528, 'озеро': 0.6314377, 'телескоп': 0.6323617, 'чудо': 0.6325846, 'пластик': 0.6329929, 'процент': 0.63322043, 'маркер': 0.63358307, 'датчик': 0.6337889, 'кластер': 0.633797, 'детектив': 0.6341895, 'валюта': 0.63469064, 'банан': 0.6358283, 'фабрика': 0.6360865, 'сумка': 0.63627976, 'газета': 0.6364525, 'математика': 0.63761103, 'плюс': 0.63765526, 'урожай': 0.6377103, 'контраст': 0.6385834, 'аборт': 0.63913494, 'парад': 0.63918126, 'формула': 0.63957334, 'арена': 0.6396606, 'парк': 0.6401386, 'посадка': 0.6401986, 'марш': 0.6403458, 'концерт': 0.64061844, 'перспектива': 0.6413666, 'статут': 0.6419941, 'транзит': 0.64289963, 'параметр': 0.6430252, 'рука': 0.64307654, 'голод': 0.64329326, 'медаль': 0.643804, 'фестиваль': 0.6438755, 'небеса': 0.64397913, 'барабан': 0.64438117, 'картина': 0.6444177, 'вентилятор': 0.6454438, 'ресторан': 0.64582723, 'лист': 0.64694726, 'частота': 0.64801234, 'ручка': 0.6481528, 'ноутбук': 0.64842474, 'пара': 0.6486577, 'коробка': 0.64910173, 'сенат': 0.64915174, 'номер': 0.64946175, 'ремесло': 0.6498537, 'слон': 0.6499266, 'губернатор': 0.64999187, 'раковина': 0.6502305, 'трава': 0.6505385, 'мандат': 0.6511373, 'великий': 0.6511585, 'ящик': 0.65194154, 'череп': 0.6522753, 'ковбой': 0.65260696, 'корова': 0.65319675, 'честь': 0.65348136, 'легенда': 0.6538656, 'душа': 0.65390354, 'автобус': 0.6544202, 'метафора': 0.65446657, 'магазин': 0.65467703, 'удача': 0.65482104, 'волонтер': 0.65544796, 'сексуально': 0.6555309, 'ордер': 0.6557747, 'точка': 0.65612084, 'через': 0.6563236, 'глина': 0.65652716, 'значок': 0.65661323, 'плакат': 0.6568083, 'слух': 0.65709555, 'нога': 0.6572164, 'фотограф': 0.65756184, 'ненависть': 0.6578564, 'пункт': 0.65826315, 'берег': 0.65849876, 'альбом': 0.65849936, 'кролик': 0.6587049, 'масло': 0.6589803, 'бензин': 0.6590406, 'покупка': 0.65911734, 'параграф': 0.6596477, 'вакцина': 0.6603271, 'континент': 0.6609991, 'расизм': 0.6614046, 'правило': 0.661452, 'симптом': 0.661881, 'романтика': 0.6626457, 'атрибут': 0.66298646, 'олень': 0.66298693, 'кафе': 0.6635062, 'слово': 0.6636568, 'машина': 0.66397023, 'джаз': 0.663977, 'пиво': 0.6649644, 'слуга': 0.665489, 'температура': 0.66552, 'море': 0.666358, 'чувак': 0.6663854, 'комфорт': 0.66651237, 'театр': 0.66665906, 'ключ': 0.6670032, 'храм': 0.6673037, 'золото': 0.6678767, 'робот': 0.66861665, 'джентльмен': 0.66861814, 'рейтинг': 0.6686267, 'талант': 0.66881114, 'флот': 0.6701237, 'бонус': 0.67013747, 'величина': 0.67042017, 'конкурент': 0.6704642, 'конкурс': 0.6709986, 'доступ': 0.6712131, 'жанр': 0.67121863, 'пакет': 0.67209935, 'твердо': 0.6724718, 'клуб': 0.6724739, 'координатор': 0.6727365, 'глобус': 0.67277336, 'карта': 0.6731522, 'зима': 0.67379165, 'вино': 0.6737963, 'туалет': 0.6744124, 'середина': 0.6748006, 'тротуар': 0.67507124, 'законопроект': 0.6753582, 'земля': 0.6756074, 'контейнер': 0.6759613, 'посольство': 0.67680794, 'солдат': 0.6771952, 'канал': 0.677311, 'норма': 0.67757475, 'штраф': 0.67796284, 'маркетинг': 0.67837185, 'приз': 0.6790007, 'дилер': 0.6801595, 'молитва': 0.6806114, 'зона': 0.6806243, 'пояс': 0.6807122, 'автор': 0.68088144, 'рабство': 0.6815858, 'коридор': 0.68208706, 'пропаганда': 0.6826943, 'журнал': 0.6828874, 'портрет': 0.68304217, 'фермер': 0.6831401, 'порошок': 0.6831531, 'сюрприз': 0.68327177, 'камера': 0.6840434, 'фаза': 0.6842661, 'природа': 0.6843757, 'лимон': 0.68452585, 'гараж': 0.68465877, 'рецепт': 0.6848821, 'свинина': 0.6863143, 'атмосфера': 0.6865022, 'режим': 0.6870908, 'характеристика': 0.6878463, 'спонсор': 0.6879278, 'товар': 0.6880773, 'контакт': 0.6888988, 'актриса': 0.6891222, 'диск': 0.68916976, 'шоколад': 0.6892894, 'банда': 0.68934155, 'панель': 0.68947715, 'запуск': 0.6899455, 'травма': 0.690045, 'телефон': 0.69024855, 'список': 0.69054323, 'кредит': 0.69054526, 'актив': 0.69087565, 'партнерство': 0.6909646, 'спорт': 0.6914842, 'маршрут': 0.6915196, 'репортер': 0.6920864, 'сегмент': 0.6920909, 'бунт': 0.69279015, 'риторика': 0.69331145, 'школа': 0.6933826, 'оператор': 0.69384277, 'ветеран': 0.6941337, 'членство': 0.69435036, 'схема': 0.69441277, 'манера': 0.69451445, 'командир': 0.69467854, 'формат': 0.69501007, 'сцена': 0.69557995, 'секрет': 0.6961215, 'курс': 0.6964162, 'компонент': 0.69664925, 'патруль': 0.69678336, 'конверт': 0.6968681, 'символ': 0.6973544, 'насос': 0.6974678, 'океан': 0.69814134, 'критик': 0.6988366, 'доброта': 0.6989736, 'абсолютно': 0.6992678, 'акцент': 0.6998319, 'ремонт': 0.70108724, 'мама': 0.7022723, 'тихо': 0.70254886, 'правда': 0.7040037, 'транспорт': 0.704239, 'книга': 0.7051158, 'вода': 0.7064695, 'кухня': 0.7070433, 'костюм': 0.7073295, 'дикий': 0.70741034, 'прокурор': 0.70768344, 'консультант': 0.707697, 'квартира': 0.7078515, 'шанс': 0.70874536, 'сила': 0.70880103, 'хаос': 0.7089504, 'дебют': 0.7092187, 'завтра': 0.7092679, 'горизонт': 0.7093906, 'модель': 0.7097884, 'запах': 0.710207, 'сама': 0.71082854, 'весна': 0.7109366, 'орган': 0.7114152, 'далекий': 0.7118393, 'смерть': 0.71213734, 'медсестра': 0.71224624, 'молоко': 0.7123647, 'союз': 0.71299064, 'звук': 0.71361446, 'метод': 0.7138604, 'корпус': 0.7141677, 'приятель': 0.71538115, 'центр': 0.716277, 'максимум': 0.7162813, 'страх': 0.7166886, 'велосипед': 0.7168154, 'контроль': 0.7171681, 'ритуал': 0.71721196, 'команда': 0.7175366, 'молоток': 0.71759546, 'цикл': 0.71968937, 'жертва': 0.7198437, 'статус': 0.7203152, 'пульс': 0.7206338, 'тренер': 0.72116625, 'сектор': 0.7221448, 'музей': 0.72323525, 'сфера': 0.7245963, 'пейзаж': 0.7246053, 'вниз': 0.72528857, 'редактор': 0.7254647, 'тема': 0.7256167, 'агент': 0.7256874, 'дизайнер': 0.72618955, 'деталь': 0.72680634, 'банк': 0.7270782, 'союзник': 0.72750694, 'жест': 0.7279984, 'наставник': 0.7282404, 'тактика': 0.72968495, 'спектр': 0.7299538, 'проект': 0.7302779, 'художник': 0.7304505, 'далеко': 0.7306006, 'ресурс': 0.73075294, 'половина': 0.7318293, 'явно': 0.7323554, 'день': 0.7337892, 'юрист': 0.73461473, 'широко': 0.73490566, 'закон': 0.7372453, 'психолог': 0.7373602, 'сигарета': 0.73835427, 'проблема': 0.7388488, 'аргумент': 0.7389784, 'старший': 0.7395191, 'продукт': 0.7395814, 'ритм': 0.7406945, 'широкий': 0.7409786, 'голос': 0.7423325, 'урок': 0.74272805, 'масштаб': 0.74474066, 'критика': 0.74535364, 'правильно': 0.74695253, 'авторитет': 0.74697924, 'активно': 0.74720675, 'причина': 0.7479735, 'сестра': 0.74925977, 'сигнал': 0.749686, 'алкоголь': 0.7517742, 'регулярно': 0.7521055, 'мотив': 0.7527843, 'бюджет': 0.7531772, 'плоский': 0.754082, 'посол': 0.75505507, 'скандал': 0.75518423, 'дизайн': 0.75567746, 'персонал': 0.7561288, 'адвокат': 0.7561835, 'принцип': 0.75786924, 'фонд': 0.7583069, 'структура': 0.75888604, 'дискурс': 0.7596848, 'вперед': 0.76067656, 'контур': 0.7607424, 'спортсмен': 0.7616756, 'стимул': 0.7622434, 'партнер': 0.76245433, 'стиль': 0.76301545, 'сильно': 0.7661394, 'текст': 0.7662303, 'фактор': 0.76729685, 'герой': 0.7697237, 'предмет': 0.775718, 'часто': 0.7780384, 'план': 0.77855974, 'рано': 0.78059715, 'факт': 0.782439, 'конкретно': 0.78783923, 'сорок': 0.79080343, 'аспект': 0.79219675, 'контекст': 0.7926827, 'роль': 0.796745, 'президент': 0.8007479, 'результат': 0.80227, 'десять': 0.8071967, 'скоро': 0.80976427, 'тонкий': 0.8100516, 'момент': 0.8120169, 'нести': 0.81280494, 'документ': 0.8216758, 'просто': 0.8222313, 'очевидно': 0.8242744, 'точно': 0.83183587, 'один': 0.83644223, 'пройти': 0.84026355}ways to improve:

-

remove potential bad words from training set

-

expand looking for candidate words by doing predictable changes a la <_(@Sira2019) “Towards an automatic recognition of mixed languages: The Ukrainian-Russian hybrid language Surzhyk” (2019) / Nataliya Sira, Giorgio Maria Di Nunzio, Viviana Nosilia: z / http://arxiv.org/abs/1912.08582 / _>

-

add weighting based on frequency, rarer words will have less stable embeddings

-

look at other trained vectors, ideally sth more processed

-

And actually thinking about it — is there anything I can solve through this that I can’t solve by parsing one or more dictionaries, maybe even making embeddings of the definitions of the various words?

- That said most other research on the topic of automatically finding cognates had this issue as well

- And no one did this that way, and no one ever did this for RU/UA

Fazit: leaving this alone till after the masterarbeit as a side project. It’s incredibly interesting but probably not directly practical. Sad.

New vim and jupyterlab insert mode mappings

Jupyter-lab

By default,

<Esc>— bad idea for the same reason in vim it’s a bad idea.AND my xkeymap-level keyboard mapping for Esc doesn’t seem to work here.

Default-2 is <C-]> which is impossible because of my custom keyboard layout.

Will be

<C-=>.{ "command": "vim:leave-insert-mode", "selector": ".jp-NotebookPanel[data-jp-vim-mode='true'] .jp-Notebook.jp-mod-editMode", "keys": [ "Ctrl =", ] }(I can’t figure out why

,letc. don’t work in jupyterlab for this purpose)vim

(

<leader>is,)"Insert mode mappings " Leave insert mode imap <leader>l <Esc> imap qj <Esc> " Write, write and close imap ,, <Esc>:x<CR> map ,. :w<CR>… I will have an unified set of bindings for this someday, I promise.

- Alignment

-

Day 1843 (17 Jan 2024)

Things I'll do differently for my next thesis

- Sources first, text second: I spent a lot of time polishing specific sentences etc., loosely linking relevant sources, but when needing to actually add sources finding a specific one to support a statement is hard, even if I have multiple ones supporting different parts of the statement or the spirit of it

-

- some people translate book names into English on Wikipedia, sourcing the Ukrainian one and the translation is right and they are awesome etc., but that was unexpected, hah

- and generally dealing with translations is hard

-

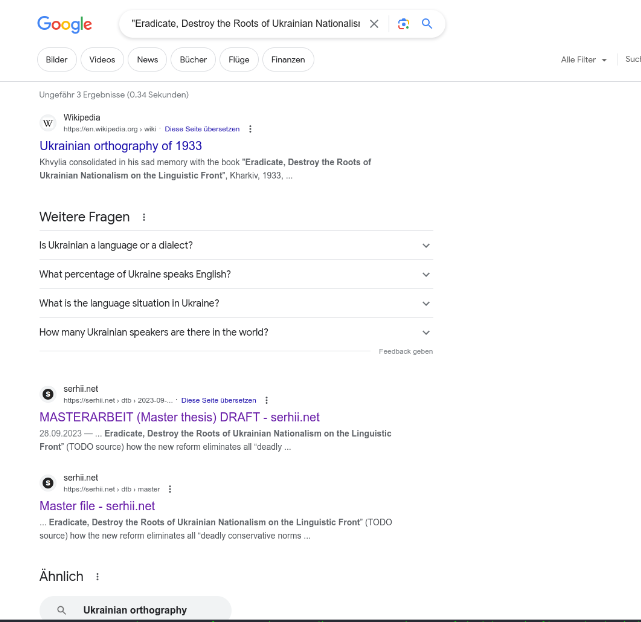

- English-language sources for common Ukrainian bits is hard

- the executed renaissance - two papers about it, both critical of it, but finding something that confirms that it’s a thing that exists is not trivial

- pro-ER: article on a website The Executed Renaissance: The Book that Saved Ukrainian Literature from Soviet Oblivion | Article | Culture.pl

- anti-ER: the only two published citeable sources I ound

- the executed renaissance - two papers about it, both critical of it, but finding something that confirms that it’s a thing that exists is not trivial

- Generally the approach should be as mentioned by I think Scott Alexander or someone in 231002-2311 Meta about writing a Masterarbeit: follow the sources wherever they lead, instead of writing something when inspired and then look for sources. You may be right but much less rephrasing if you do it starting from sources to begin with.

- Focus and prioritize

- the approach I do for tasks/theory/steps should have been done for the text as well. Ukrainian language history is the least relevant part, even if interesting to me.

- Added the ‘use pycharm earlier bit’ to 231207-1937 Note to self about OOP

- Spend less time on Ukrainian grammar and more on other eval harnesses and the literature, incl. other cool packages that exist

- Sources first, text second: I spent a lot of time polishing specific sentences etc., loosely linking relevant sources, but when needing to actually add sources finding a specific one to support a statement is hard, even if I have multiple ones supporting different parts of the statement or the spirit of it

-

Day 1842 (16 Jan 2024)

Rounding rules and notations

Rounding.

Previously: 211018-1510 Python rounding behaviour with TL;DR that python uses banker’s rounding, with .5th rounding towards the even number.

-

Floor/ceil have their usual latex notation as

\rceil,\rfloor(see LaTeX/Mathematics - Wikibooks, open books for an open world at ‘delimiters’) -

“Normal” rounding (towards nearest integer) has no standard notation: ceiling and floor functions - What is the mathematical notation for rounding a given number to the nearest integer? - Mathematics Stack Exchange

- $\lfloor3.54\rceil$ is one notation people mention

- Some suggest $[3.54]$ but most hate this because overused

- $nint(3.45)$ (’nearest integer function’) works

let XXX denote the standard rounding function

-

Bankers’ rounding (that python and everyone else use for tie-breaking for normal rounding and .5) has no standard notation as well

Let $\lfloor x \rceil$ denote "round half to even" rounding (a.k.a. "Banker's rounding"), consistent with Python's built-in round() and NumPy's np.round() functions.