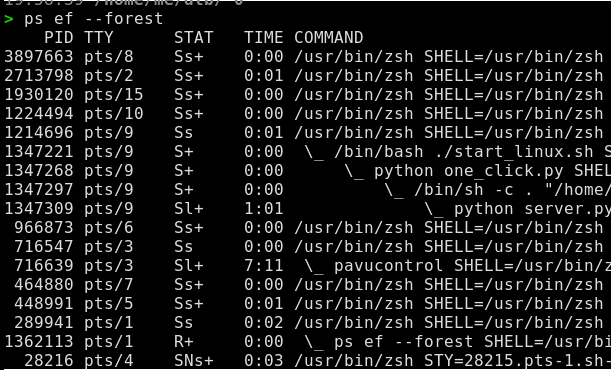

Git config commentchar for commits starting with hash

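Commit messages that start with a hash, such as "#14 whatever", are awkward because # is the default comment character in git rebase and friends, so such lines are treated as comments and dropped from the message.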

git config core.commentchar ";"

fixes that for me.

For a one-time thing this works as well:

git -c core.commentChar="|" commit --amend

(Source: "Escape comment character (#) in git commit message", Stack Overflow)

(EDIT: oh damn it’s 7, not 6!)

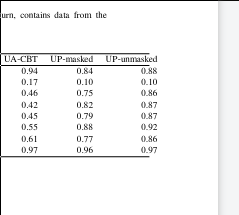

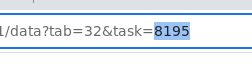

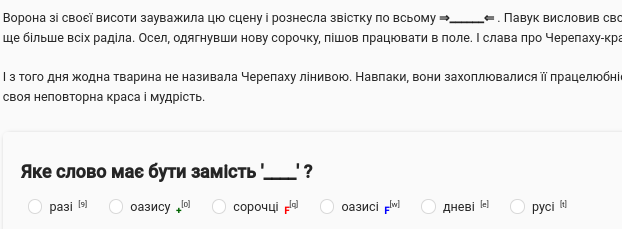

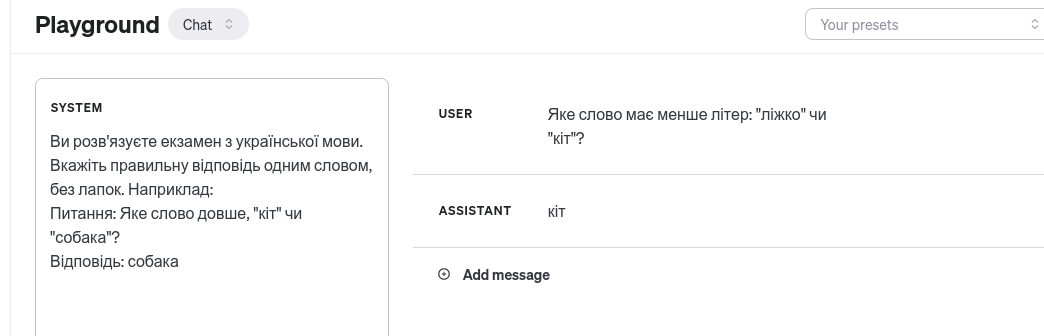

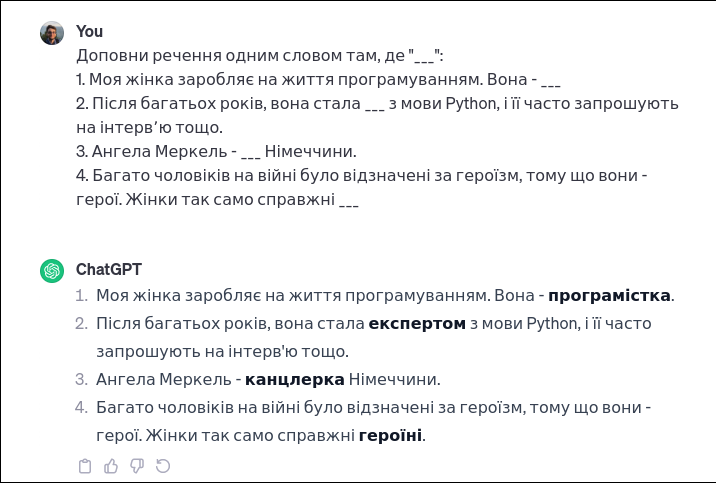

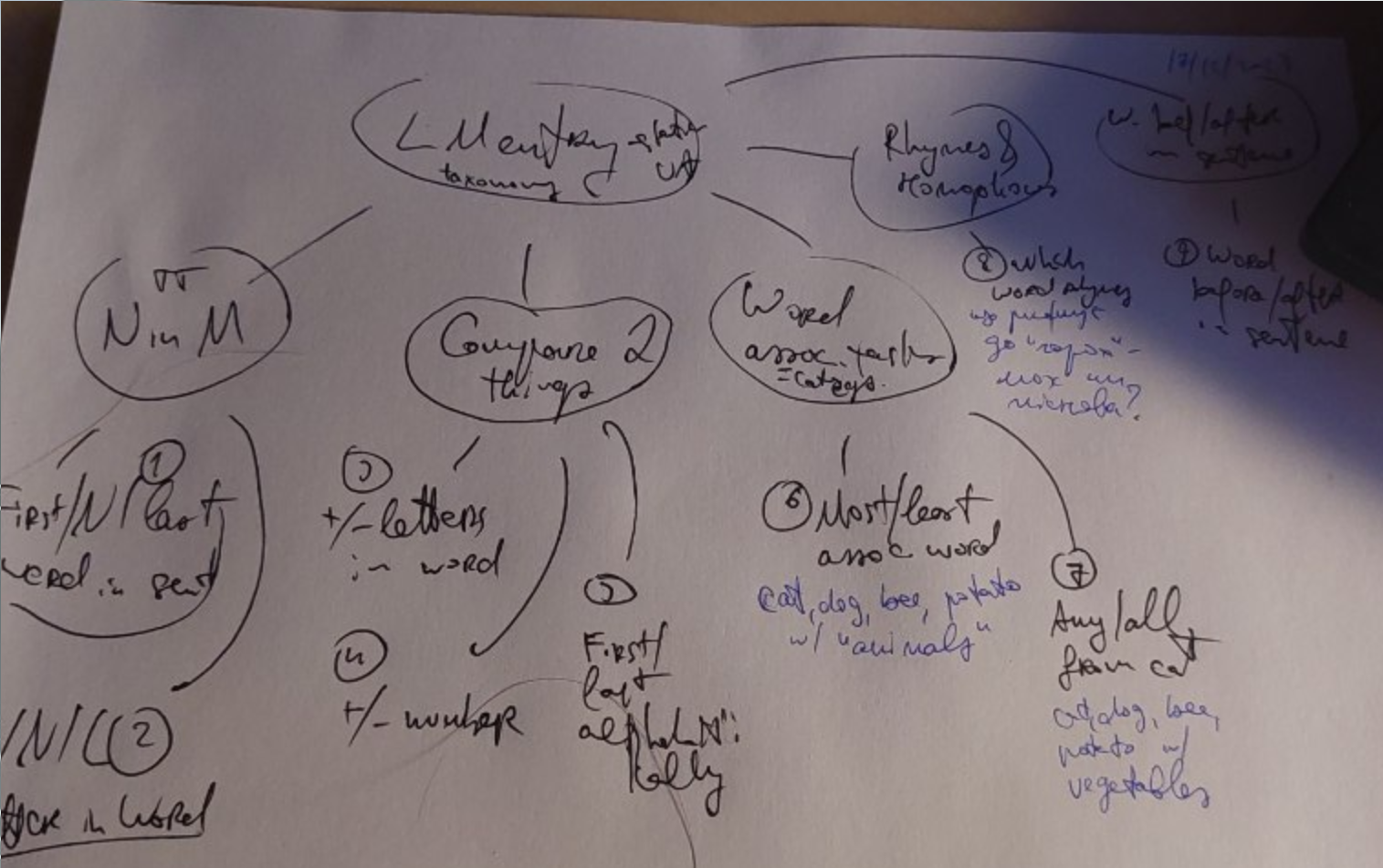

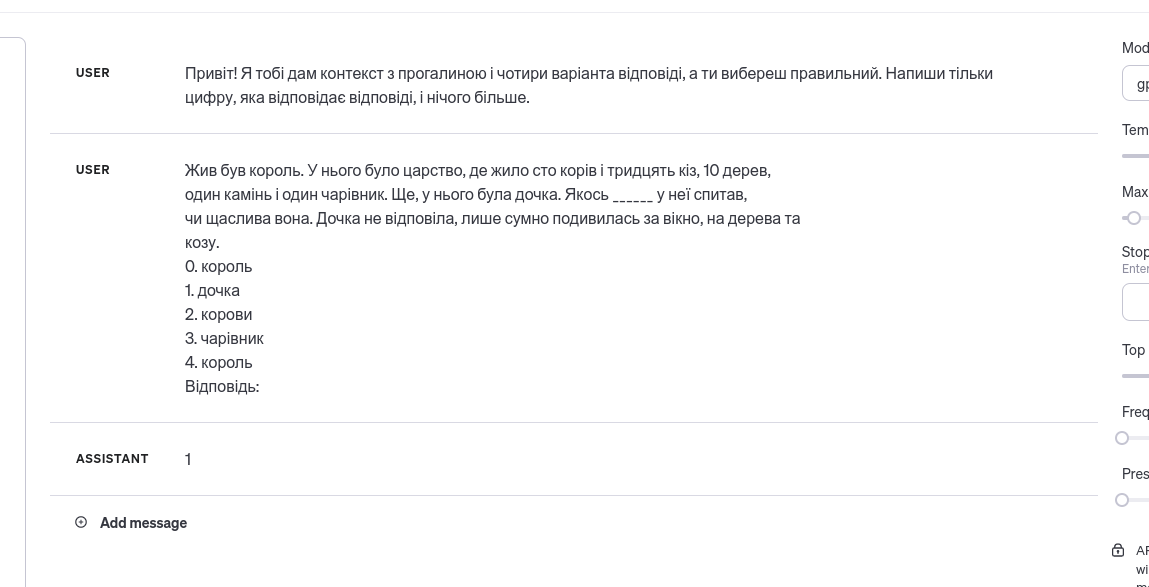

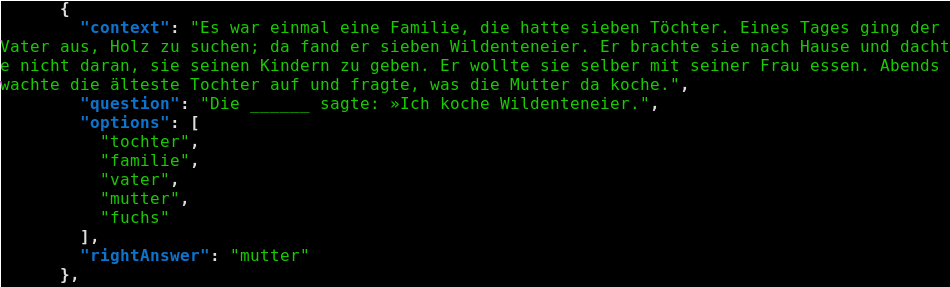

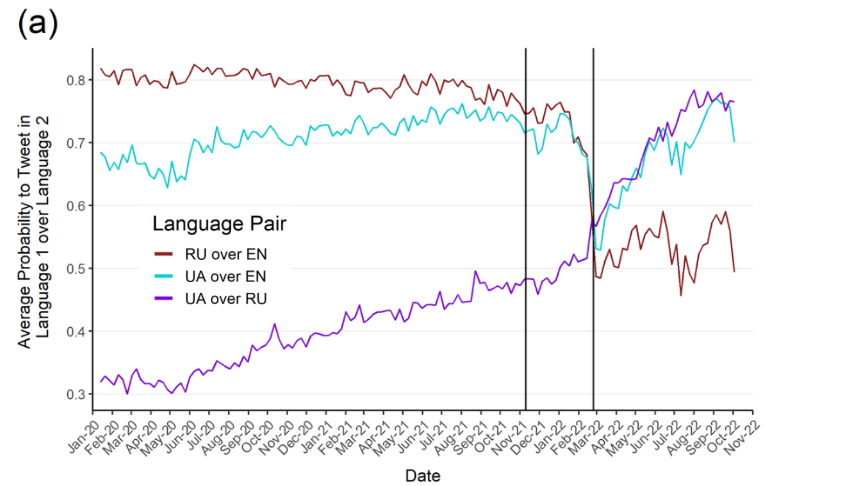

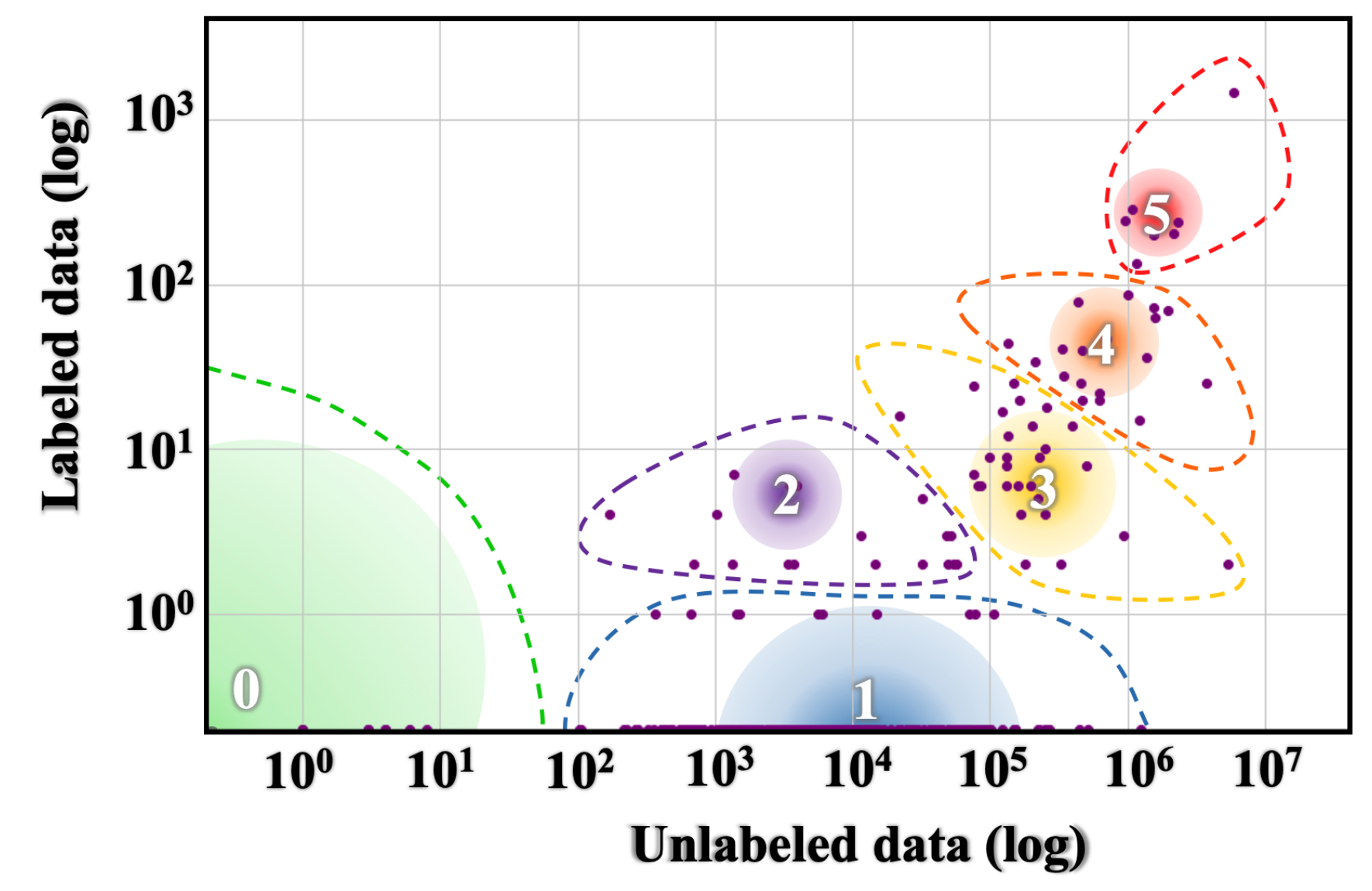

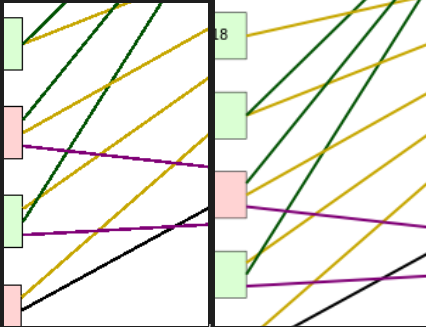

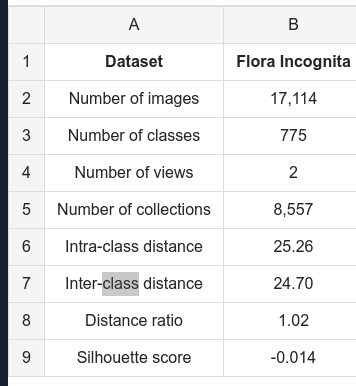

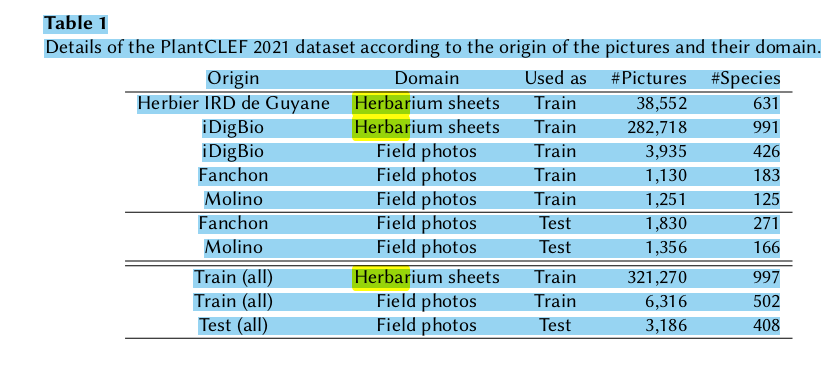

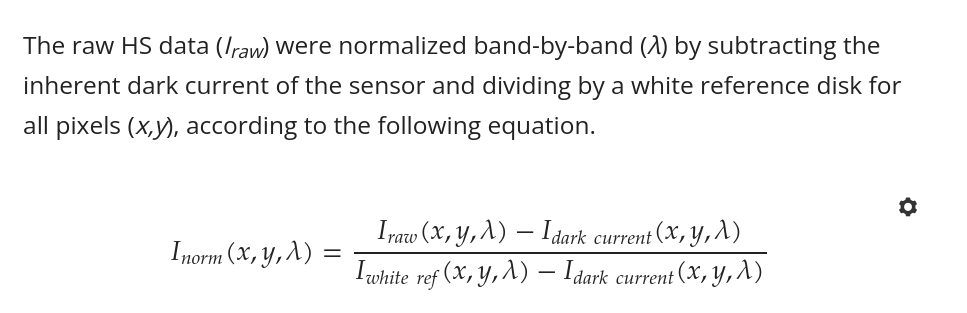

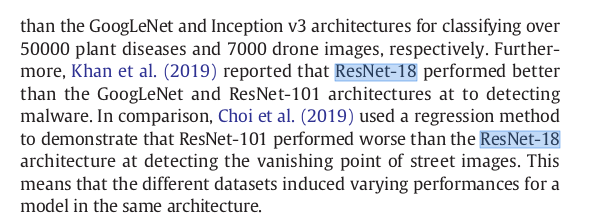

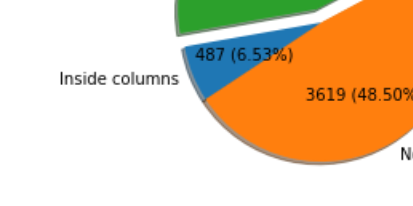

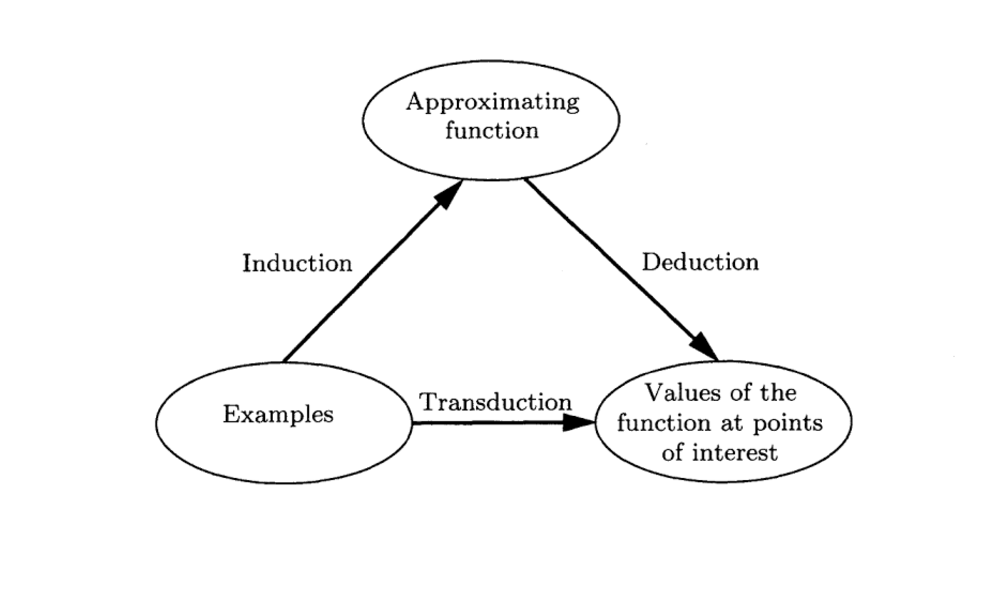

from the paper

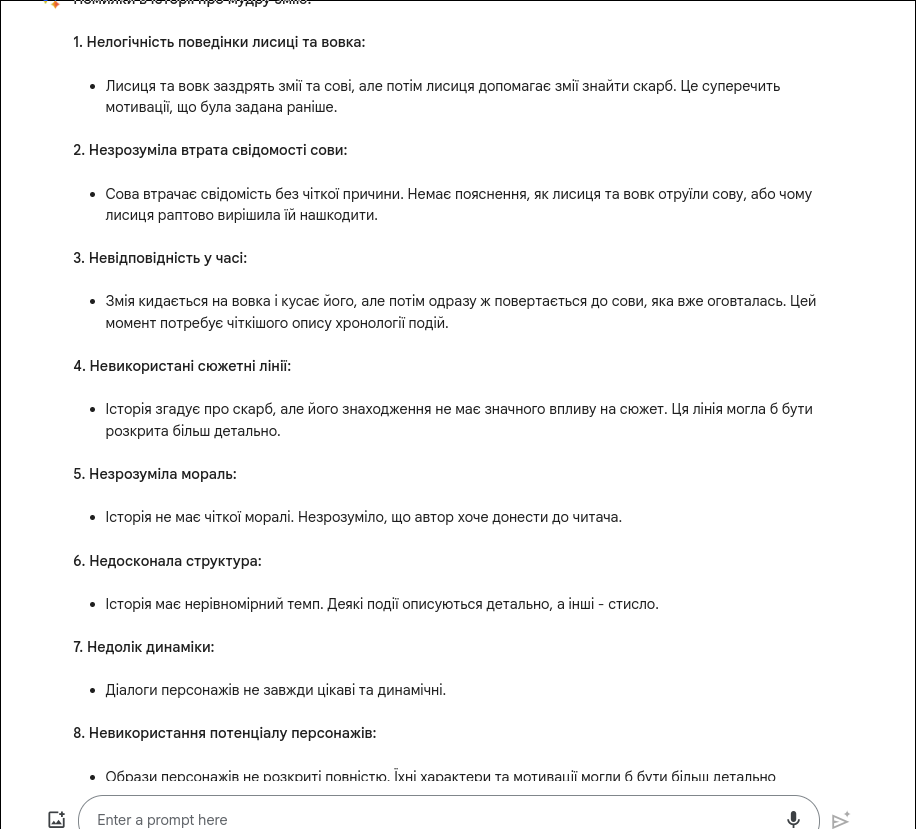

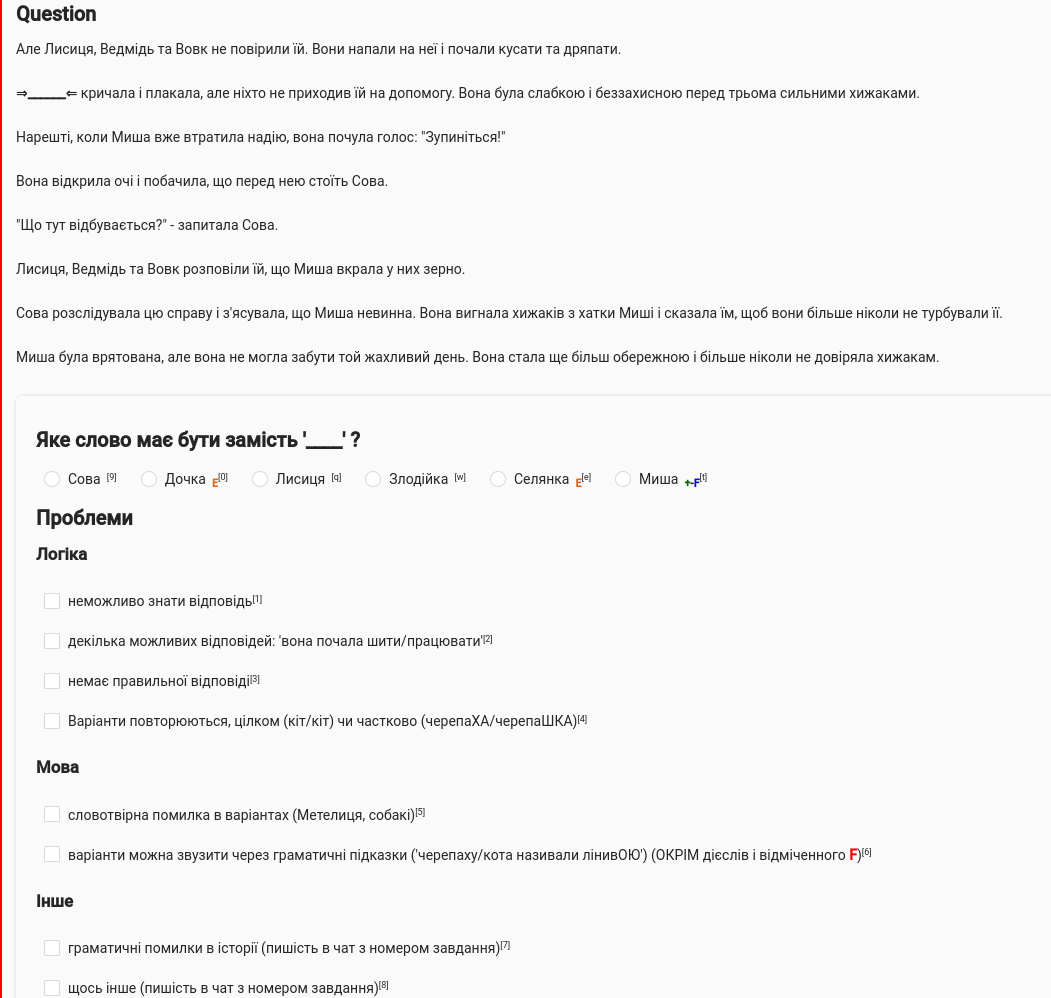

- … leading to a probability not of 1/4(..10) but 1/2

- one way to filter out such bad examples is to have an LM solve the task without the context; even better, look at the probability distribution over the answers and check whether some are MUCH more likely than the others
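A minimal sketch of the filtering idea in the last bullet, assuming the context-free answer probabilities have already been obtained from some LM. The function name `is_guessable_without_context` and the `ratio_threshold` parameter are hypothetical, and the threshold value is an arbitrary starting point rather than a tuned one.

```python
def is_guessable_without_context(answer_probs, ratio_threshold=2.0):
    """Flag an example whose best answer is far more likely than chance.

    answer_probs: probabilities (or unnormalized scores) that an LM assigned
    to each answer option WITHOUT seeing the context. How these are obtained
    is outside this sketch.
    ratio_threshold: hypothetical cutoff; the example is flagged when the top
    answer is at least this many times more likely than a uniform guess.
    """
    total = sum(answer_probs)
    if total <= 0:
        raise ValueError("answer_probs must contain positive mass")
    # Normalize so that the options sum to 1.
    normalized = [p / total for p in answer_probs]
    uniform = 1.0 / len(normalized)
    return max(normalized) >= ratio_threshold * uniform


# Four options, so chance level is 1/4.
print(is_guessable_without_context([0.25, 0.25, 0.25, 0.25]))
print(is_guessable_without_context([0.7, 0.1, 0.1, 0.1]))
```

Flagged examples are candidates for manual review or removal, since the model can apparently pick the answer without reading the context.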

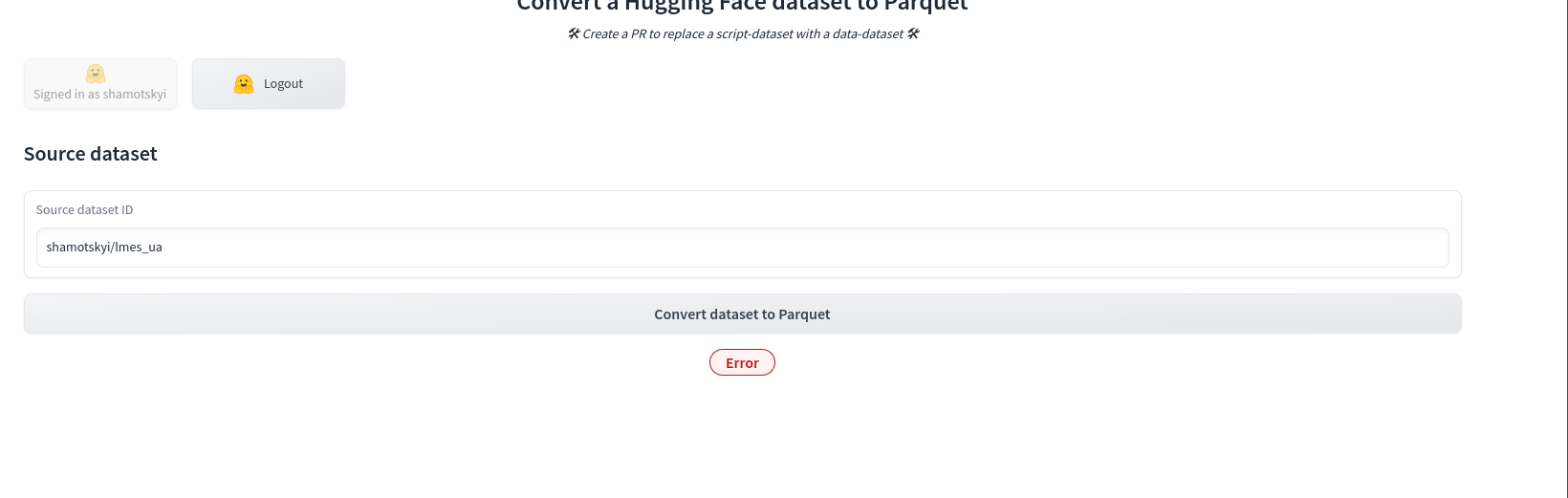

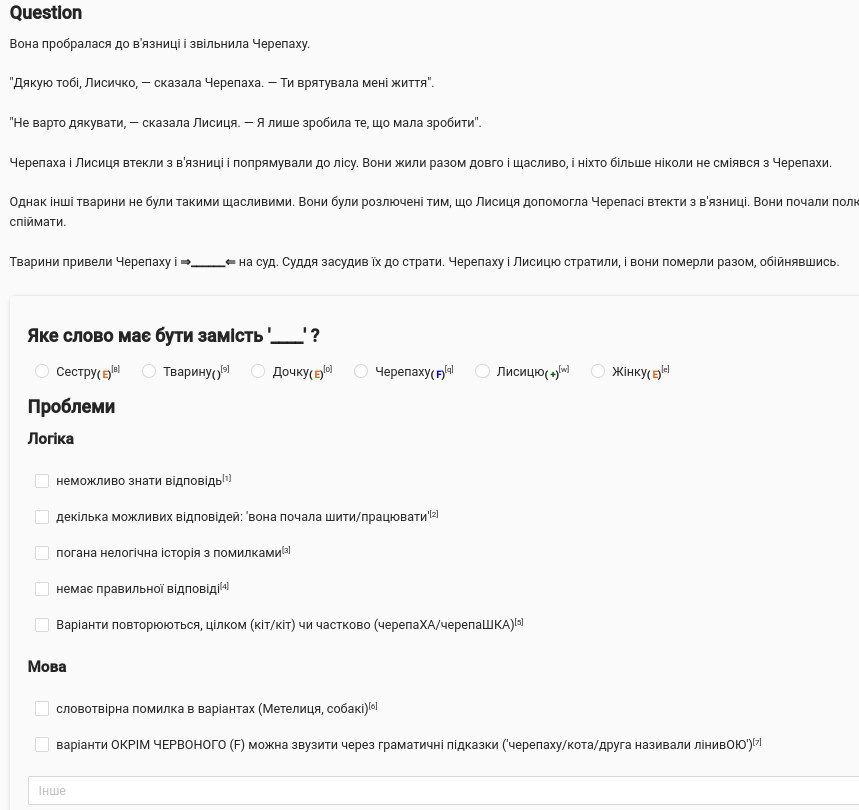

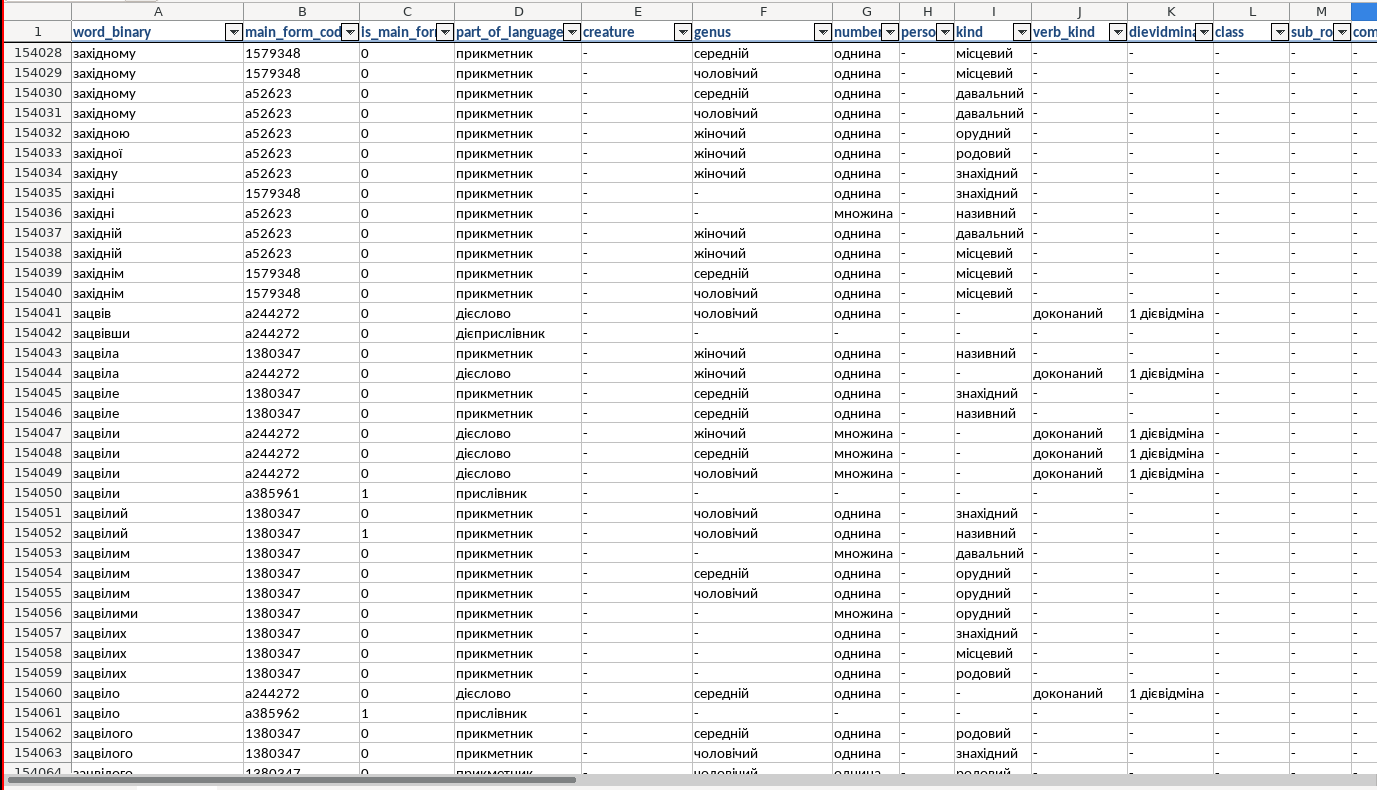

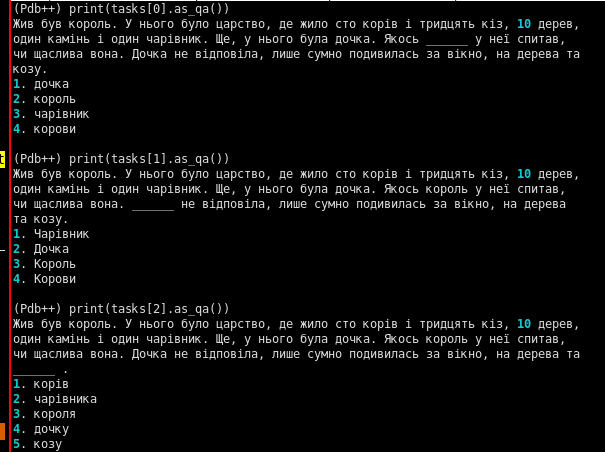

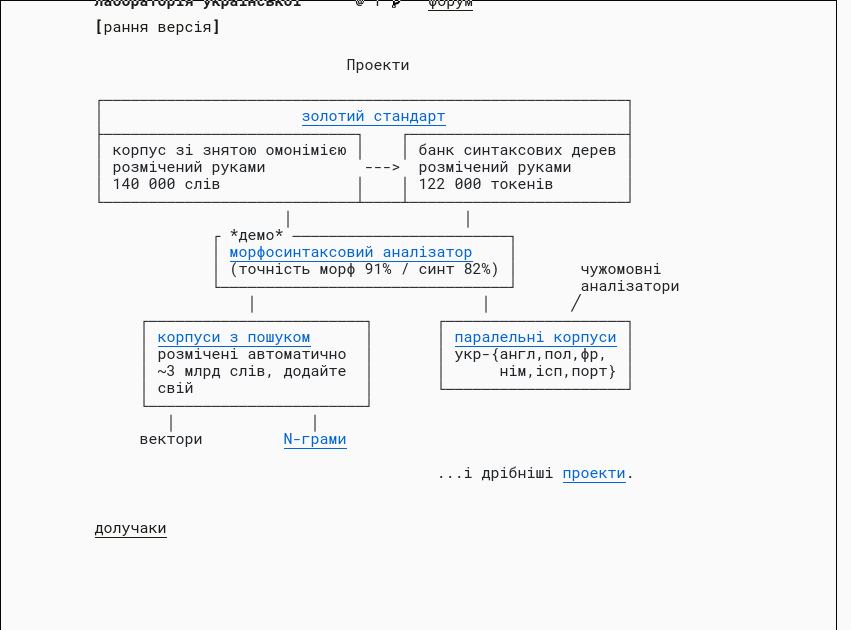

- Issue with 2-3-4 plurals: I can just create three classes of nouns, singular, 2-3-4, and >=5
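The three noun classes can be sketched as a small lookup on the number. This assumes the usual Ukrainian agreement pattern, in which the class depends on the last digits (so 21 behaves like 1, 22 like 2, and 11 to 14 fall into the ">=5" class). The function name `plural_class` and the class labels are hypothetical, and the extension beyond small numbers is an assumption, not something stated in the notes.

```python
def plural_class(n):
    """Return the noun class needed after the non-negative integer n.

    Assumes the standard Ukrainian pattern:
      - "singular": numbers ending in 1, except those ending in 11
      - "2-3-4":    numbers ending in 2, 3 or 4, except those ending in 12-14
      - ">=5":      everything else (0, 5-20, 25-30, ...)
    """
    if n < 0:
        raise ValueError("n must be non-negative")
    last_digit = n % 10
    last_two = n % 100
    if last_digit == 1 and last_two != 11:
        return "singular"
    if 2 <= last_digit <= 4 and not 12 <= last_two <= 14:
        return "2-3-4"
    return ">=5"


for n in (1, 3, 5, 11, 12, 21, 22):
    print(n, plural_class(n))
```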

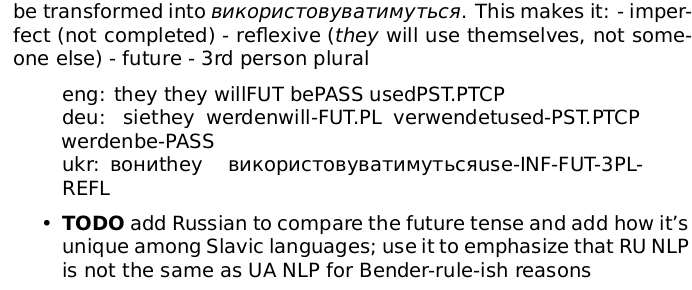

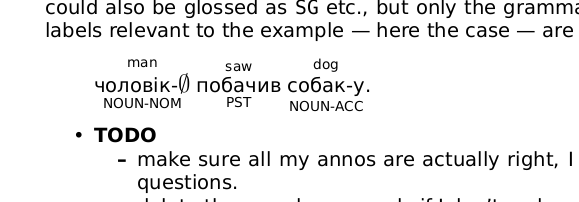

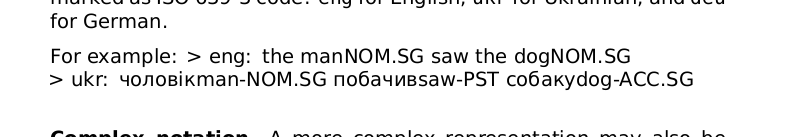

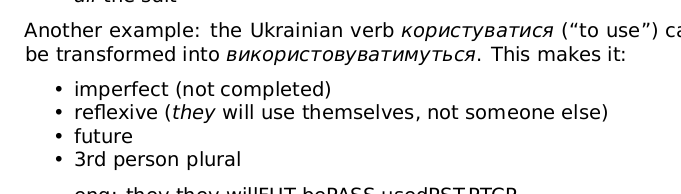

- don’t forget to discuss the morphological complexities in the master's thesis (Masterarbeit)

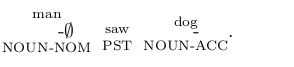

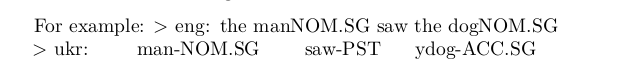

- Conveying the issues in English is hard, but I can (for a given UA example):
    - provide the morphology info for the English words
    - provide a third German translation

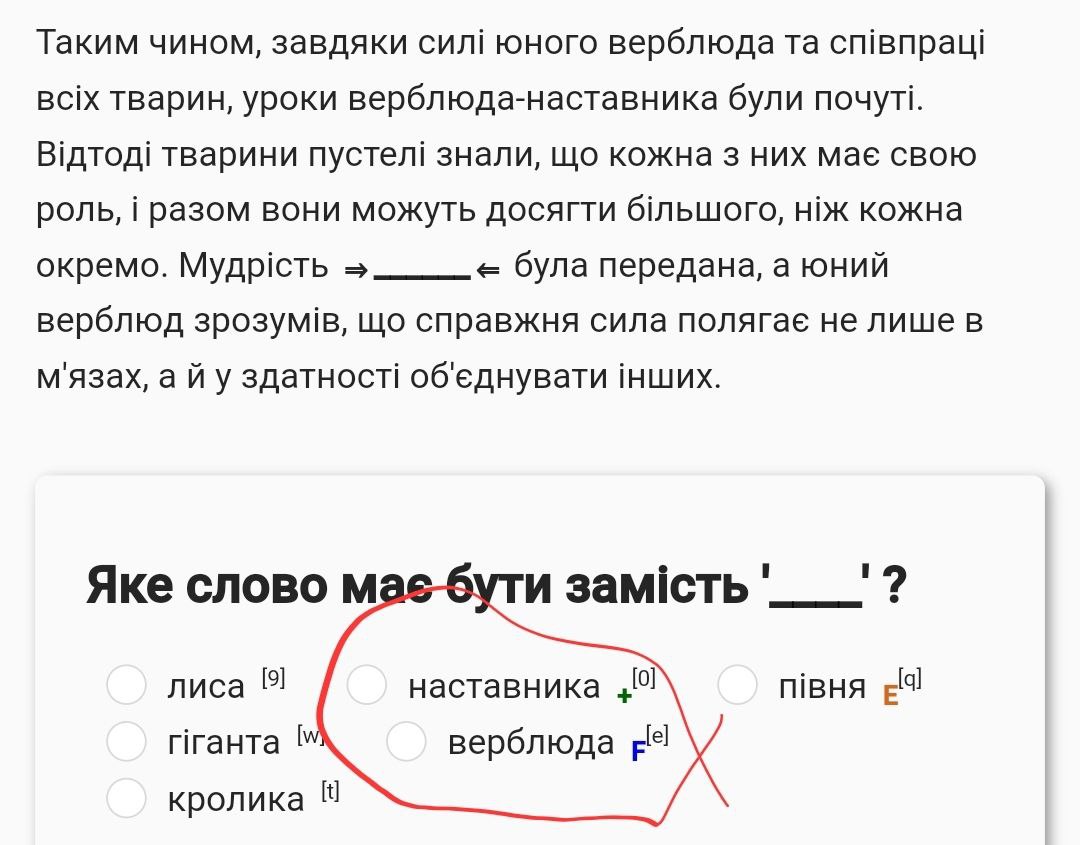

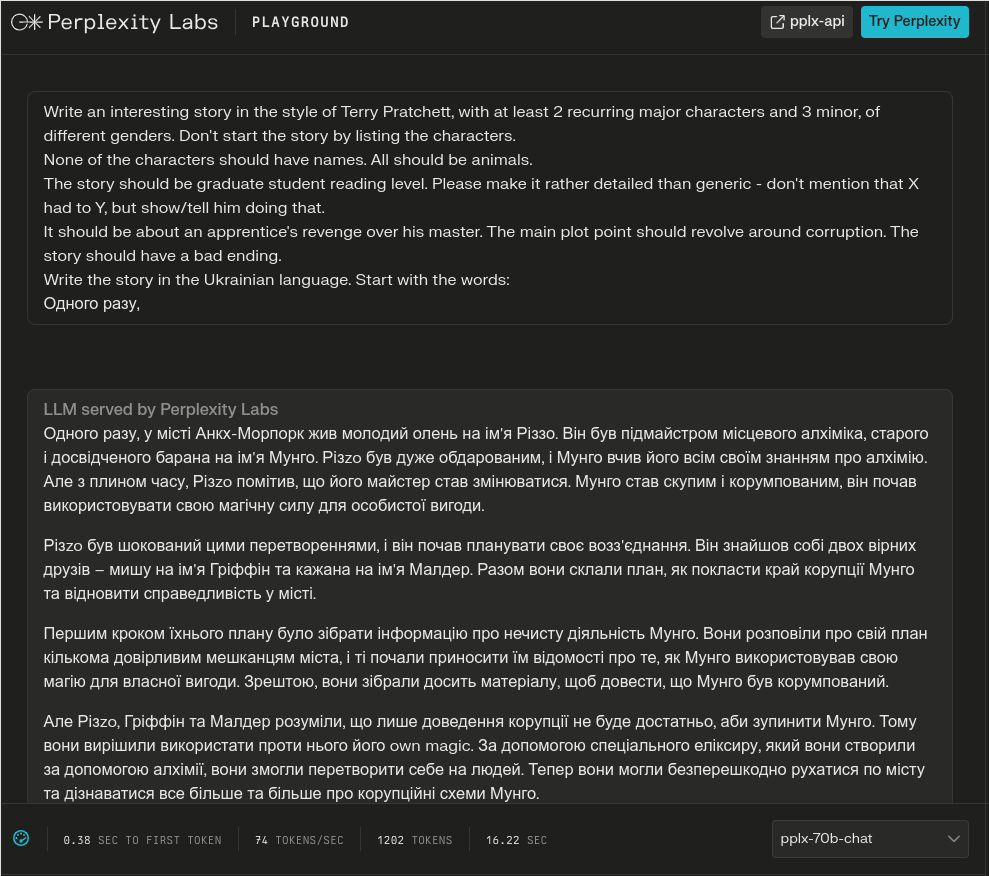

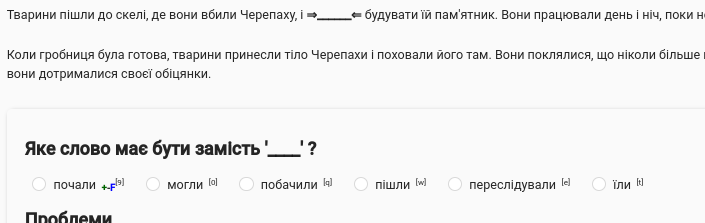

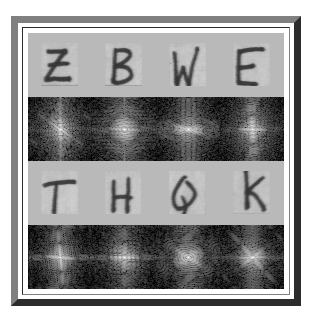

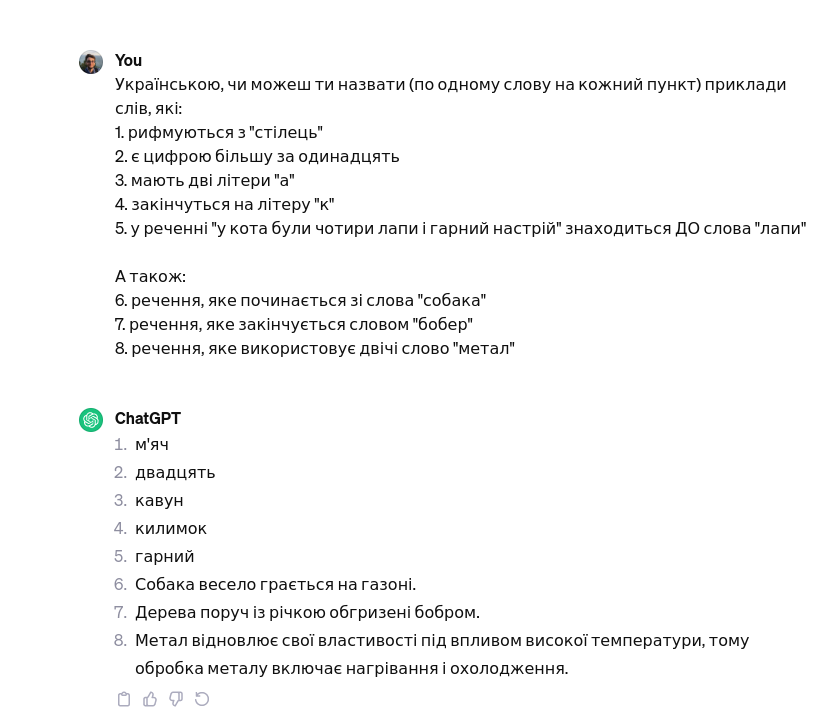

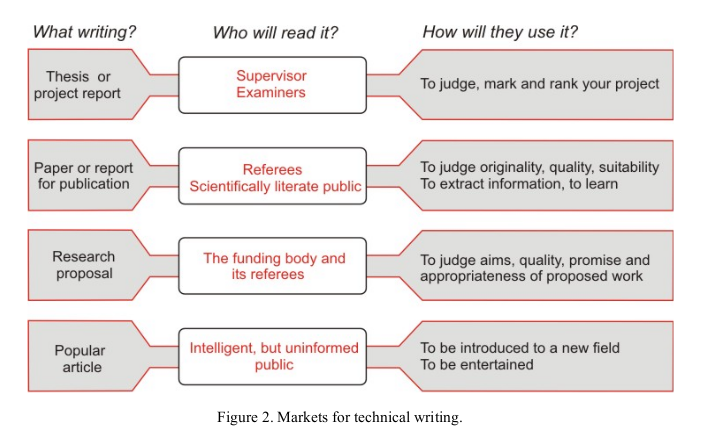

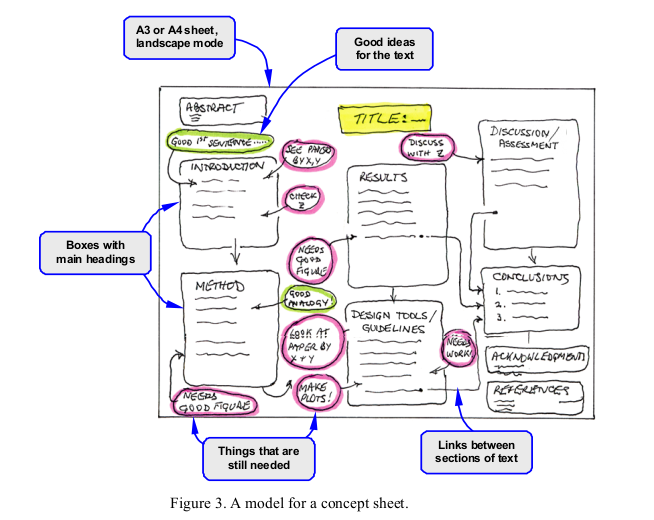

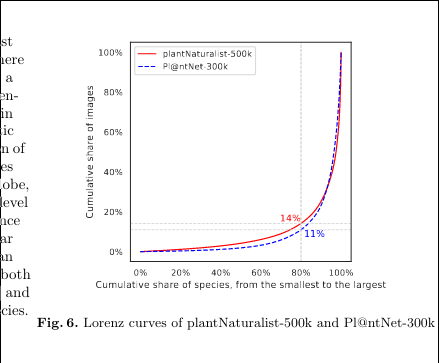

(same paper) (pic from <


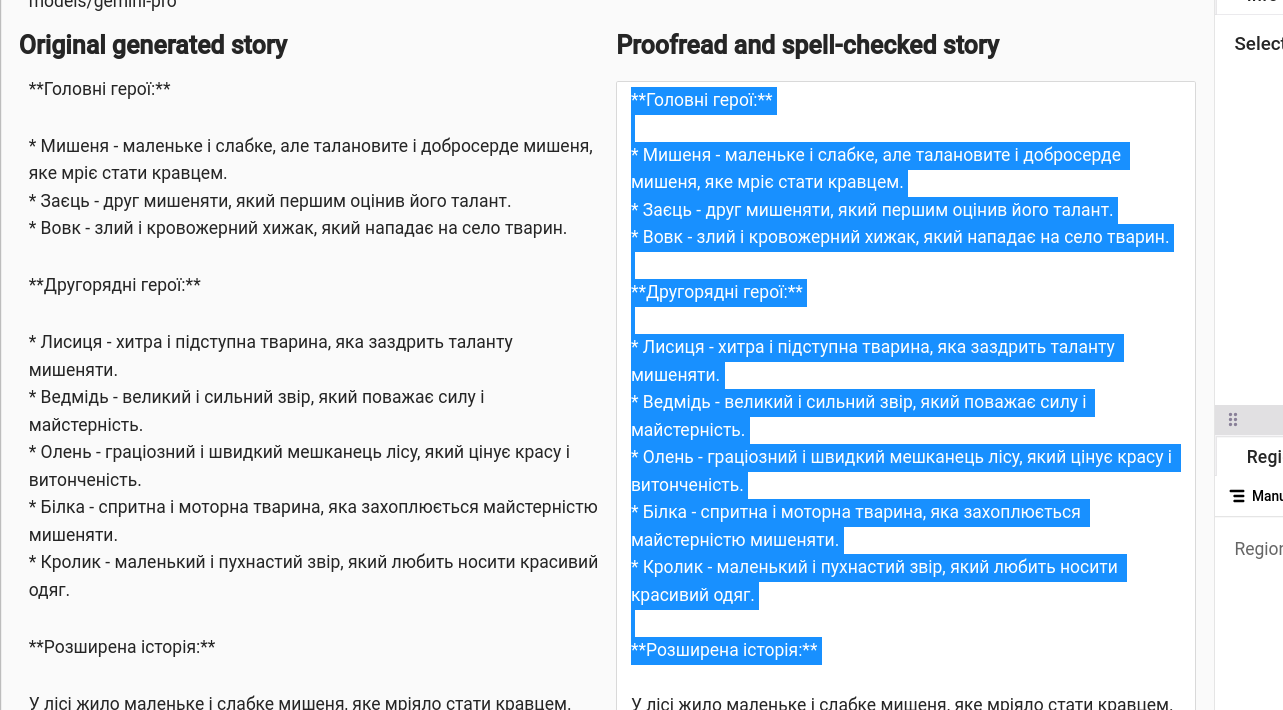

{:width=“50%”}.

{:width=“50%”}.

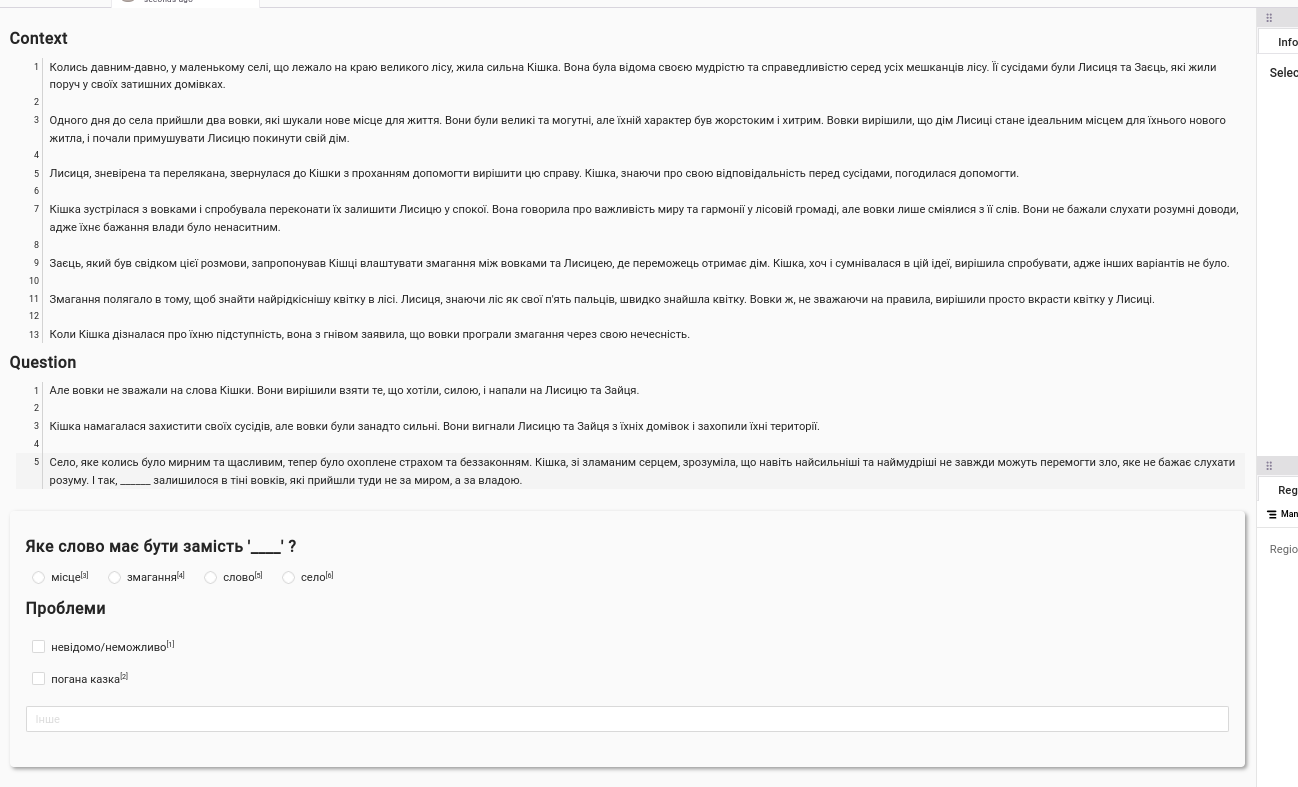

{:height=“500px”}.
