My custom keyboard layout with dvorak and LEDs

3439 words, ~13 min read

Intro

My keyboard setup has always been weird, and usually glued together with multiple programs. Some time ago I decided to re-do it from scratch and this led to some BIG improvements and simplifications I’m really happy about, and want to describe here.

Context: I regularly type in English, German, Russian, Ukrainian, and write a lot of Python code. I use vim, qutebrowser, tiling WMs and my workflows are very keyboard-centric.

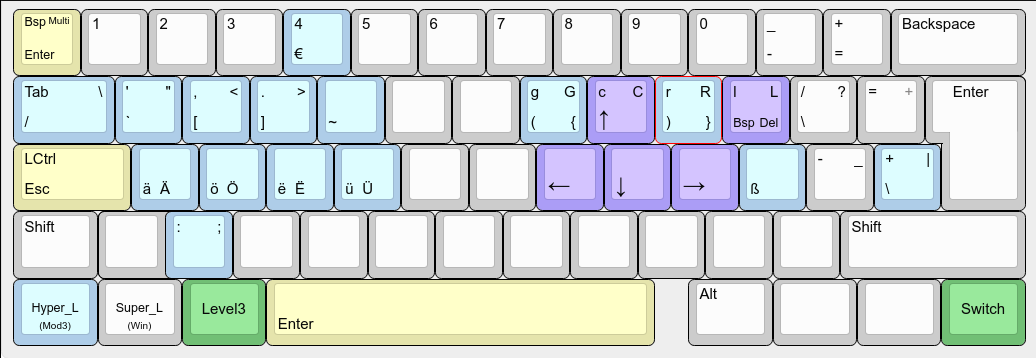

TL;DR: This describes my current custom keyboard layout that has:

- only two sub-layouts (latin/cyrillic)

- the Caps Lock LED indicating the active one

- Caps Lock acting both as Ctrl and Escape

- things like arrow keys, backspace accessible without moving the right hand

- Python characters moved closer to the main row

- UPDATE Feb. 2023: Added numpad keys on level5! But not level5 itself. For now,

-option 'lv5:ralt_switch_lock'tosetxkbmapworks. For an updated picture, see the github repo.

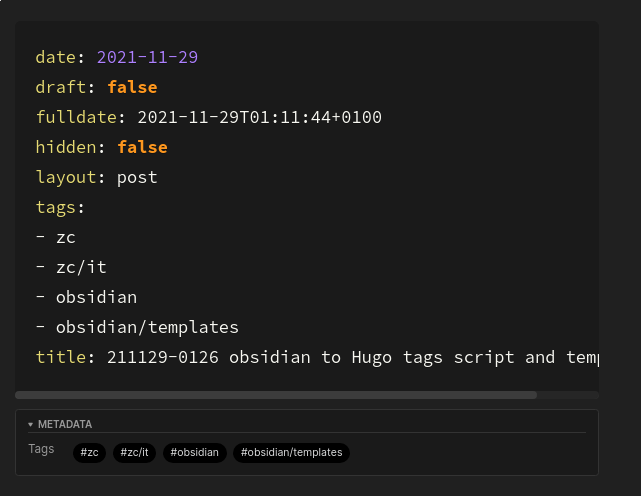

It looks like this1:

and is available on Github.