serhii.net

In the middle of the desert you can say anything you want

-

Day 1899 (13 Mar 2024)

Huggingface Hub prefers zip archives because they support streaming

Random nugget from Document to compress data files before uploading · Issue #5687 · huggingface/datasets:

- gz, to compress individual files

- zip, to compress and archive multiple files; zip is preferred rather than tar because it supports streaming out of the box

(Streaming: https://huggingface.co/docs/datasets/v2.4.0/en/stream TL;DR: for very large datasets, don't download the entire dataset; add `streaming=True` to the `load_dataset()` call.)
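Here is why zip helps, sketched with the standard library only. A zip archive ends with a central directory that indexes every member. A reader can therefore open one file and decompress it lazily without unpacking the others. This is only an analogy for the Hub's behaviour, not how `datasets` implements streaming. The helper names and file names are made up.

```python
import io
import json
import zipfile


def make_zip(files):
    """Pack {member_name: [records]} into an in-memory zip of JSONL files."""
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for name, rows in files.items():
            zf.writestr(name, "".join(json.dumps(r) + "\n" for r in rows))
    return buf.getvalue()


def stream_member(archive, name):
    """Yield records from a single member, decompressing it line by line."""
    with zipfile.ZipFile(io.BytesIO(archive)) as zf:
        # The central directory tells ZipFile where `name` starts; other members are not unpacked.
        with io.TextIOWrapper(zf.open(name), encoding="utf-8") as fh:
            for line in fh:
                yield json.loads(line)


archive = make_zip({
    "train.jsonl": [{"id": 1}, {"id": 2}],
    "test.jsonl": [{"id": 3}],
})
print(next(stream_member(archive, "test.jsonl")))  # {'id': 3}
```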

-

Day 1877 (20 Feb 2024)

LaTeX has paragraphs and subparagraphs

TIL from NASA's (!) docs[^1] that there are two sub-levels below `subsubsection`:

```latex
\subsubsection{Example Sub-Sub-Section}
\label{sec:example-subsubsection}

\ref{sec:example-subsubsection} is an example of \texttt{subsubsection}.

\paragraph{Example Paragraph}
\label{sec:example-paragraph}

\ref{sec:example-paragraph} is an example of \texttt{paragraph}.

\subparagraph{Example Sub-Paragraph}
\label{sec:example-subparagraph}

\ref{sec:example-subparagraph} is an example of \texttt{subparagraph}.
```

I so needed them!

Things I'll do differently next time when creating datasets

-

Day 1875 (18 Feb 2024)

Huggingface dataset build configs

Goal: create multiple dataset configs for 231203-1745 Masterarbeit LMentry-static-UA task.

- Example: datasets/templates/new_dataset_script.py at main · huggingface/datasets

- Tutorial: Builder classes

- One example they give: https://huggingface.co/datasets/frgfm/imagenette/blob/main/imagenette.py

Developing:

- One can provide paths to local files in `_URLS` as well, to speed up development!

It’s not magic dictionaries, it’s basically syntax known to me (with Features etc.) which is neat!
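Without the `datasets` machinery, a builder script with several configs boils down to a generator that picks columns depending on the config name. The following is a plain-Python sketch of that idea, assuming JSONL input. It uses a per-config mapping instead of an `if/elif` chain. `generate_examples`, `FIELDS_BY_CONFIG` and the `LOWTask` mapping are hypothetical. In a real builder this logic would live in `_generate_examples()` and read `self.config.name`.

```python
import json

# Hypothetical column mapping per config: output column -> column in the JSONL file.
# The WhichWordWrongCatTask entry mirrors that branch of the script; LOWTask is illustrative.
FIELDS_BY_CONFIG = {
    "WhichWordWrongCatTask": {
        "question": "question",
        "correctAnswer": "correctAnswer",
        "options": "additionalMetadata_all_options",
    },
    "LOWTask": {
        "question": "question",
        "correctAnswer": "correctAnswer",
    },
}


def generate_examples(config_name, lines):
    """Yield (key, example) pairs containing only the columns declared for `config_name`."""
    mapping = FIELDS_BY_CONFIG[config_name]
    for key, line in enumerate(lines):  # line index doubles as a unique example key
        if not line.strip():
            continue  # skip blank lines, e.g. a trailing newline at the end of the file
        data = json.loads(line)
        yield key, {out_col: data[src_col] for out_col, src_col in mapping.items()}
```

Yielding only the declared columns presumably also keeps each config's rows in line with the `Features` it declares.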

```python
# fragment of _generate_examples(); `key` and `data` come from the surrounding loop
elif self.config.name == "WhichWordWrongCatTask":
    yield key, {
        "question": data["question"],
        "correctAnswer": data["correctAnswer"],
        "options": data["additionalMetadata_all_options"],
        # "second_domain_answer": "" if split == "test" else data["second_domain_answer"],
    }
```

Ah, dataset viewer not available :( But apparently one can use manual configs and then it works: https://huggingface.co/docs/hub/datasets-manual-configuration

I can use https://huggingface.co/datasets/scene_parse_150/edit/main/README.md as an example here.

```yaml
dataset_info:
- config_name: scene_parsing
  features:
  - name: image
    dtype: image
  - name: annotation
    dtype: image
  - name: scene_category
    dtype:
      class_label:
        names:
          '0': airport_terminal
          '1': art_gallery
          '2': badlands
- config_name: instance_segmentation
  features:
  - name: image
    dtype: image
  - name: annotation
    dtype: image
…
```

This shows WISTask in the viewer, but not LOWTask (because `'str' object has no attribute 'items'`):

```yaml
configs:
- config_name: LOWTask
  data_files: "data/tt_nim/LOWTask.jsonl"
  features:
  - name: question
    dtype: string
  - name: correctAnswer
    dtype: string
  default: true
- config_name: WISTask
  data_files: "data/tt_nim/WISTask.jsonl"
```

And I can't download either with Python because:

```
Traceback (most recent call last):
  File "/home/sh/.local/lib/python3.8/site-packages/datasets/builder.py", line 1873, in _prepare_split_single
    writer.write_table(table)
  File "/home/sh/.local/lib/python3.8/site-packages/datasets/arrow_writer.py", line 568, in write_table
    pa_table = table_cast(pa_table, self._schema)
  File "/home/sh/.local/lib/python3.8/site-packages/datasets/table.py", line 2290, in table_cast
    return cast_table_to_schema(table, schema)
  File "/home/sh/.local/lib/python3.8/site-packages/datasets/table.py", line 2248, in cast_table_to_schema
    raise ValueError(f"Couldn't cast\n{table.schema}\nto\n{features}\nbecause column names don't match")
ValueError: Couldn't cast
question: string
correctAnswer: string
templateUuid: string
taskInstanceUuid: string
additionalMetadata_kind: string
additionalMetadata_template_n: int64
additionalMetadata_option_0: string
additionalMetadata_option_1: string
additionalMetadata_label: int64
additionalMetadata_t1_meta_pos: string
additionalMetadata_t1_meta_freq: int64
additionalMetadata_t1_meta_index: int64
additionalMetadata_t1_meta_freq_quantile: int64
additionalMetadata_t1_meta_len: int64
additionalMetadata_t1_meta_len_quantile: string
additionalMetadata_t1_meta_word_raw: string
additionalMetadata_t2_meta_pos: string
additionalMetadata_t2_meta_freq: int64
additionalMetadata_t2_meta_index: int64
additionalMetadata_t2_meta_freq_quantile: int64
additionalMetadata_t2_meta_len: int64
additionalMetadata_t2_meta_len_quantile: string
additionalMetadata_t2_meta_word_raw: string
additionalMetadata_reversed: bool
additionalMetadata_id: int64
system_prompts: list<item: string>
  child 0, item: string
to
{'question': Value(dtype='string', id=None), 'correctAnswer': Value(dtype='string', id=None), 'templateUuid': Value(dtype='string', id=None), 'taskInstanceUuid': Value(dtype='string', id=None), 'additionalMetadata_kind': Value(dtype='string', id=None), 'additionalMetadata_template_n': Value(dtype='int64', id=None), 'additionalMetadata_all_options': Sequence(feature=Value(dtype='string', id=None), length=-1, id=None), 'additionalMetadata_label': Value(dtype='int64', id=None), 'additionalMetadata_main_cat_words': Sequence(feature=Value(dtype='string', id=None), length=-1, id=None), 'additionalMetadata_other_word': Value(dtype='string', id=None), 'additionalMetadata_cat_name_main': Value(dtype='string', id=None), 'additionalMetadata_cat_name_other': Value(dtype='string', id=None), 'additionalMetadata_id': Value(dtype='int64', id=None), 'system_prompts': Sequence(feature=Value(dtype='string', id=None), length=-1, id=None)}
because column names don't match

The above exception was the direct cause of the following exception:

Traceback (most recent call last):
  File "test.py", line 18, in <module>
    ds = load_dataset(path, n)
  File "/home/sh/.local/lib/python3.8/site-packages/datasets/load.py", line 1797, in load_dataset
    builder_instance.download_and_prepare(
  File "/home/sh/.local/lib/python3.8/site-packages/datasets/builder.py", line 890, in download_and_prepare
    self._download_and_prepare(
  File "/home/sh/.local/lib/python3.8/site-packages/datasets/builder.py", line 985, in _download_and_prepare
    self._prepare_split(split_generator, **prepare_split_kwargs)
  File "/home/sh/.local/lib/python3.8/site-packages/datasets/builder.py", line 1746, in _prepare_split
    for job_id, done, content in self._prepare_split_single(
  File "/home/sh/.local/lib/python3.8/site-packages/datasets/builder.py", line 1891, in _prepare_split_single
    raise DatasetGenerationError("An error occurred while generating the dataset") from e
datasets.builder.DatasetGenerationError: An error occurred while generating the dataset
```

Oh goddammit. Relevant:

- pytorch - Load dataset with datasets library of huggingface - Stack Overflow

- python - Huggingface datasets ValueError - Stack Overflow: there the issue was that he loaded it locally

- machine learning - Huggingface Load_dataset() function throws “ValueError: Couldn’t cast” - Stack Overflow (also Ukrainian)
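The traceback prints both schemas in full, and diffing them by eye is painful. For example, the data has `additionalMetadata_option_0` while the expected features have `additionalMetadata_all_options`. Below is a small stdlib sketch that compares the keys actually present in a JSONL file with the declared feature names. `column_mismatch` is a hypothetical helper, not part of `datasets`.

```python
import json


def column_mismatch(jsonl_lines, declared_columns):
    """Compare keys found in JSONL rows with declared feature names.

    Returns (missing, unexpected): declared columns never seen in the data,
    and data columns that were never declared.
    """
    seen = set()
    for line in jsonl_lines:
        if line.strip():
            seen.update(json.loads(line))  # iterating a dict yields its keys
    declared = set(declared_columns)
    return sorted(declared - seen), sorted(seen - declared)
```

Usage would be something like `column_mismatch(open("data/tt_nim/LOWTask.jsonl"), feature_names)`, where `feature_names` is whatever the card or script declares.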

I give up.

Back to the script.

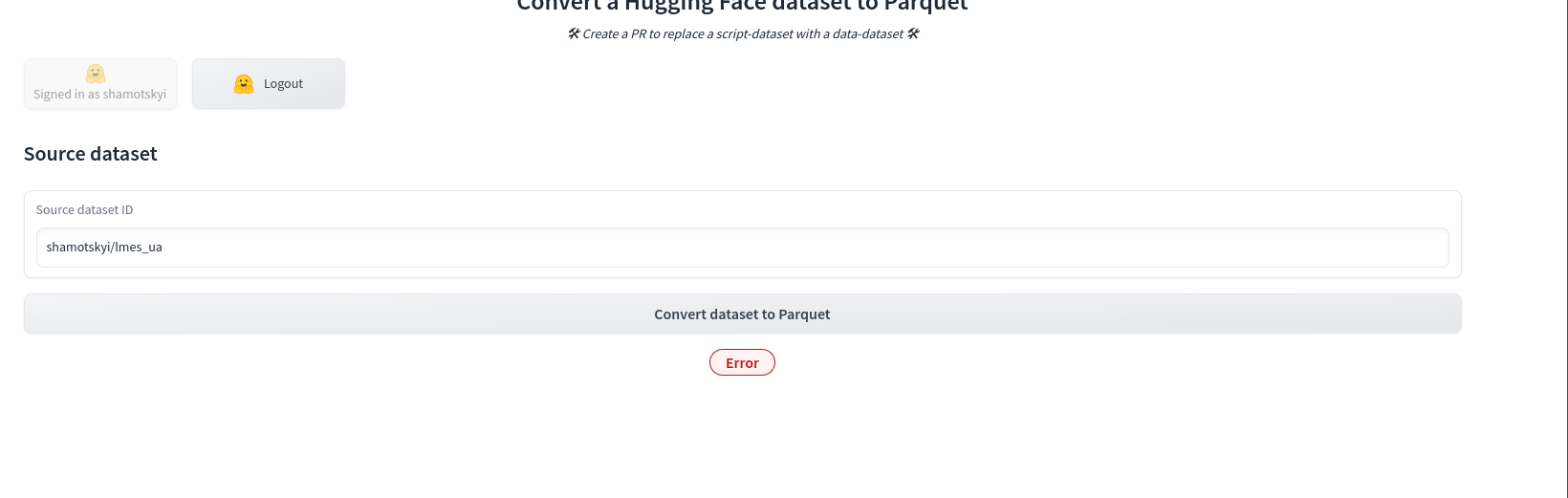

Last thing I’ll try (as suggested by tau/scrolls · Dataset Viewer issue: DatasetWithScriptNotSupportedError):

Convert Dataset To Parquet - a Hugging Face Space by albertvillanova

…

feels so unsatisfying not to see the datasets in the viewer :(

tau/scrolls · Dataset Viewer issue: DatasetWithScriptNotSupportedError this feels like something relevant to me. We’ll see.

python random sample vs random choices

Got bit by this.

random — Generate pseudo-random numbers — Python 3.12.2 documentation

- `sample()` (`random.sample()`) is WITHOUT replacement: no duplicates unless they are present in the list (`random.shuffle()`)

- `choices()` (`random.choices()`) is WITH replacement: duplicates MAY happen.

Also:

`random.shuffle()` works in place. To shuffle an immutable sequence (e.g. a tuple), sample all of it instead: `random.sample(x, k=len(x))` returns a new shuffled list.
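Here is a quick sanity check of these differences. It uses a seeded `random.Random` instance so the run is repeatable. The variable names are just for illustration.

```python
import random

rng = random.Random(42)  # seeded so runs are reproducible
population = list(range(10))

# sample(): WITHOUT replacement, each element is picked at most once
no_dupes = rng.sample(population, k=10)

# choices(): WITH replacement, the same element can be picked repeatedly
maybe_dupes = rng.choices(population, k=10)

# Consequence: only choices() can return more items than the population has
many = rng.choices(population, k=25)
try:
    rng.sample(population, k=25)
except ValueError as err:
    print("sample() refused:", err)

# shuffle() reorders the list itself and returns None
in_place = population[:]
returned = rng.shuffle(in_place)

# For an immutable sequence, sampling len(x) items gives a shuffled *new* list
frozen = tuple(population)
shuffled_copy = rng.sample(frozen, k=len(frozen))
```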

JSONL to JSON conversion with jq

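```shell
# JSONL -> JSON array (slurp all lines into one array)
jq -s '.' input.jsonl > output.json
# JSON array -> JSONL (one compact element per line)
jq -c '.[]' input.json > output.jsonl
```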
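For when `jq` isn't around, here are the same two conversions in plain Python. This is a sketch that works on strings and assumes one JSON value per line on the JSONL side. File handling is left out and the function names are made up. `separators=(",", ":")` mimics the compact output of `jq -c`.

```python
import json


def jsonl_to_json(text: str) -> str:
    """Rough equivalent of `jq -s '.'` for line-delimited input: one JSON array."""
    items = [json.loads(line) for line in text.splitlines() if line.strip()]
    return json.dumps(items, ensure_ascii=False, indent=2)


def json_to_jsonl(text: str) -> str:
    """Rough equivalent of `jq -c '.[]'`: one compact JSON value per line."""
    return "".join(
        json.dumps(item, ensure_ascii=False, separators=(",", ":")) + "\n"
        for item in json.loads(text)
    )
```

`ensure_ascii=False` keeps Cyrillic readable instead of `\u`-escaping it.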

-

Day 1872 (15 Feb 2024)

DBnary is a cool place I should look into further

Dbnary – Wiktionary as Linguistic Linked Open Data

It’s something something Wiktionary something, but more than that I think. “RDF multilingual lexical resource”.

Includes Ukrainian, though not in the dashboard pages: Dashboard – Dbnary.

Learned about it in the context of 240215-2136 LMentry improving words and sentences by frequency, linked by dmklinger/ukrainian: English to Ukrainian dictionary.

LMentry improving words and sentences by frequency

Word frequencies

- oprogramador/most-common-words-by-language: List of the most common words in many languages links to https://raw.githubusercontent.com/hermitdave/FrequencyWords/master/content/2016/uk/uk_50k.txt

- of-fucking-course:

я 116180 не 99881 в 53280 что 45257 ты 38282 на 37762 що 34824 и 34712 это 33254 так 31178


- most-common-words-multilingual/data/wordfrequency.info/uk.txt at main · frekwencja/most-common-words-multilingual similar to the above but much better, without ыs.

- ssharoff/robust: Robust frequency estimates for word lists has nice info about filtering wordlists etc to make them ‘robust’

- Includes a link to Ukrainian: http://corpus.leeds.ac.uk/frqc/robust/wikipedia-uk-robust.tsv

- … that I can’t get to connect


- Where can I find the list of Ukrainian words ordered by frequency of use? : r/Ukrainian

- MOVA.info

- I see no way to download their corpora: Корпус української мови MOVA.info


- Find some way to get one of the old/cool/known big ukr corpora

- Dictionary of the Ukrainian Language

- nice! But not directly usable. But very nice.

- Or?.. dmklinger/ukrainian: English to Ukrainian dictionary

- YES!

words.json

- One of its sources:

- Download – Dbnary

- HA, it’s a really awesome place worth looking at further!



Anyway - found the perfect dictionary. Wooho.
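Back to the frequency lists: the `uk_50k.txt` sample above is full of Russian words (что, ты, это). Below is a crude stdlib sketch that parses such lists and drops entries containing letters the Ukrainian alphabet lacks (ы, э, ъ, ё). It assumes one `word count` pair per line, as in the FrequencyWords files. The function names are hypothetical. The heuristic is leaky: it catches ты and это but not что, which is spelled entirely with letters Ukrainian also has.

```python
RUSSIAN_ONLY = set("ыэъё")  # in the Russian alphabet, absent from the Ukrainian one


def parse_frequency_list(text):
    """Parse FrequencyWords-style `word count` lines into (word, count) pairs."""
    rows = []
    for line in text.splitlines():
        parts = line.split()
        if len(parts) != 2:
            continue  # skip blank or malformed lines
        word, count = parts
        rows.append((word, int(count)))
    return rows


def drop_russian_only(rows):
    """Keep only words without Russian-only letters (a crude, leaky heuristic)."""
    return [(word, count) for word, count in rows if not RUSSIAN_ONLY & set(word.lower())]


sample = "я 116180\nне 99881\nв 53280\nщо 34824\nты 38282\nэто 33254\nчто 45257\n"
print([w for w, _ in drop_russian_only(parse_frequency_list(sample))])
# ['я', 'не', 'в', 'що', 'что']  (что slips through)
```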

Huggingface Hub full dataset card metadata

The HF Hub dataset UI lets you set only six metadata fields; the rest can be set through the YAML it generates. Here's the full list (hub-docs/datasetcard.md at main · huggingface/hub-docs):

```yaml
---
# Example metadata to be added to a dataset card.
# Full dataset card template at https://github.com/huggingface/huggingface_hub/blob/main/src/huggingface_hub/templates/datasetcard_template.md
language:
- {lang_0}  # Example: fr
- {lang_1}  # Example: en
license: {license}  # Example: apache-2.0 or any license from https://hf.co/docs/hub/repositories-licenses
license_name: {license_name}  # If license = other (license not in https://hf.co/docs/hub/repositories-licenses), specify an id for it here, like `my-license-1.0`.
license_link: {license_link}  # If license = other, specify "LICENSE" or "LICENSE.md" to link to a file of that name inside the repo, or a URL to a remote file.
license_details: {license_details}  # Legacy, textual description of a custom license.
tags:
- {tag_0}  # Example: audio
- {tag_1}  # Example: bio
- {tag_2}  # Example: natural-language-understanding
- {tag_3}  # Example: birds-classification
annotations_creators:
- {creator}  # Example: crowdsourced, found, expert-generated, machine-generated
language_creators:
- {creator}  # Example: crowdsourced, ...
language_details:
- {bcp47_lang_0}  # Example: fr-FR
- {bcp47_lang_1}  # Example: en-US
pretty_name: {pretty_name}  # Example: SQuAD
size_categories:
- {number_of_elements_in_dataset}  # Example: n<1K, 100K<n<1M, …
source_datasets:
- {source_dataset_0}  # Example: wikipedia
- {source_dataset_1}  # Example: laion/laion-2b
task_categories:  # Full list at https://github.com/huggingface/huggingface.js/blob/main/packages/tasks/src/pipelines.ts
- {task_0}  # Example: question-answering
- {task_1}  # Example: image-classification
task_ids:
- {subtask_0}  # Example: extractive-qa
- {subtask_1}  # Example: multi-class-image-classification
paperswithcode_id: {paperswithcode_id}  # Dataset id on PapersWithCode (from the URL). Example for SQuAD: squad
configs:  # Optional for datasets with multiple configurations like glue.
- {config_0}  # Example for glue: sst2
- {config_1}  # Example for glue: cola

# Optional. This part can be used to store the feature types and size of the dataset to be used in python. This can be automatically generated using the datasets-cli.
dataset_info:
  features:
    - name: {feature_name_0}    # Example: id
      dtype: {feature_dtype_0}  # Example: int32
    - name: {feature_name_1}    # Example: text
      dtype: {feature_dtype_1}  # Example: string
    - name: {feature_name_2}    # Example: image
      dtype: {feature_dtype_2}  # Example: image
    # Example for SQuAD:
    # - name: id
    #   dtype: string
    # - name: title
    #   dtype: string
    # - name: context
    #   dtype: string
    # - name: question
    #   dtype: string
    # - name: answers
    #   sequence:
    #     - name: text
    #       dtype: string
    #     - name: answer_start
    #       dtype: int32
  config_name: {config_name}  # Example for glue: sst2
  splits:
    - name: {split_name_0}                  # Example: train
      num_bytes: {split_num_bytes_0}        # Example for SQuAD: 79317110
      num_examples: {split_num_examples_0}  # Example for SQuAD: 87599
  download_size: {dataset_download_size}   # Example for SQuAD: 35142551
  dataset_size: {dataset_size}             # Example for SQuAD: 89789763

# It can also be a list of multiple configurations:
# ```yaml
# dataset_info:
#   - config_name: {config0}
#     features:
#       ...
#   - config_name: {config1}
#     features:
#       ...
# ```

# Optional. If you want your dataset to be protected behind a gate that users have to accept to access the dataset. More info at https://huggingface.co/docs/hub/datasets-gated
extra_gated_fields:
- {field_name_0}: {field_type_0}  # Example: Name: text
- {field_name_1}: {field_type_1}  # Example: Affiliation: text
- {field_name_2}: {field_type_2}  # Example: Email: text
- {field_name_3}: {field_type_3}  # Example for speech datasets: I agree to not attempt to determine the identity of speakers in this dataset: checkbox
extra_gated_prompt: {extra_gated_prompt}  # Example for speech datasets: By clicking on “Access repository” below, you also agree to not attempt to determine the identity of speakers in the dataset.

# Optional. Add this if you want to encode a train and evaluation info in a structured way for AutoTrain or Evaluation on the Hub
train-eval-index:
  - config: {config_name}  # The dataset config name to use. Example for datasets without configs: default. Example for glue: sst2
    task: {task_name}  # The task category name (same as task_category). Example: question-answering
    task_id: {task_type}  # The AutoTrain task id. Example: extractive_question_answering
    splits:
      train_split: train  # The split to use for training. Example: train
      eval_split: validation  # The split to use for evaluation. Example: test
    col_mapping:  # The columns mapping needed to configure the task_id.
      # Example for extractive_question_answering:
      # question: question
      # context: context
      # answers:
      #   text: text
      #   answer_start: answer_start
    metrics:
      - type: {metric_type}  # The metric id. Example: wer. Use metric id from https://hf.co/metrics
        name: {metric_name}  # The metric name to be displayed. Example: Test WER
---
```

Valid license identifiers can be found in [our docs](https://huggingface.co/docs/hub/repositories-licenses).

For the full dataset card template, see: [datasetcard_template.md file](https://github.com/huggingface/huggingface_hub/blob/main/src/huggingface_hub/templates/datasetcard_template.md).

Found this in their docs: Sharing your dataset — datasets 1.8.0 documentation

Full MD template: huggingface_hub/src/huggingface_hub/templates/datasetcard_template.md at main · huggingface/huggingface_hub

EDIT: oh nice, “import dataset card template” is an option in the GUI and it works!
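`configs` is one of the fields of this YAML header, and writing one entry per task file by hand gets tedious. Below is a stdlib-only sketch that renders the simple `config_name` / `data_files` / `default` form described in the manual configuration docs. The helper name is hypothetical. The `<data_dir>/<Task>.jsonl` layout is an assumption mirroring the LOWTask/WISTask attempt in the 240218 notes. Features are deliberately left out.

```python
def configs_yaml(task_names, data_dir, default=None):
    """Render a `configs:` YAML block with one config per `<data_dir>/<task>.jsonl` file."""
    lines = ["configs:"]
    for name in task_names:
        lines.append(f"- config_name: {name}")
        lines.append(f'  data_files: "{data_dir}/{name}.jsonl"')
        if name == default:
            lines.append("  default: true")
    return "\n".join(lines) + "\n"


print(configs_yaml(["LOWTask", "WISTask"], "data/tt_nim", default="LOWTask"))
```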


-

Day 1867 (10 Feb 2024)

CBT Task filtering instructions (Masterarbeit)

(Context: 240202-1312 Human baselines creation for Masterarbeit / 231024-1704 Master thesis task CBT / 240202-1806 CBT Story proofreading for Masterarbeit)

Goal: sort the test tasks based on the stories into good and bad ones. Bad stories are the ones where the answer options have problems.

Context: I automatically generate stories, and then test tasks based on these stories, to check how well AI can understand the gist of the stories (and by extension, the language). For this, we need to check whether the generated stories and their tests can be solved at all (and this has to be done by humans). We need to collect 1000 correct test tasks.

Task: NOT to pick the correct answer (it's more or less known), but to decide whether the task is OK or not.

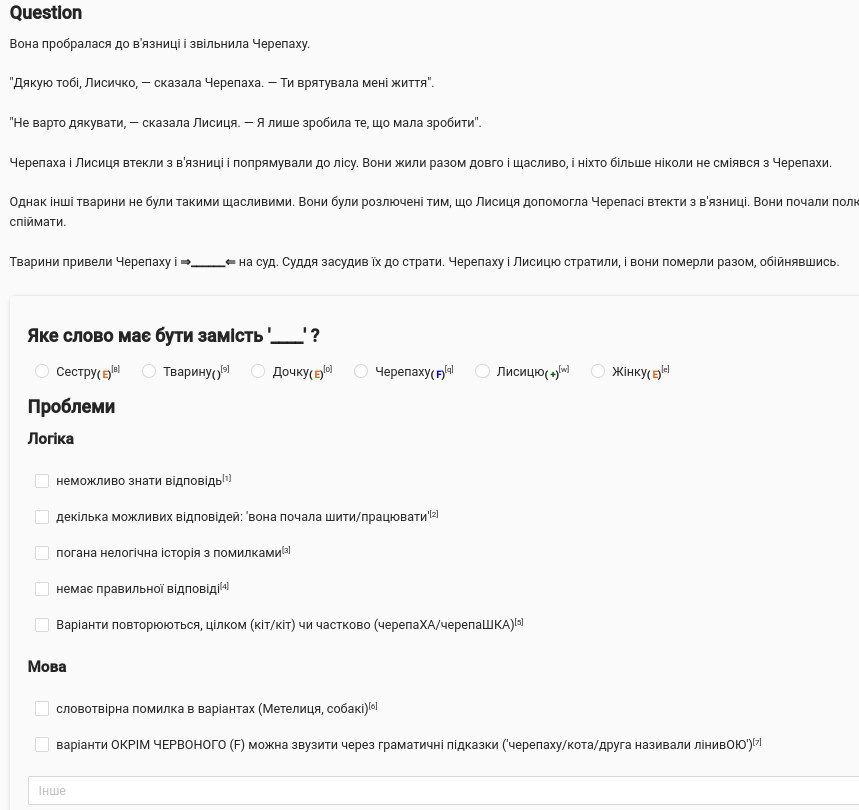

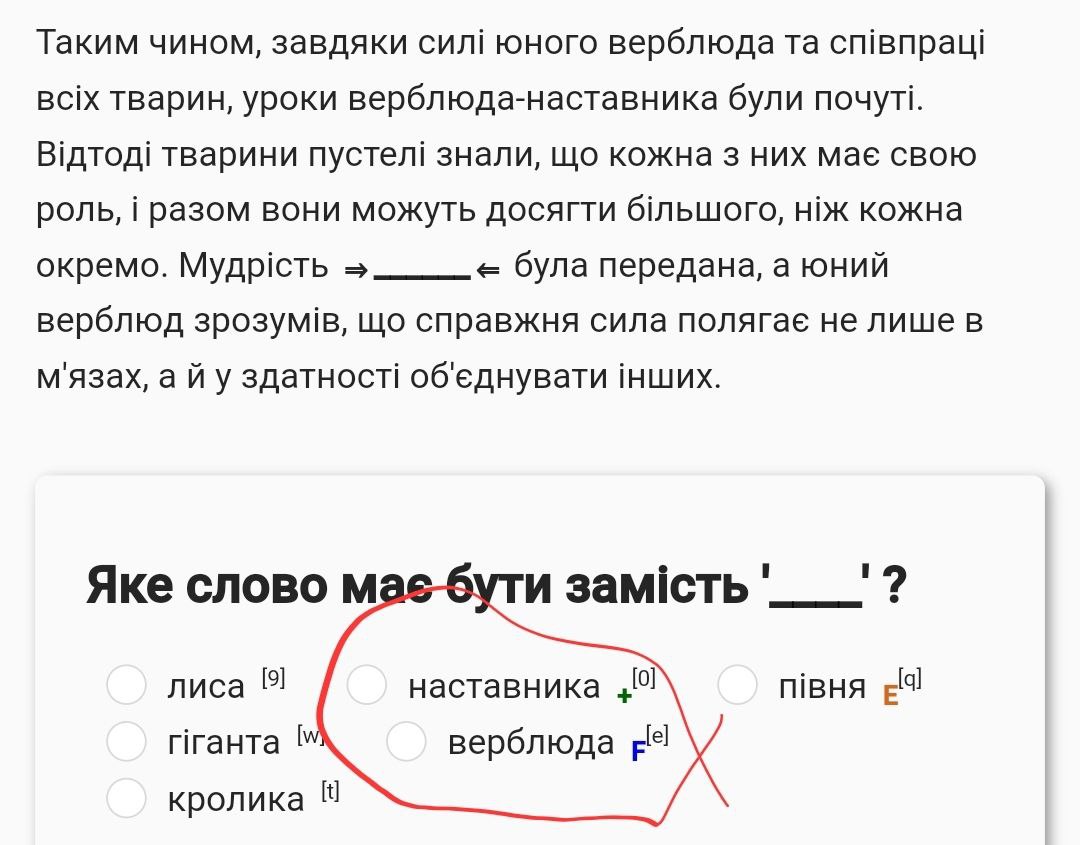

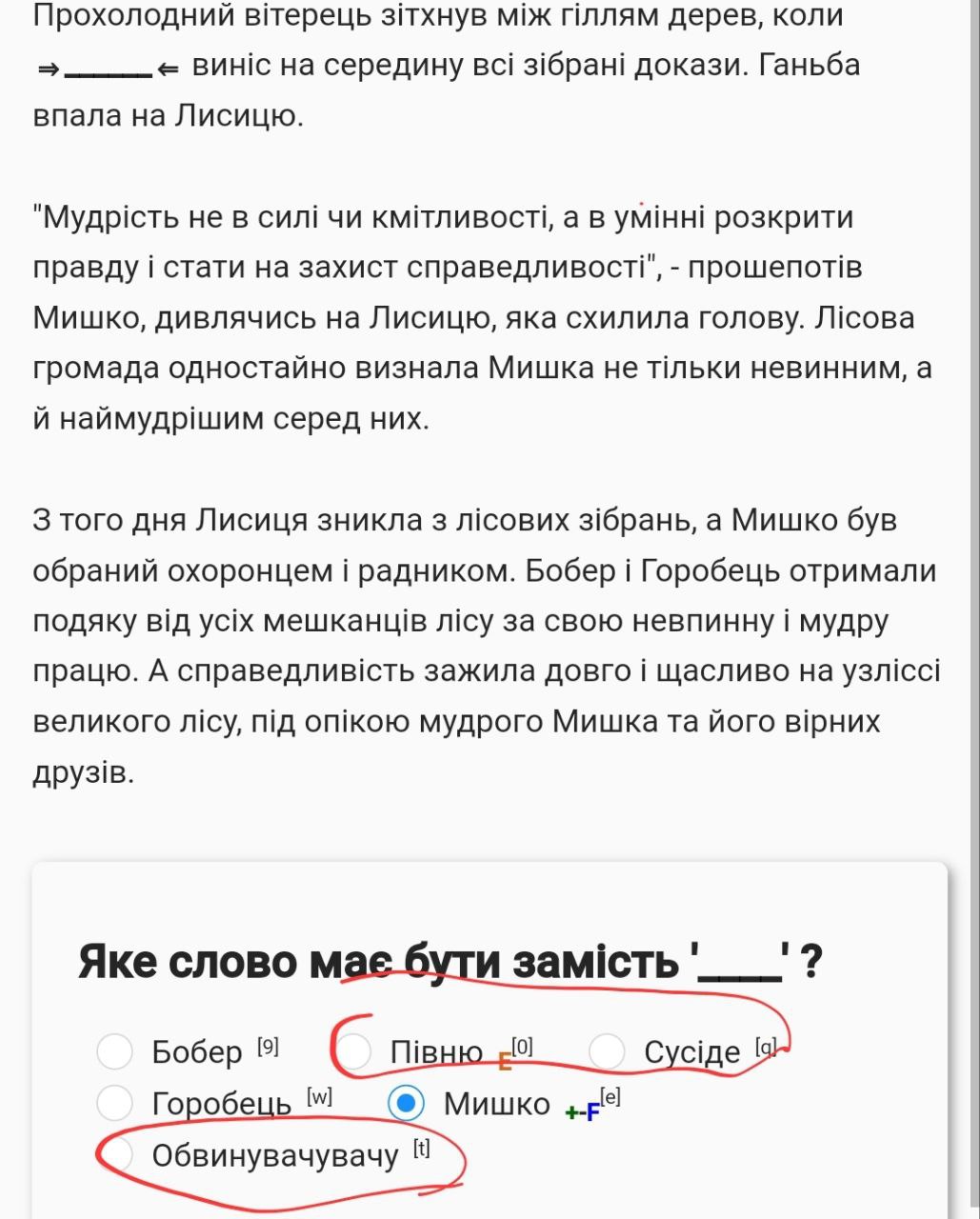

A typical task:

The gist in brief

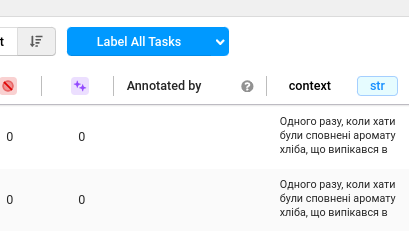

Interface

- Click on Label All Tasks:

- Handy keyboard shortcuts:

- Ctrl+Enter: “save and next”

- Ctrl+Space: “skip”

- For the options: their key, shown in square brackets

Stories

In the list of stories, click on Label All Tasks and you'll see a story in two parts:

- context: the first 60% of the story. Often you don't need to read it at all; the answer will be clear from the second part

- question: the last 40% of the story, in which one word is replaced with “_____”.

Below that are the answer options and the problems.

There are six answer options. These are different words that could fill the blank. There are three possible types of blanks:

- main characters (Goat, Turtle, seamstress)

- nouns (food, clothes)

- verbs (went, decided)

The options must agree grammatically with the text. In agreement:

- синій плащ (“blue cloak”), черепаха сміялась (“the turtle laughed”)

Not in agreement:

- весела кіт (feminine “cheerful” with masculine “cat”), орел полетіла (masculine “eagle” with feminine “flew”)

Problems

The vast majority of tasks are OK, but not all.

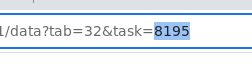

If you have questions, post a screenshot and the task number in the chat.

The task number is in the URI:

Problems in a task can be logical or linguistic.

Logical problems

- the answer is impossible to know

- the text before and after doesn't give enough information to choose the correct option

- we simply don't know whose home they went to, the cat's or the turtle's, and can't find out. But these are different creatures

- several answers are correct

-

The Lion told the Turtle that he needed a jacket. The Turtle set to work/sewing.

-

- Several options fit the same concept. He started sewing+working; he is the Cat+the suspect.

- Exception:

- animal/beast. If the options include animals and the word “animal”/“beast” (and all cats are animals), the criterion is whether that word could naturally be used there. If a cat and a hedgehog go off traveling, writing “the cat and the beast” afterwards is weird. So it's a problem only if these words can be used in that sentence and it reads fine.

-

Unknown: that's when we simply don't know whose home they went to, the cat's or the turtle's, to continue sewing, and can't find out. But these are different creatures.

- there is no correct answer

-

The Tiger bit the Dog. The Goat/synchrophasotron screamed in pain: “Tiger, why did you bite me?”

-

- options repeat

- either the same option appears twice, or two options are very similar (кіт/котик, “cat”/“kitty”) and mean the same thing

- Exception: if there are two different characters, say a cat and his son the kitty, then it's OK.

- perfective/imperfective verb forms don't count as duplicates (вона летіла/полетіла до свого вулика, “she was flying/flew to her hive”), but they MAY count as “several correct answers” (if the context allows both options)


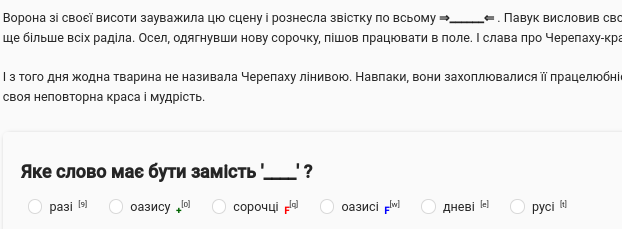

Linguistic problems

- non-existent words in the options

-

Метелиця, собакі, …

-

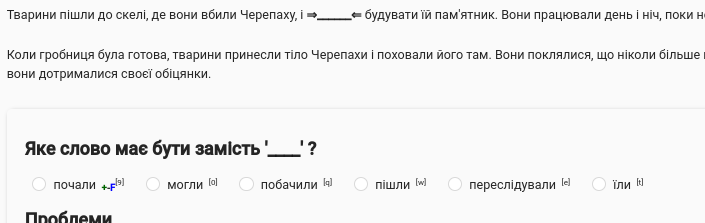

- the grammar of the options gives hints, …

- … EXCEPT for verbs

- … EXCEPT for an option marked F

- For example, here there are hints, so this task is incorrect:

-

черепаху/кота/метелика називали лінивОЮ (“the turtle/cat/butterfly was called lazy”: the feminine ending -ОЮ only fits черепаху)

-

лисиця взяла свій кожух/сумку/їжу… (“the fox took its coat/bag/food…”: masculine свій only fits кожух)

-

-

- And these options are OK, because they are exceptions

- Here “сорочці” (“shirt”) doesn't fit because “всьому” implies masculine gender, BUT the option has the letter F under it, so it's fine

- Here one could say that “переслідували” (“were chasing”) obviously can't come before “будувати” (“to build”) and discard that option without even knowing the story, BUT these are verbs, so it's OK


- EXCEPTION: euphony rules (unfortunately) are not counted as grammatical problems.

- “з твариною/звіром” (“with the animal/beast”): “з звіром” is not grammatically OK, but we ignore this

Other problems

- Some stories have grammatical errors. Don't look for them specifically, but if you notice one, post it in the chat with the number of the task where you found it

- flag it only once; you don't need to mark it in all the other tasks for that story

- Something else: there's a field at the bottom for that

Write any thoughts or notes in the field at the bottom.
