serhii.net

In the middle of the desert you can say anything you want

-

Day 381 (16 Jan 2020)


Semantic highlighting

This is actually a really nice idea, and as usual someone on the internet has thought about it more than I have: Making Semantic Highlighting Useful - Brian Will - Medium

I somehow really like the idea of color giving me actual semantic information about the thing I’m reading, and there are a lot of potentially cool things that could be done with it, such as showing datatypes. It’s closely connected to my old idea of a writing system that uses color to mark different letters better and more concisely, like the apparently defunct Dotsies, but even more interesting.

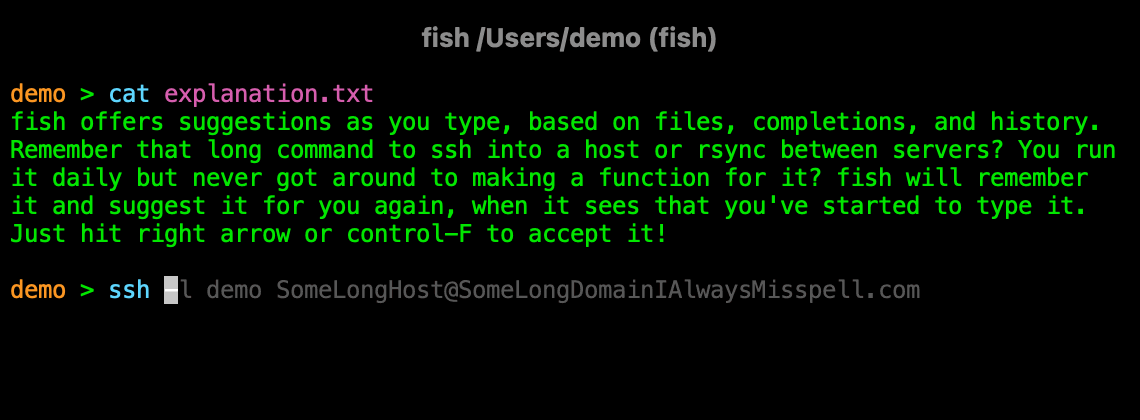
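A minimal sketch of the per-identifier coloring idea: every name gets a color derived from a hash of the name itself, so the same variable looks the same everywhere in the file. Hashing into a fixed ANSI 256-color palette is my own assumption for illustration, not necessarily what the Medium article proposes. `PALETTE`, `color_for` and `highlight` are hypothetical names.

```python
import hashlib
import io
import keyword
import tokenize

# Hypothetical palette of ANSI 256-color codes; any set of distinct colors would do.
PALETTE = [33, 37, 70, 130, 135, 161, 166, 172]


def color_for(name: str) -> int:
    """Pick a palette color from a hash of the identifier, so a name always gets the same color."""
    digest = hashlib.sha256(name.encode("utf-8")).digest()
    return PALETTE[digest[0] % len(PALETTE)]


def highlight(source: str) -> str:
    """Wrap every non-keyword identifier in Python source in its own ANSI color."""
    lines = source.splitlines(keepends=True)
    names = [
        tok
        for tok in tokenize.generate_tokens(io.StringIO(source).readline)
        if tok.type == tokenize.NAME and not keyword.iskeyword(tok.string)
    ]
    # Edit right-to-left so the column offsets of earlier tokens stay valid.
    for tok in reversed(names):
        row, col = tok.start
        line = lines[row - 1]
        end = col + len(tok.string)
        colored = f"\x1b[38;5;{color_for(tok.string)}m{tok.string}\x1b[0m"
        lines[row - 1] = line[:col] + colored + line[end:]
    return "".join(lines)


print(highlight("total = price * qty\nprint(total)\n"))
```

In a terminal with 256-color support, both occurrences of `total` come out in the same color, while keywords like `if` are left alone.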

Zsh autosuggestions (fish-like)

This is interesting: zsh-users/zsh-autosuggestions: Fish-like autosuggestions for zsh

Less noisy autocomplete than the default, should look similar to this:

As a side note, I like the `cat explanation.txt` part for screenshots.

-

Day 380 (15 Jan 2020)


Adding numbers in Bash

integer arithmetic - How can I add numbers in a bash script - Stack Overflow

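`num=$((num1 + num2))` or `num=$(($num1 + $num2))`, which is what I used in the updated `create.sh` script:

`FILE=_posts/$(date +%Y-%m-%d)-day$((365+10#$(date +%j))).markdown`

The `10#` forces base 10: `date +%j` prints a zero-padded day of the year like `016`, which bash arithmetic would otherwise read as octal (and `008` or `009` would be an outright error).

Tensorflow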

- TODO: why can’t `tf.convert_to_tensor()` convert stuff to other types (int64->float32), so that I have to use `tf.cast()` afterwards?

- `tf.in_train_phase()`: both x and y have to be the same shape.

- In a custom layer, `compute_mask()` can return a single `None` even if the layer has multiple outputs!
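A small sketch of the TODO, assuming TensorFlow 2.x in eager mode: as far as I can tell, `tf.convert_to_tensor()` turns values into tensors but refuses to change the dtype of something that is already a tensor, while `tf.cast()` is the op that actually converts. `try_convert` is a hypothetical helper just for the demo.

```python
import tensorflow as tf


def try_convert(value, dtype):
    """Return tf.convert_to_tensor(value, dtype), or None if TensorFlow refuses the conversion."""
    try:
        return tf.convert_to_tensor(value, dtype=dtype)
    except (TypeError, ValueError) as err:
        print(f"convert_to_tensor refused: {type(err).__name__}")
        return None


ints = tf.constant([1, 2, 3], dtype=tf.int64)

# Requesting a different dtype for an existing int64 tensor raises instead of casting.
converted = try_convert(ints, tf.float32)

# tf.cast is the op that performs the int64 -> float32 conversion.
floats = tf.cast(ints, tf.float32)
print(floats.dtype, floats.numpy())
```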

German

Erfahrungsmäßig: from experience, as experience shows.


-

Day 378 (13 Jan 2020)


German random

The Ctrl key in Germany is “Strg”, pronounced “Steuerung” (“control”).

English random

refuse - Dictionary Definition : Vocabulary.com

“Refuse” as a verb is re-FYOOZ; as a noun it’s REF-yoss.

-

Day 363 (29 Dec 2019)


German

- Schmierpapier: scratch paper

- verwursten: to make into wurst.

Random

-

Day 354 (20 Dec 2019)


Tensorflow eager execution

Makes everything slower by about 2-4 times.
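A rough way to check this slowdown on one's own code, assuming TensorFlow 2.x: time the same Python function run eagerly and wrapped in `tf.function`, which traces it into a graph. The function `step` is a made-up stand-in. The ratio depends heavily on the ops, tensor sizes and hardware, so this does not by itself reproduce the 2-4 times figure.

```python
import timeit

import tensorflow as tf


def step(x):
    # A chain of small ops, where per-op Python overhead matters most in eager mode.
    for _ in range(10):
        x = tf.tanh(x) * 0.5 + 0.1
    return x


graph_step = tf.function(step)  # traced once, then executed as a graph

x = tf.random.uniform((100, 100))
graph_step(x)  # warm-up call so tracing time is not included in the measurement

eager_time = timeit.timeit(lambda: step(x), number=200)
graph_time = timeit.timeit(lambda: graph_step(x), number=200)
print(f"eager: {eager_time:.3f}s  tf.function: {graph_time:.3f}s  ratio: {eager_time / graph_time:.1f}x")
```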

-

Day 350 (16 Dec 2019)


Tensorflow object has no attribute _keras_history

`AttributeError: 'tensorflow.python.framework.ops.EagerTensor' object has no attribute '_keras_history'` disappears if we don’t use eager execution inside the metric; using it inside the model is fine. That is, `tf.config.experimental_run_functions_eagerly(False)` inside metrics.py solves this, but `model.run_eagerly=True` is fine.

Related: https://github.com/tensorflow/addons/pull/377, about output_masks and it being blocked.

tf.keras vs tf.python.keras

tensorflow - What is the difference between tf.keras and tf.python.keras? - Stack Overflow

-

Day 344 (10 Dec 2019)


Python shell get last value

`_` does the magic. It can be used in expressions too.
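What happens behind `_`: in the interactive interpreter, the value of each expression statement is handed to `sys.displayhook`, which prints its repr and stores the value in `builtins._`. Calling the hook by hand in a script shows the same mechanism that the REPL runs automatically.

```python
import builtins
import sys

# What the REPL does after evaluating `6 * 7` at the >>> prompt:
sys.displayhook(6 * 7)    # prints 42 and stores it in builtins._

# So `_` now refers to the last displayed value and works inside expressions.
print(builtins._ + 1)     # 43
```

At the prompt itself this is just `>>> 6 * 7` followed by `>>> _ + 1`. Assigning to `_` yourself shadows it until you `del _`.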

-

Day 343 (09 Dec 2019)


Python unittest

- When creating a TestCase, all vars set up in `setUp` should be attributes of the test case (`self.xxx`).

- The test methods run in alphabetical order, but it’s not something I should depend on.
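A small sketch of both points with the standard `unittest` module: fixtures created in `setUp` live on `self` and are rebuilt before every test, and the default `TestLoader` sorts test method names, which is where the alphabetical order comes from. The class and test names are made up.

```python
import unittest


class ListTest(unittest.TestCase):
    def setUp(self):
        # Runs before *each* test; store fixtures on self so the test methods can see them.
        self.items = [3, 1, 2]

    def test_b_sort(self):
        self.items.sort()  # mutates only this test's copy
        self.assertEqual(self.items, [1, 2, 3])

    def test_a_untouched(self):
        # Gets a fresh list from setUp, whatever order the tests happen to run in.
        self.assertEqual(self.items, [3, 1, 2])


loader = unittest.TestLoader()
# The default loader sorts method names alphabetically.
print(loader.getTestCaseNames(ListTest))  # ['test_a_untouched', 'test_b_sort']
result = unittest.TextTestRunner(verbosity=0).run(loader.loadTestsFromTestCase(ListTest))
```

Because each test gets its own fixture from `setUp`, the tests still pass if the order changes, which is the safer thing to rely on.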

Stack / ideas

Some kind of ML language switcher that trains on my input: I write something in L1, delete it, then type the same keystrokes in L2 => training instance. Maybe also based on window class and time?
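A hypothetical sketch of the data-collection half of this idea. Every name here (`Burst`, `Example`, `training_examples`) is made up, and keystrokes are simplified to a string of physical keys. A run of typing that is deleted and then immediately retyped with the same keys under another layout becomes one labeled example, with window class and hour kept as features. The model itself is left out.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Burst:
    """One run of typing, as a hypothetical keyboard logger might record it."""
    keys: str          # physical keys pressed, independent of layout
    layout: str        # layout active while typing, e.g. "L1" or "L2"
    deleted: bool      # True if the run was erased right after typing
    window_class: str  # class of the focused window
    hour: int          # local hour of day


@dataclass
class Example:
    keys: str
    window_class: str
    hour: int
    label: str  # the layout the user actually wanted


def training_examples(bursts: List[Burst]) -> List[Example]:
    """Label 'typed in L1, deleted, retyped the same keys in L2' as a vote for L2."""
    examples = []
    for prev, cur in zip(bursts, bursts[1:]):
        retyped = prev.deleted and not cur.deleted and prev.keys == cur.keys
        if retyped and prev.layout != cur.layout:
            examples.append(Example(cur.keys, cur.window_class, cur.hour, label=cur.layout))
    return examples


log = [
    Burst("hallo", "L1", True, "Firefox", 21),
    Burst("hallo", "L2", False, "Firefox", 21),
    Burst("notes", "L1", False, "Terminal", 9),
]
print(training_examples(log))
```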

Tensorflow ‘could not find valid device for node’

“Could not find valid device for node” while executing eagerly means a wrong input type.
