serhii.net

In the middle of the desert you can say anything you want

-

Day 1595 (15 May 2023)

Pandas joining and merging tables

I was trying to do a join based on two columns, one of which is a pd `Timestamp`.

What I learned: if you’re trying to join/merge two DataFrames not by their indexes, `pandas.DataFrame.merge` is better (yay precise language) than `pandas.DataFrame.join`.

Or, for some reason I had issues with `df.join(.. on=[col1,col2])`, even with `df.set_index([col1,col2]).join(df2.set_index...)`; then it went out of memory and I gave up.

Then a SO answer1 said

> use merge if you are not joining on the index

I tried it and `df.merge(..., on=col2)` magically worked!
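A minimal sketch of the merge described above, joining on two ordinary columns where one is a Timestamp. The frames and column names (`item_id`, `ts`, `price`) are made up for illustration and are not from the original data.

```python
import pandas as pd

# Hypothetical example data; column names are assumptions for illustration
left = pd.DataFrame({
    "item_id": [1, 1, 2],
    "ts": pd.to_datetime(["2023-05-01", "2023-05-02", "2023-05-01"]),
    "items_available": [3, 0, 5],
})
right = pd.DataFrame({
    "item_id": [1, 2],
    "ts": pd.to_datetime(["2023-05-01", "2023-05-01"]),
    "price": [9.99, 4.50],
})

# merge() matches on regular columns, so no set_index() is needed
merged = left.merge(right, on=["item_id", "ts"], how="left")
print(merged)
```

With `how="left"` every row of `left` is kept, and rows without a match in `right` get NaN in the new columns.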

Pandas categorical types weirdness

Spent hours trying to understand what’s happening.

TL;DR: groupbys on a categorical column show ALL categories, even if there are no instances of a specific category in the actual data.

    # Shows all categories including OTHER
    df_item[df_item['item.item_category']!="OTHER"].groupby(['item.item_category']).sum()

    df_item['item.item_category'] = df_item['item.item_category'].astype(str)

    # Shows three categories
    df_item[df_item['item.item_category']!="OTHER"].groupby(['item.item_category']).sum()

Rel. thread: groupby with categorical type returns all combinations · Issue #17594 · pandas-dev/pandas
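Besides casting to `str`, the `observed` parameter of `groupby` seems to control exactly this behaviour. A small sketch on toy data (the frame below is an assumption, not the original `df_item`); `observed` is passed explicitly both times because, as far as I know, its default differs between pandas versions.

```python
import pandas as pd

# Toy data: "C" is a declared category that never occurs
df = pd.DataFrame({
    "category": pd.Categorical(
        ["A", "B", "A", "OTHER"], categories=["A", "B", "C", "OTHER"]
    ),
    "n": [1, 2, 3, 4],
})
filtered = df[df["category"] != "OTHER"]

# observed=False: every declared category gets a row, even unused ones
all_cats = filtered.groupby("category", observed=False)["n"].sum()

# observed=True: only categories that actually occur in the filtered data
seen_cats = filtered.groupby("category", observed=True)["n"].sum()

print(all_cats)
print(seen_cats)
```

Another option that keeps the categorical dtype is dropping unused categories with `.cat.remove_unused_categories()` after filtering.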

Pandas seaborn plotting groupby can be used without reset_index

Both things below work! Seaborn is smart and parses pd groupby-s as-is

    sns.histplot(data=gbc, x='items_available', hue="item.item_category", )
    sns.histplot(data=gbc.reset_index(), x='items_available', hue="item.item_category", )

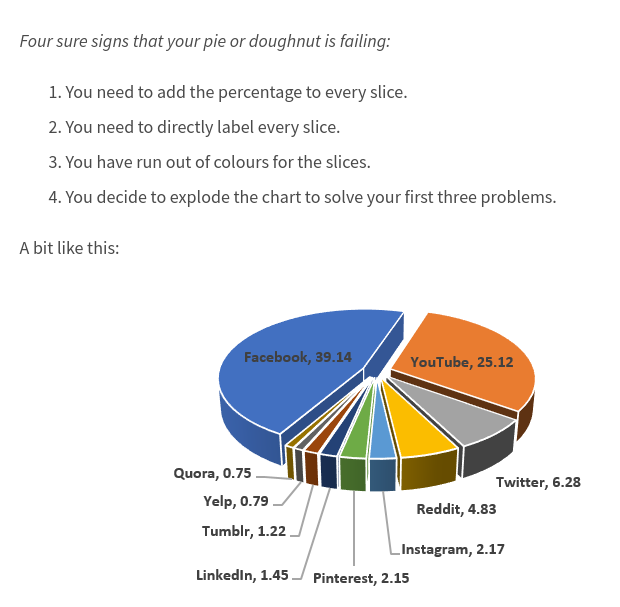

Pie Charts Considered Harmful

Note that seaborn doesn’t create pie charts, as seaborn’s author considers those to be unfit for statistical visualization. See e.g. Why you shouldn’t use pie charts – Johan 1

Why you shouldn’t use pie charts:

Pies and doughnuts fail because:

- Quantity is represented by slices; humans aren’t particularly good at estimating quantity from angles, which is the skill needed.

- Matching the labels and the slices can be hard work.

- Small percentages (which might be important) are tricky to show.

The world is interesting.

Pandas set column value based on (incl. groupby) filter

TL;DR

    df.loc[row_indexer, col_indexer] = value

`col_indexer` can be a non-existing-yet column! And `row_indexer` can be anything, including based on a `groupby` filter.

Below, the groupby filter has `dropna=False`, which would also return the rows that don’t match the filter, giving a Series with the same indexes as the main df.

    # E.g. this groupby filter - NB. dropna=False
    df_item.groupby(['item.item_id']).filter(lambda x:x.items_available.max()>0, dropna=False)['item.item_id']

    # Then we use that in the condition, nice arbitrary example with `item.item_id` not being the index of the DF
    df_item.loc[df_item['item.item_id']==df_item.groupby(['item.item_id']).filter(lambda x:x.items_available.max()>0, dropna=False)['item.item_id'],'item_active'] = True

I’m not sure whether this is the “best” way to incorporate groupby results, but seems to work OK for now.

Esp. the remaining rows have `nan` instead of False, can be worked around but is ugly:

    df_item['item_active'] = df_item['item_active'].notna()

    # For plotting purposes
    sns.histplot(data=df_item.notna(), ... )

Pandas Filter by Column Value - Spark By {Examples} has more examples of conditions:

    # From https://sparkbyexamples.com/pandas/pandas-filter-by-column-value/
    df.loc[df['Courses'] == value]
    df.loc[df['Courses'] != 'Spark']
    df.loc[df['Courses'].isin(values)]
    df.loc[~df['Courses'].isin(values)]
    df.loc[(df['Discount'] >= 1000) & (df['Discount'] <= 2000)]
    df.loc[(df['Discount'] >= 1200) & (df['Fee'] >= 23000 )]
    df[df["Courses"] == 'Spark']
    df[df['Courses'].str.contains("Spark")]
    df[df['Courses'].str.lower().str.contains("spark")]
    df[df['Courses'].str.startswith("P")]
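For the groupby-based `item_active` flag above, a possible alternative is `groupby(...).transform`, which returns one value per original row and so gives a plain True/False column without the `nan` workaround. This is only a sketch on toy data; the values below are made up, only the column names follow the post.

```python
import pandas as pd

# Toy stand-in for df_item; the values are assumptions for illustration
df_item = pd.DataFrame({
    "item.item_id": ["a", "a", "b", "b", "c"],
    "items_available": [0, 2, 0, 0, 1],
})

# transform("max") broadcasts each group's maximum back onto every row,
# so the result is aligned with df_item's index
group_max = df_item.groupby("item.item_id")["items_available"].transform("max")

# Boolean column: True where the item was available at least once
df_item["item_active"] = group_max > 0
print(df_item)
```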

-

Day 1591 (11 May 2023)

jupyter pandas interactive tables

mwouts/itables: Pandas DataFrames as Interactive DataTables:

    from itables import init_notebook_mode
    init_notebook_mode(all_interactive=True)

It kept “loading”. I set the notebook to ’trusted’ as per help, didn’t help.

But this did:

    init_notebook_mode(all_interactive=True, connected=True)

(`connected=True` makes it load libraries dynamically from the internet, and is not the default)

Allows more interesting interactive dynamical tables. Incl. things like sorting by column etc.

Pandas add columns to dataframe based on dictionary inside a column

Courtesy of ChatGPT, after googling for 15 minutes didn’t bring anything immediately copy-pasteable.

I’ll deal with the existential crisis from this later.

To explode the cells containing dictionaries in a pandas DataFrame and append them as additional columns, you can use the apply() method along with the pd.Series() constructor. Here’s an example:

    import pandas as pd

    # create example DataFrame
    df = pd.DataFrame({
        'id': [1, 2],
        'info': [
            {'name': 'Alice', 'age': 25},
            {'name': 'Bob', 'age': 30, 'city': 'New York'}
        ]
    })

    # use apply() with pd.Series() to explode the dictionaries and append them as columns
    df = pd.concat([df.drop('info', axis=1), df['info'].apply(pd.Series)], axis=1)

    print(df)

Output:

       id   name  age      city
    0   1  Alice   25       NaN
    1   2    Bob   30  New York

In this example, we first create a DataFrame with two rows, where the info column contains dictionaries. We then use the apply() method with pd.Series() to explode the dictionaries and create additional columns for each key in the dictionaries. Finally, we concatenate the exploded columns with the original DataFrame using pd.concat().
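A variation on the same idea, sketched under the assumption that every cell in the column is a flat dict: building the new columns directly from the list of dicts avoids calling `pd.Series` once per row, which tends to be slow on larger frames.

```python
import pandas as pd

df = pd.DataFrame({
    "id": [1, 2],
    "info": [
        {"name": "Alice", "age": 25},
        {"name": "Bob", "age": 30, "city": "New York"},
    ],
})

# Build all new columns at once; reuse the original index so concat aligns rows
info_cols = pd.DataFrame(df["info"].tolist(), index=df.index)

# Replace the dict column with the expanded columns
expanded = pd.concat([df.drop(columns="info"), info_cols], axis=1)
print(expanded)
```

Keys missing from a row's dict end up as NaN, same as in the `apply(pd.Series)` version.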

-

Day 1587 (07 May 2023)

Zotero basics and group syncing

- After playing with it for an hour or so: Zotero is freaking awesome

- Sync

- If you add an account (in Preferences), all groups you’re part of will be synced after you click the sync green thing top-left1

- even if you enabled “automatic sync” through Preferences


- Adding/importing

- Adding by DOI (“Add items by identifier”) is wonderful

- Works for DOI, ISBN, arxiv ID, and other IDs I never heard of

- Bibtex “Import from clipboard” is magic for the items that don’t have a DOI/ISBN/… (incl. direct arxiv links!)

- You can even import multiple papers that way! Neat for copying other .bib files

- You can add links to files online, and attach local copies of files

- There’s also “Find available PDF”! Wow!


- notes and highlights; PDF viewer

- If the item has a (file, not link) PDF, you can highlight inside it!

- text and rectangle, multiple colors

- they are synced

- can be seen and edited in the web version too!

- Item/file notes

- can contain citations (= links to other files)!

- that are clickable!

- can be created based on existing annotations (=highlights!)

- support templates2


- (AWESOME) tutorial: New PDF Reader Available in Zotero 6 | Lane Library Blog

- PDF reader shortcuts: kb:keyboard shortcuts [Zotero Documentation] (incomplete) and PDF Reader shortcuts (default and missing Zotero-specific ones mentioned in the first link)


- Taxonomy

- Items have

- tags (auto-completed; really nice search interface)

- related items/files

- symmetrical relationship, being related works both ways

- Nested collections work as expected (~ nested categories, parent shows all items belonging to all its descendants)

- Advanced search exists and is about as expected

- good support for ALL THE METADATA FIELDS

- no support for regex :(

- you can save useful searches


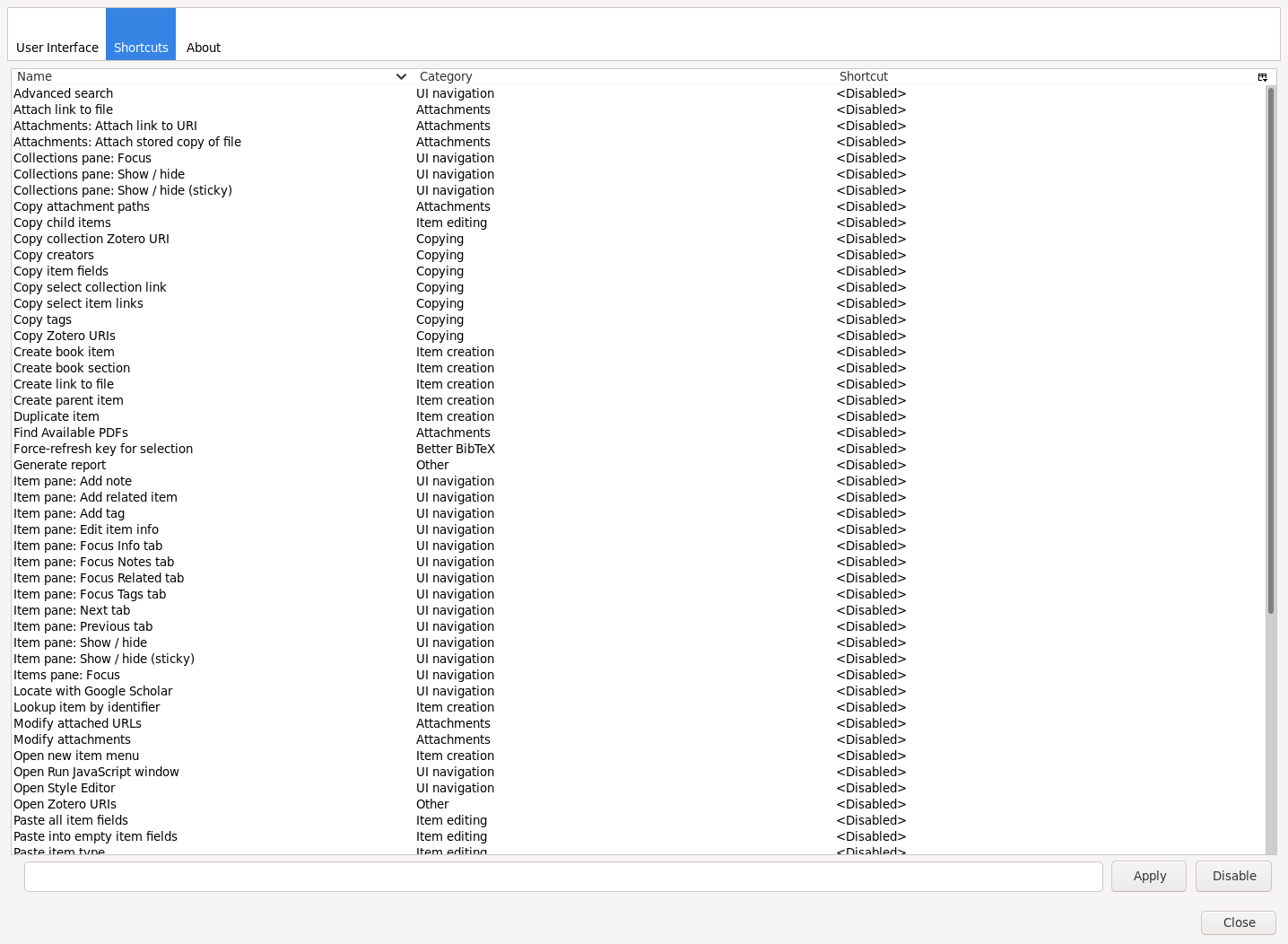

- Addons/Plugins

- Info and link to full list: Plug-ins and Integrations

- Zutilo3 is an addon for macros/shortcuts, found it when looking for a shortcut for “add items by identifier”

- “Lookup item by identifier”, now `<C-N>` for me.

- It’s awesome:

- “Better Bibtex”4 allows exporting as bibtex

- including automatic export (used in 230507-1620 Zotero and Obsidian)

- and is EXTREMELY configurable5

- Zotero Robust Links for archiving links with Web Archive and friends

- Integrations

- Overleaf Zotero integration is a Premium Overleaf feature

- Other features

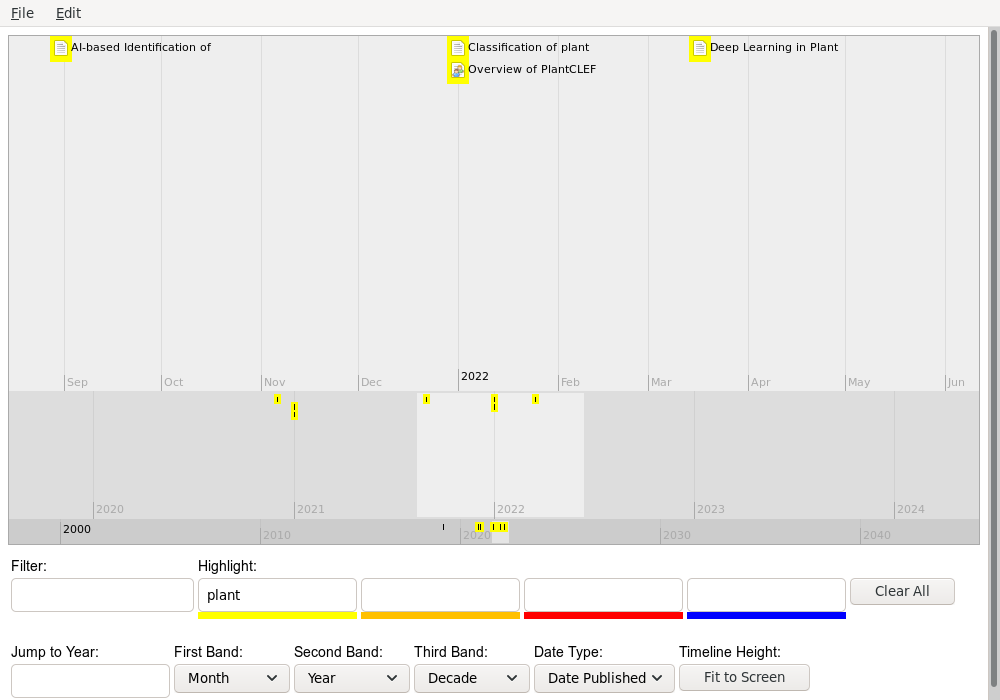

- “Timeline” is neat but not too useful right now:


- Misc

- (Almost) all keyboard shortcuts (for PDF see above): kb:keyboard shortcuts [Zotero Documentation]

- TODO

`zotero://` links don’t work for me, and the default .desktop file they provide seems broken - TODO later

Plants paper notes

Related: 230529-1413 Plants datasets taxonomy

Key info

PlantCLEF

- PlantCLEF 2021 and 2022 summary papers, no doi :(

- Latest datasets not available, previous ones use eol and therefore are a mix of stuff

- Tasks and datasets differ by year (=can’t reliably do baseline), and main ideas differ too:

- 2021: use Herbaria to supplement lacking real-life photos

- Best methods were the ones that used domain-specific adaptations as opposed to simple CNNs

- 2022: multi-image(/metadata) class. problem with A LOT of classes (80k)

- Classes mean a lot of gimmicks to handle this memory-wise


- Why this doesn’t work for us:

- datasets not available!

- the ones that are available are a mix of stuff

- A lot of methods that work there well are specific to the task, as opposed to the general thing

- People can use their own datasets for training

Metrics: MRR (=not comparable to some other literature, even if there were results on the same dataset)
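For reference, a minimal sketch of MRR (mean reciprocal rank) in its usual definition: the average over queries of 1/rank of the correct label, counting 0 when the label is missing from the ranking. Whether PlantCLEF averages per image or per observation is not covered here; the species names are placeholders.

```python
def mean_reciprocal_rank(ranked_predictions, true_labels):
    """Average of 1/rank of the correct label; contributes 0 if it is absent."""
    total = 0.0
    for ranking, truth in zip(ranked_predictions, true_labels):
        if truth in ranking:
            # list.index is 0-based, ranks are 1-based
            total += 1.0 / (ranking.index(truth) + 1)
    return total / len(true_labels)


# Hypothetical toy predictions, best guess first
rankings = [
    ["oak", "birch", "pine"],   # correct label at rank 1
    ["pine", "oak", "birch"],   # correct label at rank 2
    ["birch", "pine", "oak"],   # correct label not in the list
]
truths = ["oak", "oak", "fir"]

mrr = mean_reciprocal_rank(rankings, truths)  # (1 + 1/2 + 0) / 3
print(mrr)
```

Since MRR rewards near-misses, it is not directly comparable to top-1 accuracy numbers reported elsewhere.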

PlantNet300k3 paper

- Dataset is a representative subsample of the big PlantNet dataset that “covers over 35K species illustrated by nearly 12 million validated images”

- Subset has “306,146 plant images covering 1,081 species.

- Long-tailed distribution of classes:

- 80% of the species account for only 11% of the total number of images”

- Top1 accuracy is OK, but not meaningful

- Macro-average top-1 accuracy differs by A LOT

- The paper runs baselines with a lot of networks
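Why overall top-1 accuracy and macro-average top-1 accuracy can differ so much on a long-tailed dataset, as a toy sketch (labels and counts are invented, not taken from the paper): a model that only ever predicts the dominant species still scores well overall, while the per-class average collapses.

```python
from collections import defaultdict


def top1_accuracies(y_true, y_pred):
    """Return (overall accuracy, macro-average of per-class accuracies)."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred in zip(y_true, y_pred):
        total[truth] += 1
        correct[truth] += int(truth == pred)
    overall = sum(correct.values()) / len(y_true)
    # Each class counts equally, no matter how many images it has
    macro = sum(correct[c] / total[c] for c in total) / len(total)
    return overall, macro


# Invented long-tailed toy set: one common species, two rare ones
y_true = ["common"] * 8 + ["rare_a", "rare_b"]
# A model that always predicts the common species
y_pred = ["common"] * 10

overall, macro = top1_accuracies(y_true, y_pred)
print(overall, macro)
```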

Useful stuff

Citizen science

-

Citizen science (similar to [..] participatory/volunteer monitoring) is scientific research conducted with participation from the general public

most citizen science research publications being in the fields of biology and conservation

-

can mean multiple things, usually using citizens acting as volunteers to help monitor/classify/.. stuff (but also citizens initiating stuff; also: educating the public about scientific methods, e.g. schools)

-

allowed users to upload photos of a plant species and its components, enter its characteristics (such as color and size), compare it against a catalog photo and classify it. The classification results are juried by crowdsourced ratings.4

-

Papers

- Paper about using Pl@ntNet5 for CS:

-

“Here we present two Pl@ntNet citizen science initiatives used by conservation practitioners in Europe (France) and Africa (Kenya).”

- papers citing it are interesting: Bonnet: How citizen scientists contribute to monitor… - Google Scholar

- Pl@ntNet can be

- limited for subsets of plants

- limiting plants based on GPS coordinates

- made to train better certain species by manually adding good examples as done in the Lewa Conservatory in Kenya

-

- Assessing accuracy in citizen science-based plant phenology monitoring | SpringerLink <@fuccilloAssessingAccuracyCitizen2015 (2015) z>

- “Volunteers demonstrated greatest overall accuracy identifying unfolded leaves, ripe fruits, and open flowers.”

- Maybe we’ll want to compare the areas where people are better at than ML in our paper?

- Similar to the above, but detecting weeds: Assessing citizen science data quality: an invasive species case study <@crallAssessingCitizenScience2011 Assessing citizen science data quality (2011) z>

- Compare to the paper about detecting weeds with DL: <@chenPerformanceEvaluationDeep2021 (2021) z>

Centralized repositories of stuff

- GBIF (ofc)

- https://bien.nceas.ucsb.edu/bien/ more than 200k observations, and

- This:

- Georeferenced plant observations from herbarium, plot, and trait records;

- Plot inventories and surveys;

- Species geographic distribution maps;

- Plant traits;

- A species-level phylogeny for all plants in the New World;

- Cross-continent, continent, and country-level species lists.


- No names known to me in their Data contributors

Biodiversity

- Really nice paper: <@ortizReviewInteractionsBiodiversity2021 A review of the interactions between biodiversity, agriculture, climate change, and international trade (2021) z/d>

- TL;DR climate change is not the worst wrt biodiversity

Positioning / strategy

Main bits

- Plant classification as a method to monitor biodiversity in the context of citizen science

Why plant classification is hard

- A lot of cleanly labeled herbaria, few labeled pictures (esp. tropical), but transferring learned stuff from herbarium sheets to field photos is challenging:

- (e.g. strong colour variation and the transformation of 3D objects after pressing like fruits and flowers) <@waldchenMachineLearningImage2018 (2018) z>

- PlantCLEF2021 was entirely dedicated to using herbaria+photos, and there domain adaptations (joint representation space between herb+field) dramatically outperform the best classical CNN, esp. on the most difficult plants. <@goeau2021overview (2021) z> <@goeauAIbasedIdentificationPlant2021 (2021) z>

-

- Connected to the above: lab-based VS field-based investigations

- lab-based has strict protocols for acquisition, people with mobile phones don’t

- “Lab-based setting is often used by biologist that brings the specimen (e.g. insects or plants) to the lab for inspecting them, to identify them and mostly to archive them. In this setting, the image acquisition can be controlled and standardised. In contrast to field-based investigations, where images of the specimen are taken in-situ without a controllable capturing procedure and system. For field-based investigations, typically a mobile device or camera is used for image acquisition and the specimen is alive when taking the picture (Martineau et al., 2017).” <@waldchenMachineLearningImage2018 (2018) z>

- Phenology (growth stages / seasons -> flowers) make life harder

- Plants sometimes have strong phenology (like bright red flowers) that makes them more distinctive and easier to find (esp. here in detecting them in satellite pictures: <@pearseDeepLearningPhenology2021 (2021) z>, but there DL failed less without flowers than non-DL), but sometimes don’t

- And ofc. a plant with and without flowers looks like a totally different plant

- Related:

- Plant growth form has been the most helpful species metadata in PlantCLEF2021, but some plants at different stages of growth look like different plant stages.

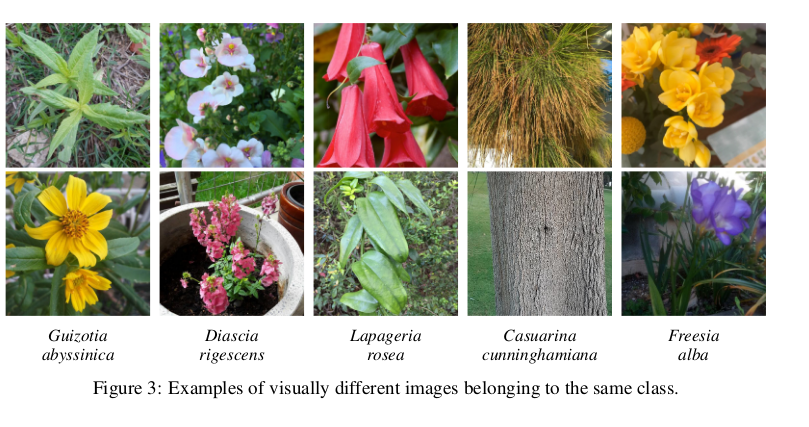

- Intra-species variability

- The Pl@ntNet-300k paper mentions

- epistemic (model) uncertainty (flowers etc.)

- aleatoric (data) uncertainty (small information given to make a decision)

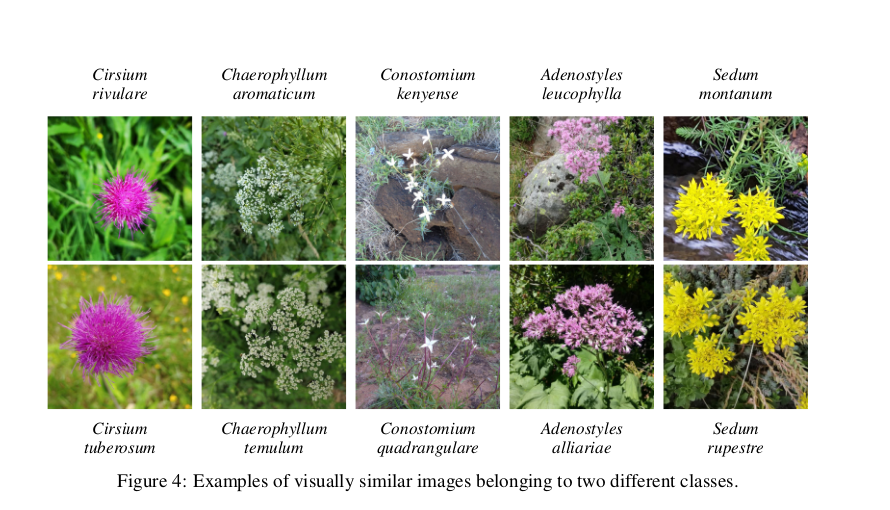

- Plants belonging to the same genus can be visually very similar to each other:

(same paper)

(same paper)


- long-tailed distribution, which: <@walkerHarnessingLargeScaleHerbarium2022 (2022) z/d>

- is representative of RL

- is a problem because DL is “data-hungry”

- some say there are a lot of mislabeled specimens <@goodwinWidespreadMistakenIdentity2015 (2015) z/d>

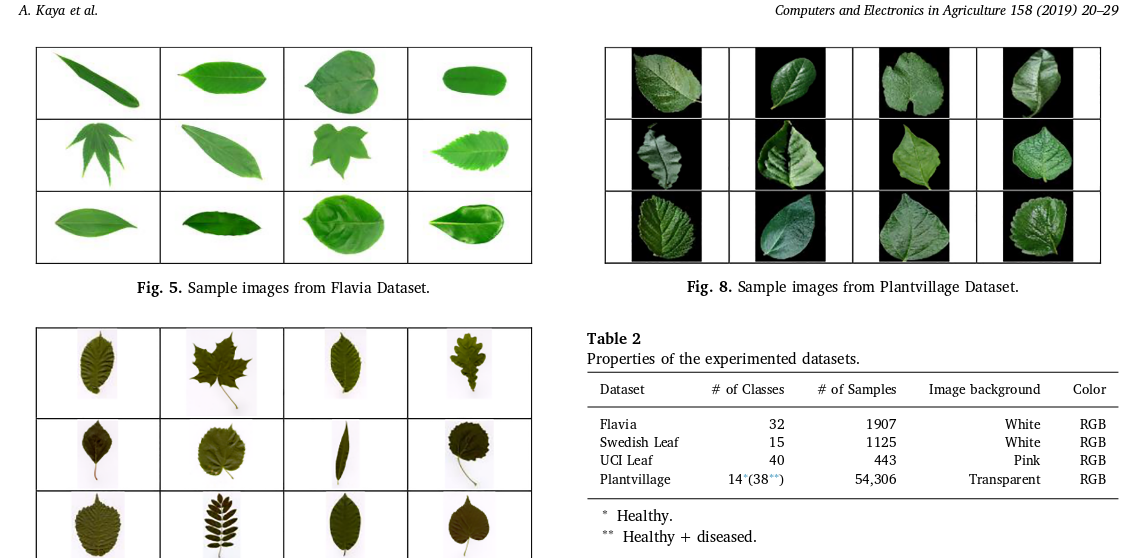

Datasets

EDIT separate post about this: 230529-1413 Plants datasets taxonomy

-

We can classify existing datasets in two types:

- Pl@ntNet / iNaturalist? / …: people with phones

- Clean standardized things like the Plant seedling classification dataset (<@giselssonPublicImageDatabase2017 (2017) z>), common weeds in Denmark dataset <@leminenmadsenOpenPlantPhenotype2020 (2020) z/d> etc.

- I’d put leaf datasets in this category too

- FloraIncognita is an interesting case:

-

FloraCapture requests contributors to photograph plants from at least five precisely defined perspectives

-

-

There are some special datasets, satellite and whatever, but especially:

- Leaf datasets exist and are used surprisingly often (if not exclusively) in overviews like the one we want to do:

- Flower datasets / “Natural flower classification”

- Seedlings etc. seem to be useful in industry (and they go hand-in-hand with weed-control)

- Fruit classification/segmentation and other very specific stuff we don’t really care about (<@mamatAdvancedTechnologyAgriculture2022 Advanced Technology in Agriculture Industry by Implementing Image Annotation Technique and Deep Learning Approach (2022) z/d> has an excellent overview of these)

-

Additional info present in datasets or useful:

- PlantCLEF2021 had additional metadata at the species level: growth form, habitat (forest, wetland, ..), and three others

-

- PlantCLEF2022: 3/5 taxonomic levels were used in various ways. Taxonomic loss is a thing (TODO - was this useful?)6

- Pl@ntNet and FloraIncognita apps (can) use GPS coordinates during the decision

- TODO Phenology / phenological stage: is this true to begin with?

Research questions similar to ours

Plant classification (a.k.a. species identification) on pictures

- Things like ecology and habitat loss, citizen science etc.

- Industry:

- Weed detection

Crop identification (satellites)

Crop stage identification / phenology (satellites)

Paper outline sketch

Introduction

- Tasks about plants are important

- Ecology: global warming etc., means different distribution of plant species, phenology stages changed, broken balances and stuff and one needs to track it; herbaria and digitization / labeling of herbaria

- Industry: crops stages identification, crops/weeds identification, fruit ripeness identification, etc. long list

- automatic methods have been used, starting from SVM/manual-feature-xxx, later - DL

- DL has been especially nice and improved stuff in all of these different sub-areas, show the examples that compare DL-vs-non-DL in the narrow fields

- The closest relevant thing is PlantCLEF competition that’s really really nice but \textbf{TODO what are we doing that PlantCLEF isn’t?}

- Goal of this paper is:

- Do a short overview of the tasks-connected-to-plants that exist and are usually tackled using AI magic

- Along the way: WHICH AI magic is usually used for the tasks that are formalized as image classification (TODO and object segmentation/detection?)

- Show that while

- http://ceur-ws.org/Vol-2936/paper-122.pdf / <@goeau2021overview (2021) z> ↩︎

- https://hal-lirmm.ccsd.cnrs.fr/lirmm-03793591/file/paper-153.pdf / <@goeau2022overview (2022) z> ↩︎

- IBM and SAP open up big data platforms for citizen science | Guardian sustainable business | The Guardian ↩︎

- Deep Learning with Taxonomic Loss for Plant Identification - PMC ↩︎

Zotero and Obsidian

EDIT: updated post 231010-2007 A new attempt at Zotero and Obsidian

Goal: Interact with Zotero from within Obsidian

Solution: “Citations”1 plugin for Obsidian, “Better Bibtex”2 plugin for Zotero!

- Creating a local self-updating bibtex export:

- In Zotero, File->Export, format is “Better Bibtex”

- this shows an additional checkmark for keeping it autoupdated, check it

- file is now at the resulting path

- Setting up Obsidian with Citations (in Citations plugin settings):

- set the path to the one above

- and the format to BibLaTeX (or it’ll fail with a generic error)

- Through the Palette run “Refresh citation database” (and do it every time something changes)

- Operation

- Search in palette for “Citations”

- Pandoc format citations3 are the default, but can be changed (almost anything can be changed!)

Neat bits:

-

There’s a configurable “Citations: Insert Markdown Citation” thing!

- My current template:

    <_`@{{citekey}}` {{titleShort}} ({{year}}) [z]({{zoteroSelectURI}})/[d](https://doi.org/{{DOI}})_>

- Legal fields:

    - {{citekey}}
    - {{abstract}}
    - {{authorString}}
    - {{containerTitle}}
    - {{DOI}}
    - {{eprint}}
    - {{eprinttype}}
    - {{eventPlace}}
    - {{page}}
    - {{publisher}}
    - {{publisherPlace}}
    - {{title}}
    - {{titleShort}}
    - {{URL}}
    - {{year}}
    - {{zoteroSelectURI}}

-

hans/obsidian-citation-plugin: Obsidian plugin which integrates your academic reference manager with the Obsidian editor. Search your references from within Obsidian and automatically create and reference literature notes for papers and books. ↩︎

-

retorquere/zotero-better-bibtex: Make Zotero effective for us LaTeX holdouts ↩︎
