serhii.net

In the middle of the desert you can say anything you want


Day 2011 (04 Jul 2024)

Dia is dead, alternatives

I've used Dia (Apps/Dia - GNOME Wiki!) all my life and love it, but the news on its wiki page is:

News! 2011-Dec-18: Version 0.97.2 has been released.

It depends on Python 2.7 and is very problematic to install now. I'll miss it.

I hear https://draw.io is a good alternative.

I’ll add a list of options here next time I need this.

(For older Dia files I may have to edit later: Dia could be installed on a Windows machine and used to export a broken .SVG, which I could then edit in Inkscape.)

Cropping PDF files with LaTeX and Inkscape

Context: in a paper, vector PDF graphics are much better than raster ones like PNG, because of antialiasing (230628-1313 Saving plots matplotlib seaborn plotly PDF). Assume you need to crop one: taking a PNG screenshot won't cut it, since that would defeat the entire purpose.

LaTeX

TL;DR: in \includegraphics, use trim=left bottom right top (here in cm), from positioning - How can I crop included PDF documents? - TeX - LaTeX Stack Exchange:

    \includegraphics[clip, trim=0.5cm 11cm 0.5cm 11cm, width=1.00\textwidth]{PDFFILE.pdf}

BUT! If I'm ever in this situation, Inkscape can really nicely open a PDF and export only the selected things, also as PDF.
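The trim order (left bottom right top) is easy to mix up. As a sanity check, here is a minimal Python sketch with a hypothetical trim_option helper that computes the four values from the rectangle you want to keep. It assumes all coordinates are in cm, measured from the page's bottom-left corner. For an A4 page (21 x 29.7 cm), keeping the band from 0.5 to 20.5 cm horizontally and 11 to 18.7 cm vertically reproduces the values from the example above.

```python
def trim_option(page_w, page_h, keep_x0, keep_y0, keep_x1, keep_y1):
    """Build the trim=... option that keeps one rectangle of a PDF page.

    All values are in cm, measured from the bottom-left corner of the page.
    (keep_x0, keep_y0) is the lower-left and (keep_x1, keep_y1) the
    upper-right corner of the region to keep.
    """
    if not (0 <= keep_x0 < keep_x1 <= page_w and 0 <= keep_y0 < keep_y1 <= page_h):
        raise ValueError("the region to keep must lie inside the page")
    left = keep_x0              # cut from the left edge
    bottom = keep_y0            # cut from the bottom edge
    right = page_w - keep_x1    # cut from the right edge
    top = page_h - keep_y1      # cut from the top edge
    # LaTeX order is left bottom right top; :g rounds away floating-point noise
    return "trim={:g}cm {:g}cm {:g}cm {:g}cm".format(left, bottom, right, top)


# A4 page, keep a horizontal band in the middle of the page
print(trim_option(21, 29.7, 0.5, 11, 20.5, 18.7))
```

Keep clip in the option list as well: trim alone only shrinks the bounding box, and the trimmed parts can still be drawn over neighbouring content.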

Inkscape (much better)

- When importing, choose "Replace text with closest available font"
  - e.g. OpenSans gets matched to Open Sans, and it usually works out
  - the full list of fonts is shown in the import window
- Select the part I want to crop
  - or create a shape over it and select that shape (or similar)
- Export only the selection as PDF; play with the export settings if needed, but for me the defaults worked this time

Either way, test the results by downloading the PDF from Overleaf and opening it locally, because antialiasing of imported vector pictures can look broken in the preview window.


Day 2009 (02 Jul 2024)

Ways to add CSS to a Quarto reveal presentation

Usual:

    format:
      revealjs:
        logo: logo.png
        theme: [default, custom.scss]

Adding it after the theme CSS, so no !important is needed [1]:

    format:
      revealjs:
        logo: logo.png
        header-includes: |
          <link href="custom.css" rel="stylesheet">

Inline, w/o declaring classes [2]:

    Some [red words]{style="color:#cc0000"}

or:

    ::: {style="font-size: 1.5em; text-align: center"}
    styling an entire div
    :::

- Main Quarto Reveal SCSS: quarto-cli/src/resources/formats/revealjs/reveal/css/reveal.scss at 303c4bc9f52ea0b0c36c5eb5dfbade9cece2b100 · quarto-dev/quarto-cli

- Quarto footer css: quarto-cli/src/resources/formats/revealjs/plugins/support/footer.css at main · quarto-dev/quarto-cli

[1] Insert custom css into revealjs presentation · Issue #746 · quarto-dev/quarto-cli

[2] Excellent list of bits: Meghan Hall

Downgrading AUR packages to an older version in Arch using the yay cache and pacman pinning

For yay, the cache is in

    $HOME/.cache/yay/

If the package is there, then:

    sudo pacman -U ./quarto-cli-1.4.555-1-x86_64.pkg.tar.zst

Then pin the package in /etc/pacman.conf:

    # Pacman won't upgrade packages listed in IgnorePkg and members of IgnoreGroup
    IgnorePkg = quarto-cli
    #IgnoreGroup =

Then yay -Syu will ignore it as well:

    :: Synchronizing package databases...
     endeavouros is up to date
     core is up to date
     extra is up to date
     multilib is up to date
    :: Searching AUR for updates...
     -> quarto-cli: ignoring package upgrade (1.4.555-1 => 1.5.52-1)
    :: Searching databases for updates...
     there is nothing to do

Refs: How do you downgrade an AUR package? : r/archlinux
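To see which versions are still cached before running pacman -U, a small Python sketch can split the package filenames. It assumes yay keeps built packages in a per-package folder such as ~/.cache/yay/quarto-cli/, and that files follow the usual name-pkgver-pkgrel-arch.pkg.tar.zst pattern; parse_pkg_filename and cached_versions are hypothetical helpers, not part of yay.

```python
from pathlib import Path

PKG_SUFFIXES = (".pkg.tar.zst", ".pkg.tar.xz")


def parse_pkg_filename(filename):
    """Split 'name-pkgver-pkgrel-arch.pkg.tar.zst' into its four parts.

    Package names can contain hyphens themselves (quarto-cli),
    so the split is done from the right.
    """
    for suffix in PKG_SUFFIXES:
        if filename.endswith(suffix):
            stem = filename[: -len(suffix)]
            break
    else:
        raise ValueError(f"not a package file: {filename}")
    parts = stem.rsplit("-", 3)
    if len(parts) != 4:
        raise ValueError(f"unexpected package file name: {filename}")
    name, pkgver, pkgrel, arch = parts
    return name, pkgver, pkgrel, arch


def cached_versions(pkgname, cache_root=None):
    """List (version-release, path) pairs for built packages in the yay cache.

    Results are sorted by file name, not by version.
    """
    if cache_root is None:
        cache_root = Path.home() / ".cache" / "yay"  # assumed default location
    found = []
    for path in sorted((Path(cache_root) / pkgname).glob("*.pkg.tar.*")):
        try:
            name, pkgver, pkgrel, _arch = parse_pkg_filename(path.name)
        except ValueError:
            continue  # e.g. .sig signature files
        if name == pkgname:
            found.append((f"{pkgver}-{pkgrel}", path))
    return found


print(parse_pkg_filename("quarto-cli-1.4.555-1-x86_64.pkg.tar.zst"))
```

The version-release string printed by cached_versions is the same form that yay shows in its "ignoring package upgrade" line, which makes it easy to compare.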

For non-AUR packages, there's the downgrade command: archlinux-downgrade/downgrade: Downgrade packages in Arch Linux

More quarto reveal presentation notes for lecture slides

References

- Refs:
  - Previously:
    - 240424-1923 Presentations with Quarto and Reveal.js has my list of tricks
  - Code

Misc

Better preview at a specific port, handy for restarting after editing CSS:

    quarto preview slides.qmd --port 4444 --no-browser

Similar to the Quarto project frontmatter:

    preview:
      port: 4444
      browser: false

Supported by default

In frontmatter:

- logo is in the bottom-right, + footer-logo-link
- footer for all slides
  - overwrite with a div of class footer


- Likely relevant for me: Numbering revealjs options for dynamic bits (shift-heading-level-by, number-offset, number-sections, number-depth); will touch if I need this.

Headers

- Extension: shafayetShafee/reveal-header: A Quarto filter extension that helps to add header text and header logo in all pages of RevealJs slide2

- Has absolutely awesome documentation!

In front matter:

- header: one header text for the whole presentation
- title-as-header, subtitle-as-header: if true, place the presentation frontmatter's title/subtitle as the header if one isn't provided (overwriting the header value)
  - use-case: I want the same repeating text everywhere w/o specifying it every time
- hide-from-titleSlide: all/text/logo to hide it from the title slide
- sc-sb-title: if true, h1/h2 slide titles will appear in the slide header automatically when slide-level is 2 or 3

Divs with classes:

- .header: slide-specific header
  - Excellent example in its docs of dynamic per-(sub-)section headers.

Left/right/center blocks of text in header

- If one uses section/subsection titles, they go in the left third and right third of the header, with the normal header text in the middle. This would be neat to have in general, w/o the section/subsection titles.
  - sc-title, on the left, is the section title
  - sb-title, on the right, is the subsection title
- How do I add arbitrary text there? And what would be a good interface for it?

The right way — extension code

- I could look at the extension, maybe fork it, and find a way to put text in these divs
- Relevant code:
  - This JS populates them in each slide: reveal-header/_extensions/reveal-header/resources/js/sc_sb_title.js at main · shafayetShafee/reveal-header
  - add_header js: reveal-header/_extensions/reveal-header/resources/js/add_header.js at main · shafayetShafee/reveal-header
  - Main lua: reveal-header/_extensions/reveal-header/reveal-header.lua at main · shafayetShafee/reveal-header

Ugly CSS hack

- (Learn sass in Y Minutes)

- CSS for the header bits: reveal-header/_extensions/reveal-header/resources/css/add_header.css at main · shafayetShafee/reveal-header

Since

.s[c|b]-titleis present always, text added that way will be present on the title slide regardless of settings. … and — adding text to a presentation through CSS is, well, ...reveal-header .sc-title { background-color: red; &::before { content: 'sc-title header content'; } }Slightly better ugly hack: main header text split in three, with two aligned l/r correspondingly.

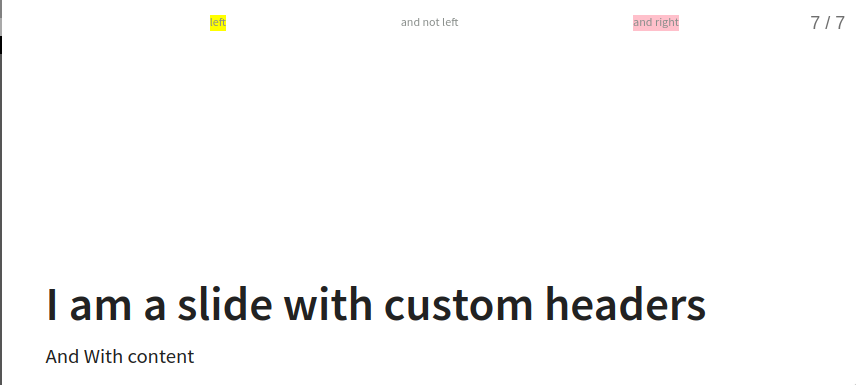

.header-right { // text-align: left; float: right; background-color: pink; display: inline-block; } .header-left { // text-align: left; float: left; background-color: yellow; display: inline-block; }::: header [left]{.header-left} and not left [and right]{.header-right} :::Result:

Extended ugly SCSS hack

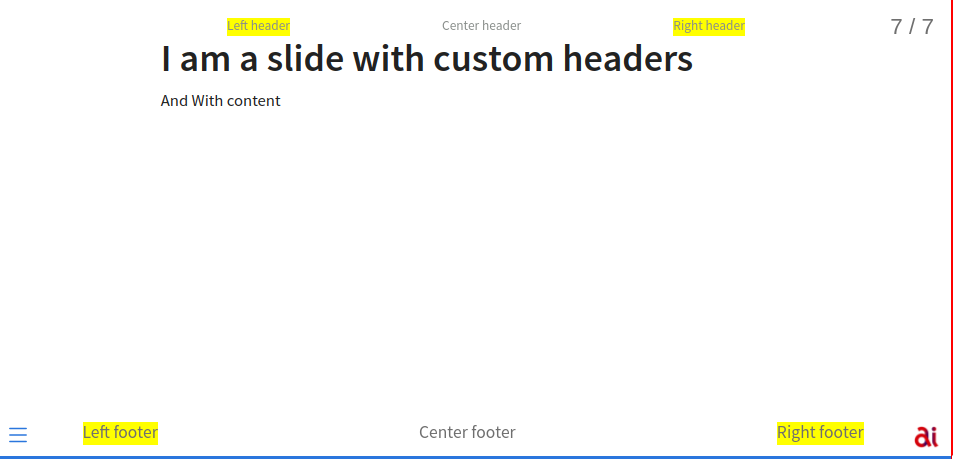

Improved the above to support both footer and headers

I have a hammer and everything is a nail SCSS can do mixins let’s use them// L/R margins of the footer — if logo is wider than this, it may overlap right footer text. // By default, logo max-height is 2.2em, width auto based on this. $footer-margin: 5em; // left or right column bits %hfcol { display: inline-block; } /* L/R columns in header */ .reveal .reveal-header .rightcol { @extend %hfcol; float: right; } .reveal .reveal-header .leftcol { @extend %hfcol; float: left; } /* L/R columns in footer */ .reveal .footer .leftcol { @extend %hfcol; float: left; margin-left: $footer-margin; } .reveal .footer .rightcol { @extend %hfcol; float: right; margin-right: $footer-margin; }Usage:

## I am a slide with custom headers And With content ::: footer [Left footer]{.leftcol} Center footer [Right footer]{.rightcol} ::: ::: header [Left header]{.leftcol} Center header [Right header]{.rightcol} :::Frontmatter usage works only for footer, likely

headerdoesn’t support markup.footer: "Center footer [right]{.rightcol} [left]{.leftcol}" # CHANGEMEResult:

PROBLEMS:

- asymmetrical if only one of the two is present. Likely fixable, but I don’t want to force any center div.

Footers through qmd cols

This works almost perfectly, including missing values:

    ::: footer
    ::: {.columns}
    :::: {.column width="20%"}
    left
    ::::
    :::: {.column width="50%"}
    Center footer
    ::::
    :::: {.column width="20%"}
    right
    ::::
    :::
    :::

It even works inside frontmatter as a multiline string (not that it's a good idea):

    footer: |
      ::: {.columns}
      :::: {.column width="20%"}
      left
      ::::
      :::: {.column width="50%"}
      FB5 – Informatik und Sprachen: Deep Learning (MDS)
      ::::
      :::: {.column width="20%"}
      ::::
      :::

This removes the margin, placing it exactly where a normal footer goes:

    .reveal .footer p {
      margin: 0 !important;
    }

(but headers don't work)

What is a good interface?

A filter that parses frontmatter and puts things in the correct places.

Misc


Day 2008 (01 Jul 2024)

NII MRI annotation tools

For later.

- SenteraLLC/ulabel: A browser-based tool for image annotation; used for annotation by KiTS23 | The 2023 Kidney Tumor Segmentation Challenge.

Downloading single directories from GitHub repo

Download GitHub directory: paste a URL to the directory, get a .zip of that directory only. Handy for downloading only parts of datasets.

MRI / medical imaging benchmark datasets

- The nnU-Net Revisited paper lists the following:
  - the ones it considers best: ACDC, KiTS, AMOS, "most suitable for benchmarking"
  - also: BTCV, LiTS, BraTS

Datasets

ACDC

- Deep Learning Techniques for Automatic MRI Cardiac Multi-Structures Segmentation and Diagnosis: Is the Problem Solved? | IEEE Journals & Magazine | IEEE Xplore
- cardiac diagnosis
- 150 (cardiac) MRI recordings/patients from 5 different diagnosis groups
- 3 classes: LV+RV heart cavities and myocardium
- 4D (3D+time)
- 100/50 patients train/test split
- nifti/.nii
- For each patient:
  - raw+ground truth data for two frames
  - 4D raw beating heart
  - age etc.
- links:
  - Website: ACDC Challenge
  - Dataset official download link: Human Heart Project
  - Their Python code to load/save images to nifti (nii) format and compute metrics: https://www.creatis.insa-lyon.fr/Challenge/acdc/code/metrics_acdc.py

- Dataset structure
  - nifti (.nii)
  - sample: https://humanheart-project.creatis.insa-lyon.fr/database/#collection/637218c173e9f0047faa00fb/folder/6372204873e9f0047faa160b
  - folders:
    - training/patient101/ containing:
      - Info.cfg
        - metadata about the patient
      - MANDATORY_CITATION.md
      - patient101_4d.nii.gz
        - 3D of the beating heart (+time), viewable animated in the brainbrowser viewer [1]
      - patient101_frame01_gt.nii.gz
        - ground truth data only for frame 01
      - patient101_frame01.nii.gz
        - raw data only for frame 01
      - patient101_frame14_gt.nii.gz
      - patient101_frame14.nii.gz

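To avoid typos in the file names when scripting over the dataset, a minimal Python sketch can build the expected paths for one patient from the naming shown above. The helper names are hypothetical, the frame numbers are passed in explicitly rather than guessed, and parse_info_cfg assumes Info.cfg consists of simple "Key: value" lines. Loading the volumes themselves needs a NIfTI reader such as nibabel, which this sketch leaves out.

```python
from pathlib import Path


def acdc_patient_files(root, patient, frames):
    """Expected paths inside one ACDC patient folder.

    Follows the naming listed above, e.g.
    training/patient101/patient101_frame01_gt.nii.gz.
    """
    pid = f"patient{patient:03d}"
    folder = Path(root) / pid
    files = {
        "info": folder / "Info.cfg",
        "4d": folder / f"{pid}_4d.nii.gz",
    }
    for frame in frames:
        tag = f"frame{frame:02d}"
        files[tag] = folder / f"{pid}_{tag}.nii.gz"            # raw frame
        files[f"{tag}_gt"] = folder / f"{pid}_{tag}_gt.nii.gz"  # ground truth
    return files


def parse_info_cfg(text):
    """Parse 'Key: value' lines (assumed Info.cfg layout) into a dict of strings."""
    info = {}
    for line in text.splitlines():
        if ":" in line:
            key, value = line.split(":", 1)
            info[key.strip()] = value.strip()
    return info


for name, path in acdc_patient_files("training", 101, [1, 14]).items():
    print(f"{name:>10}: {path}")
```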

KiTS-23

- existed in 2019, 2021 and 2023
- KiTS23 | The 2023 Kidney Tumor Segmentation Challenge [2]
  - The main proceedings of the conference/challenge: Kidney and Kidney Tumor Segmentation: MICCAI 2023 Challenge, KiTS 2023, Held … - Google Books
  - The latest publication is from the 2021 challenge
    - (Interesting annotation strategy: a professional places markers around the region, a non-professional turns it into a pretty segmentation shape)
- KITS2023 dataset repo: neheller/kits23: The official repository of the 2023 Kidney Tumor Segmentation Challenge (KiTS23)
  - they use postprocessing after annotations
  - Sample: kits23/dataset/case_00194 at main · neheller/kits23
  - annotating etc. was done online, and the webapp is still live: Annotate | KiTS23
    - They used the ulabel annotation tool: SenteraLLC/ulabel: A browser-based tool for image annotation
- Structure
  - raw images must be downloaded separately from their servers!
  - nifti/nii
  - 489 train set instances released [3], mostly similar to the files from older challenges
  - 3 classes: kidney, tumor, cyst
  - main metadata for all patients in kits23.json: kits23/dataset/kits23.json at main · neheller/kits23
  - directories are case_00000 to case_00588
    - segmentation.nii.gz is the ground truth as used in the challenge, after postprocessing; the one we need.
    - ./instances/ has the annotations: the raw things annotated by humans. [4]
      - they break brainviewer but not brainbrowser
      - [kidney|tumor|cyst]_instance-[1|2|..?]_annotation-[1|2|3].nii.gz
      - N instances (e.g. most people have two kidneys), each annotated by 3 different annotators, then merged into the main segmentation file.
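To check which instances and annotators exist in a case's instances/ folder, a minimal Python sketch can parse the file names with a regex built from the pattern above. The group_annotations helper and the example file names are hypothetical; with a real case one would pass in something like the names from pathlib's Path.iterdir().

```python
import re
from collections import defaultdict

# Naming scheme from the notes above:
# [kidney|tumor|cyst]_instance-N_annotation-M.nii.gz
INSTANCE_RE = re.compile(
    r"^(?P<cls>kidney|tumor|cyst)"
    r"_instance-(?P<instance>\d+)"
    r"_annotation-(?P<annotation>\d+)\.nii\.gz$"
)


def group_annotations(filenames):
    """Map (class, instance number) -> sorted annotation numbers.

    Files that don't match the pattern (e.g. segmentation.nii.gz) are skipped.
    """
    groups = defaultdict(list)
    for name in filenames:
        m = INSTANCE_RE.match(name)
        if m is None:
            continue
        groups[(m["cls"], int(m["instance"]))].append(int(m["annotation"]))
    return {key: sorted(nums) for key, nums in sorted(groups.items())}


example = [
    "kidney_instance-1_annotation-1.nii.gz",
    "kidney_instance-1_annotation-2.nii.gz",
    "kidney_instance-2_annotation-1.nii.gz",
    "tumor_instance-1_annotation-3.nii.gz",
    "segmentation.nii.gz",
]
print(group_annotations(example))
```

Given the three annotators mentioned above, a quick completeness check could then be all(len(v) == 3 for v in groups.values()).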

[3] test set unreleased: How to Obtain Test Data in the KiTS23 Dataset? - KiTS Challenge

[4] It’s important to note the distinction between what we call “annotations” and what we call “segmentations”. We use “annotations” to refer to the raw vectorized interactions that the user generates during an annotation session. A “segmentation,” on the other hand, refers to the rasterized output of a postprocessing script that uses “annotations” to define regions of interest.[^kits2023]


Day 1998 (21 Jun 2024)

PDF forms in Linux

TL;DR use Chromium

PDF, PS and DjVu - ArchWiki has a table, but in my tests it lies:

- zathura: explicitly no support
- evince: didn't work
- GNOME Document Viewer: didn't work

And for the Nth time, I end up remembering the Chrome/Chromium PDF viewer, which does this reliably.

Fish adventures in noglob, calculators and expressions

TL;DR: the easy fish version below works, but needs quotes when the expression is complex: cc 2+2 works as-is, but cc 'floor(2.3)' needs them.

I'm continuing to move my useful snippets from zsh to fish (240620-2109 Fish shell bits), and the most challenging one was the CLI Python calculator I really love and depend on, since its arguments contain parentheses, which fish treats as command substitution.

Basically: cc WHATEVER runs WHATEVER inside Python, and can do both easy math a la 2+2 and more casual statistics-y things like mean([2,33,28]). Before, in zsh, this was the magic function:

    cc() python3 -c "from math import *; from statistics import *; print($*);"
    alias cc='noglob cc'

Fish, easy version:

    function cc
        command python3 -c "from math import *; from statistics import *; print($argv);"
    end

It works for easy cc 2+2 bits, but as soon as functions and therefore parentheses get involved (cc floor(2.3)), it starts to error out:

    [I] sh@nebra~/t $ cc mean([2,4])
    fish: Unknown command: '[2,4]'
    in command substitution
    fish: Unknown command
    cc mean([2,4])
           ^~~~~~^
    [I] sh@nebra~/t $ cc mean\([2,4]\)
    >>> mean([2,4])
    3
    [I] sh@nebra~/t $

(But I REALLY don't want to type cc mean\([2, 3]\).)

In the zsh snippet, noglob meant roughly "take this literally": zsh skips filename globbing for that command, so the brackets and parentheses reached Python as-is. This is what fails in my fish solution.

Noglob in fish is fun:

- The fish language (fish-shell 3.7.0 documentation), on escaping characters
- Implement the noglob modifier · Issue #3504 · fish-shell/fish-shell:

  If you wish to use arguments that may be expanded somehow literally, quote them. echo ‘’ and echo “” both will print the literal.

- The fish language, on quotes:
  - single quotes = no expansion of any kind; \' for a literal ' inside single quotes
  - double quotes = variable expansion ($TERM) & command substitution ($(command)); \" for a literal " inside double quotes
  - inside each other, quotes have no special meaning
- Let's test:
  - echo (ls) = ls output, on one line
  - echo "$(ls)" = ls output, multiline
  - echo '(ls)' = (ls)
  - echo "(ls)" = (ls)


THEN:

- command python3 -c "from math import *; from statistics import *; print($argv);"
  - cc ceil\(2\): works
  - cc ceil(2): fails
- command python3 -c "from math import *; from statistics import *; print('$argv');"
  - literally prints the passed thing w/o Python evaluating it, with the same escaping rules

OK, can I use a variable then?

    set pyc $argv
    echo $pyc
    command python3 -c "from math import *; from statistics import *; print($pyc);"

Nope.

Bruteforcing the solution

(Mainly to learn fish loops; of course there are better ways to do this.)

    # list of simple, brackets, and parentheses + no, single, double quotes
    # no space between nums in brackets, python interpreter would add them. [2,3]: literal, [2, 3]: parsed by python
    set cmds \
        '2+2' \
        '\'2+2\'' \
        '"2+2"' \
        '[2,3]' \
        '\'[2,3]\'' \
        '"[2,3]"' \
        'floor(2.3)' \
        '\'floor(2.3)\'' \
        '"floor(2.3)"'

    function tcc
        set pyc $argv
        # command python3 -c "from math import *; from statistics import *; print" '(' "$pyc" ');'
        # command python3 -c "from math import *; from statistics import *; print($pyc);"
        command python3 -c "from math import *; from statistics import *; print($pyc);"
    end

    # loop through all test cases to find something that works for all of them
    for i in $cmds
        echo $i:
        echo "    $(tcc $i)"
    end

In the end, the test cases without additional literal quotes, run through the initial command, didn't error out, and we came full circle:

    set cmds \
        '2+2' \
        '[2,3]' \
        'floor(2.3)'

    # winner command!
    function tcc
        command python3 -c "from math import *; from statistics import *; print($argv);"
    end

    [I] sh@nebra~/t $ ./test_cc.sh
    2+2:
        4
    [2,3]:
        [2, 3]
    floor(2.3):
        2

- Double quotes in the python command mean only $pyc gets expanded
- $pyc in the working versions has no hard-coded quotes
- In the CLI, tcc floor(2.3) still fails, because typed like that fish parses it as a command substitution, not a string. In the file it was inside single quotes, as a string, so I can do the same in the CLI.

So simple and logical in the end.
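The whole problem lives in the shell, before Python ever runs. A minimal Python sketch makes that visible: the hypothetical run_like_cc helper runs the same one-liner through subprocess with an argument list, so no shell tokenizes or expands the expression, and all three test cases work without extra quotes. This is the same effect that single-quoting achieves in fish.

```python
import subprocess
import sys

PRELUDE = "from math import *; from statistics import *; "


def run_like_cc(expr):
    """Run the same python3 -c one-liner as cc, passing expr through untouched.

    The argument list goes straight to the OS (no shell in between), so the
    parentheses and brackets in expr are never parsed as shell syntax.
    """
    code = PRELUDE + f"print({expr});"
    result = subprocess.run(
        [sys.executable, "-c", code], capture_output=True, text=True, check=True
    )
    return result.stdout.strip()


for case in ["2+2", "[2,3]", "floor(2.3)"]:
    print(f"{case}: {run_like_cc(case)}")
```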

Final solution

    function cc
        echo ">>> $argv"
        command python3 -c "from math import *; from statistics import *; print($argv);"
    end

When using it, quotes are needed only for complex bits (parentheses, * etc.):

    [I] sh@nebra~/t $ cc 2+2
    >>> 2+2
    4
    [I] sh@nebra~/t $ cc [2,3,4]
    >>> [2,3,4]
    [2, 3, 4]
    # no quotes
    [I] sh@nebra~/t $ cc mean([2,3,4])
    fish: Unknown command: '[2,3,4]'
    in command substitution
    fish: Unknown command
    cc mean([2,3,4])
           ^~~~~~~~^
    # with quotes
    [I] sh@nebra~/t $ cc 'mean([2,3,4])'
    >>> mean([2,3,4])
    3

So I literally had to follow the advice from the first link I found and use single quotes when calling my initial command:

If you wish to use arguments that may be expanded somehow literally, quote them. echo ‘’ and echo “” both will print the literal.

Still, I learned a lot about fish in the process and honestly am loving it.
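For comparison, the Python half of cc can also live in a standalone script. This is a minimal sketch under the assumption that a hypothetical calc.py is acceptable instead of the python3 -c one-liner; it mirrors "from math import *; from statistics import *" by building a namespace from both modules. It does not remove the fish quoting requirement: fish still parses the arguments first, so python3 calc.py 'mean([2,3,4])' needs the same single quotes.

```python
import math
import statistics
import sys


def make_namespace():
    """Names an expression can use, like 'from math import *; from statistics import *'."""
    ns = {}
    for module in (math, statistics):  # later modules win on name clashes, as with star imports
        public = getattr(module, "__all__", None)
        if public is None:
            public = [n for n in dir(module) if not n.startswith("_")]
        for name in public:
            ns[name] = getattr(module, name)
    return ns


def calc(expr):
    """Evaluate a single expression such as 'mean([2,3,4])'.

    Uses eval(), so only feed it your own trusted input.
    """
    return eval(expr, make_namespace())


if __name__ == "__main__" and len(sys.argv) > 1:
    # Join the arguments with spaces, like "$argv" inside double quotes in fish
    expression = " ".join(sys.argv[1:])
    print(">>> " + expression)
    print(calc(expression))
```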