serhii.net

In the middle of the desert you can say anything you want


Day 1538 (18 Mar 2023)

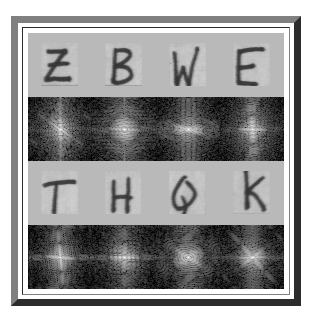

Detecting letters with Fourier transforms

TIL from my wife in the context of checkbox detection! letters detection fourier transform – Google Search

- Introduction to the Fourier Transform for Image Processing


- crowoy/Letter-Recognition: Fourier Feature Analysis - Classifying letters [S,T,V] based on features in the Fourier Space

TL;DR you can use Fourier transforms on letters, which then lead to distinguishable results! Bright lines in the spectrum are perpendicular to lines in the original letter, etc.
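A minimal sketch of the "bright lines perpendicular to the strokes" effect, assuming toy 32x32 images with a single bar each instead of real letters. The helper name `dominant_spectrum_axis` is made up for illustration and is not taken from the linked repo or tutorial.

```python
import numpy as np


def dominant_spectrum_axis(image):
    """Return which frequency axis ("horizontal" or "vertical") holds more energy."""
    # Magnitude spectrum with the zero frequency shifted to the centre.
    spectrum = np.abs(np.fft.fftshift(np.fft.fft2(image)))
    cy, cx = image.shape[0] // 2, image.shape[1] // 2
    spectrum[cy, cx] = 0.0  # ignore the DC term (overall brightness)
    row_energy = spectrum[cy, :].sum()  # a bright horizontal line in the spectrum
    col_energy = spectrum[:, cx].sum()  # a bright vertical line in the spectrum
    return "horizontal" if row_energy > col_energy else "vertical"


# Toy "letter strokes": one vertical and one horizontal bar on a 32x32 canvas.
vertical_stroke = np.zeros((32, 32))
vertical_stroke[:, 16] = 1.0
horizontal_stroke = np.zeros((32, 32))
horizontal_stroke[16, :] = 1.0

print(dominant_spectrum_axis(vertical_stroke))
print(dominant_spectrum_axis(horizontal_stroke))
```

A vertical stroke puts its energy on the horizontal frequency axis and vice versa, which is why letters made of differently oriented strokes can end up with different-looking spectra.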

My link wiki's rebirth into Hugo, final write-up

Good-bye old personal wiki, AKA Fiamma. Here are some screenshots which will soon become old and nostalgic:

I’ve also archived it, hopefully it won’t turn out to be a bad idea down the line (but that ship has sailed long ago…):

- archive.is: Fiamma (PKM and links wiki) - Fiamma

- web.archive.org: Fiamma (PKM and links wiki) - Fiamma

Will be using the Links blog from now on: https://serhii.net/links


Day 1537 (17 Mar 2023)

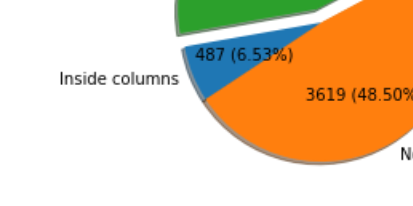

matplotlib labeling pie-charts

python - How to have actual values in matplotlib Pie Chart displayed - Stack Overflow:

    def absolute_value(val):
        a = numpy.round(val/100.*sizes.sum(), 0)
        return a

    plt.pie(sizes, labels=labels, colors=colors, autopct=absolute_value, shadow=True)

Can also be used to add more complex stuff inside the wedges (apparently the term for parts of the ‘pie’).

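I did this to show both the absolute count and the percentage inside each wedge:

    def absolute_value(val):
        # val is the wedge's percentage; convert it back to an absolute count
        a = int(np.round(val/100.*np.array(sizes).sum(), 0))
        res = f"{a} ({val:.2f}%)"
        return res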
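For reference, a self-contained sketch of the same idea that runs without a display. The `sizes` and `labels` values are invented, and the wrapper `make_autopct` is a hypothetical helper (not from the Stack Overflow answer) that avoids relying on a global `sizes`.

```python
import matplotlib

matplotlib.use("Agg")  # headless backend, so no GUI is needed
import matplotlib.pyplot as plt
import numpy as np

# Made-up example data.
sizes = [15, 30, 45, 10]
labels = ["a", "b", "c", "d"]


def make_autopct(values):
    """Build an autopct callback that shows 'count (percent%)' per wedge."""
    total = np.array(values).sum()

    def absolute_value(pct):
        # matplotlib passes the wedge's percentage; recover the absolute count.
        count = int(np.round(pct / 100.0 * total, 0))
        return f"{count} ({pct:.2f}%)"

    return absolute_value


fig, ax = plt.subplots()
# With autopct set, pie() also returns the text objects placed inside the wedges.
wedges, texts, autotexts = ax.pie(sizes, labels=labels, autopct=make_autopct(sizes))
```

Passing a callable as `autopct` is what makes this work: matplotlib calls it once per wedge with that wedge's percentage.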

Notes after writing a paper

Based on feedback on a paper I wrote:

- Finally learn to stop using “it’s” instead of “its” for everything, and learn possessives and stuff

- Don’t use “won’t”, “isn’t” and similar short forms when writing a scientific paper. “Will not”, “is not” etc.

- “Numbered” lists with “a), b), c)” exist and can be used alongside my usual bullet-point-style ones

Day 1533 (13 Mar 2023)

micro is a simple single-file CLI text editor

Stumbled upon zyedidia/micro: A modern and intuitive terminal-based text editor. A simple CLI-based text editor that wants to be the successor of nano. The static .tar.gz contains an executable that can be run directly. Played with it for 30 seconds and it’s really neat.

(Need something like vim for someone who doesn’t like vim, but wants to edit files on servers in an easy way in case nano isn’t installed and no sudo rights.)

json diff with jq, also: side-by-side output

Websites

There are online resources:

- JSON Diff - Online JSON Compare Diff Finder

- JSON Diff - The semantic JSON compare tool is a bit prettier

All similar to each other. I don’t find them intuitive, don’t like copypasting, and privacy/NDAs are important.

CLI

SO thread version:

    diff <(jq --sort-keys . A.json) <(jq --sort-keys . B.json)

Wrapped it into a function in my `.zshrc`:

    jdiff() {
        diff <(jq --sort-keys . "$1") <(jq --sort-keys . "$2")
    }

Side-by-side output

`vimdiff` is a thing and does this by default!

Otherwise `diff` has the parameter `-y`, and `--suppress-common-lines` is useful.

This led to `jdiff`’s brother `jdiffy`:

    jdiffy() {
        diff -y --suppress-common-lines <(jq --sort-keys . "$1") <(jq --sort-keys . "$2")
    }

Other

`git diff --no-index` allows using git diff without the thing needing to be inside a repo. Used it heavily previously for some of its fancier functions. Say hi to `gdiff`:

    gdiff() {
        git diff --no-index "$1" "$2"
    }
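If `jq` is not available, roughly the same key-order-insensitive comparison can be sketched with the Python standard library. This is only an approximation of `jdiff`, not a replacement for it: the function names are made up, and the inputs are passed as strings here rather than as file paths.

```python
import difflib
import json


def normalized_lines(json_text):
    """Parse JSON and re-serialize it with sorted keys, one value per line."""
    return json.dumps(json.loads(json_text), sort_keys=True, indent=2).splitlines()


def json_diff(a_text, b_text):
    """Unified diff of two JSON documents, ignoring key order."""
    return list(
        difflib.unified_diff(
            normalized_lines(a_text),
            normalized_lines(b_text),
            fromfile="A.json",
            tofile="B.json",
            lineterm="",
        )
    )


# Same content, different key order: no differences reported.
print(json_diff('{"a": 1, "b": 2}', '{"b": 2, "a": 1}'))

# A changed value shows up as a -/+ pair.
print("\n".join(json_diff('{"a": 1}', '{"a": 2}')))
```

Like `jq --sort-keys`, this sorts object keys at every nesting level but leaves array order alone, so reordered arrays still show up as differences.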

xlsxgrep for grepping inside xls files

This is neat: xlsxgrep · PyPI

Supports many grep options.


Day 1531 (11 Mar 2023)

Rancher and kubernetes, the very basics

- Rancher

  - most interesting thing to me in the interface is workers->pods

- Two ways to run stuff

  - via yaml

  - via kubernetes CLI / `kubectl`

- Via yaml:

  - change docker image and pod name

  - you can set a `command` in the yaml that ignores the Docker command and keeps the container running in interactive-ish mode, so you can execute stuff inside the running docker image.

        - name: podname
          image: "docker/image"
          command:
            - /bin/sh
            - -c
            - while true; do echo $(date) >> /tmp/out; sleep 1; done

- Kubernetes Workloads and Pods | Rancher Manager

  - Pods are groups of containers that share network and storage; usually it’s one container

- Assigning Pods to Nodes | Kubernetes:

  - `nodeName` is a simple direct way

        metadata:
          name: nginx
        spec:
          containers:
          - name: nginx
            image: nginx
          nodeName: kube-01

- You can set contexts, and then e.g. the same namespace will be applied to all your commands:

  - `k config set-context main --namespace=my-namespace`

  - (you can also shorten `kubectl` to `k` in the CLI)

- `k get pods -o wide -w` returns a detailed overview that is live updated (à la `watch`)
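To see how the yaml fragments above fit together, here is a hedged sketch that assembles one complete Pod manifest as a Python dict and prints it as JSON. The function `build_pod_manifest` is hypothetical; the pod name, image, and node name are the placeholder values from the notes above.

```python
import json


def build_pod_manifest(pod_name, image, node_name=None):
    """Assemble a single-container Pod manifest as a plain dict."""
    container = {
        "name": pod_name,
        "image": image,
        # Replaces the image's own command and keeps the container alive,
        # so that stuff can be executed inside it later.
        "command": [
            "/bin/sh",
            "-c",
            "while true; do echo $(date) >> /tmp/out; sleep 1; done",
        ],
    }
    spec = {"containers": [container]}
    if node_name is not None:
        spec["nodeName"] = node_name  # pin the pod to one specific node
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": pod_name},
        "spec": spec,
    }


manifest = build_pod_manifest("podname", "docker/image", node_name="kube-01")
print(json.dumps(manifest, indent=2))
```

`kubectl apply -f` accepts JSON as well as YAML, so the printed output could be saved to a file and applied as-is.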

Day 1530 (10 Mar 2023)

Things I learned at a hackathon^W onsite working session™

- don’t create branches / merge requests until I start working on the ticket

  - don’t do many at the same time either, it gets harder and rebasing is needed later

- delete branches after they get merged to main (automatically)

  - sometimes I didn’t, to play it safe, but I never needed them and have them locally regardless

- Most of my code is more complex and has more layers of abstraction than actually needed, and it gets worse with later fixes. Don’t aim for abstraction before abstraction is needed

- When solving complex-er merge conflicts, sometimes this helps: first leave all imports, merge the rest, and then clean up the remaining imports


Day 1527 (07 Mar 2023)

Cleaning printer printheads

TIL - when looking up how to clean printer heads - that some printers can do it automatically! Can be started either through the OS GUI or on the printer itself (if it has buttons and stuff).

Wikihow (lol), the first result in Google, gave me enough to learn that automatic cleaning is a thing: How to Clean Print Heads: Clogged & Dried Up Print Heads; How to Clean a Printhead for Better Ink Efficiency < Tech Takes - HP.com Singapore