serhii.net

In the middle of the desert you can say anything you want

-

Day 1342 (04 Sep 2022)

Setting up again Nextcloud, dav, freshRSS sync etc. for Android phone

New phone, need to set up again sync and friends to my VPS - I’ll document it this time.

This is part of the success story of “almost completely de-Google my life”, which is one of the better changes I ever made.

- Port Knocking

- Port Knocker | F-Droid - Free and Open Source Android App Repository

- Settings on server unchanged

- FreshRSS

- FreshRSS - Google Reader compatible API implementation

- Had remote access etc. enabled as described in FreshRSS

- EasyRSS on Android:

- Server: https://my.server/api/greader.php

- Username: the one I use for web login

- Password: the “API password” I set in profile -> API management -> API password

- Nextcloud

- Nextcloud | F-Droid - Free and Open Source Android App Repository

- No issues logging in and setting up synchronization

- Enabled backing up of my contacts to nextcloud (but not back prolly)

- DavX / dav / calendar / …

- Nextcloud

- Nextcloud — DAVx⁵

- Worked as advertised, the nextcloud app opened the DAVx5 app

- But actually I need Fastmail

- Fastmail — DAVx⁵

- Set up app password, logged in with my Fastmail username, and it worked


-

Day 1323 (16 Aug 2022)

Spacy custom tokenizer rules

Problem: the tokenizer leaves trailing dots attached to number tokens, which I don’t want. I also want it to split words separated by a dash. Also, `p.a.` at the end of a sentence always became `p.a..`: the end-of-sentence period was glued to the token.

    100,000,000.00, What-ever, p.a..

The default rules for various languages are fun to read:

German:

- spaCy/punctuation.py at master · explosion/spaCy

- spaCy/tokenizer_exceptions.py at master · explosion/spaCy

General for all languages: spaCy/char_classes.py at master · explosion/spaCy

`nlp.tokenizer.explain()` shows the rules matched when doing tokenization.

Docu about customizing tokenizers and adding special rules: Linguistic Features · spaCy Usage Documentation

Solution:

    import spacy
    from spacy.lang.char_classes import ALPHA
    from spacy.util import compile_infix_regex

    # `pipeline` is the already loaded spaCy Language object

    # Period at the end of line/token
    trailing_period = r"\.$"
    new_suffixes = [trailing_period]
    suffixes = list(pipeline.Defaults.suffixes) + new_suffixes
    suffix_regex = spacy.util.compile_suffix_regex(suffixes)

    # Add infix dash between words
    bindestrich_infix = r"(?<=[{a}])-(?=[{a}])".format(a=ALPHA)
    infixes = list(pipeline.Defaults.infixes)
    infixes.append(bindestrich_infix)
    infix_regex = compile_infix_regex(infixes)

    # Add special rule for "p.a." with trailing period
    # Usually two trailing periods become a suffix and single-token "p.a.."
    special_case = [{'ORTH': "p.a."}, {'ORTH': "."}]
    pipeline.tokenizer.add_special_case("p.a..", special_case)

    pipeline.tokenizer.suffix_search = suffix_regex.search
    pipeline.tokenizer.infix_finditer = infix_regex.finditer

The `p.a..` was interesting - `p.a.` was an explicit special case for German, but the two trailing dots got parsed as `SUFFIX` for some reason (ty `explain()`). Still no idea why, but given that special rules override suffixes I added a special rule specifically for that case, `p.a..` with two periods at the end, and it worked.

-

Day 1318 (11 Aug 2022)

Running modules with pdbpp in python

`python3 -m pdb your_script.py` is the usual way.

For modules it’s unsurprisingly intuitive:

    python3 -m pdb -m your.module.name

For commands etc.:

    python3 -m pdb -c 'until 320' -m your.module.name

Python fnmatch glob invalid expressions

Globs

fnmatch — Unix filename pattern matching — Python 3.10.6 documentation:

Similar to Unix shell ones but without special handling of path bits, identical otherwise, and much simpler than regex:

- `*` matches everything
- `?` matches any single character
- `[seq]` matches any character in seq
- `[!seq]` matches any character not in seq

Use case

I have a list of names, I allow the user to select one or more by providing either a single string or a glob and returning what matches.

First it was two parameters and “if both are passed X takes precedence, but if it doesn’t have matches then fallback is used …”.

Realized that a simple string is a glob matching itself - and I can use the same field for both simplifying A LOT. The users who don’t know about globs can just do strings and everything’s fine. Still unsure if it’s a good idea, but nice to have as option.

Then - OK, what happens if their string is an invalid glob? Will this lead to an “invalid regex” type of exception?

Well - couldn’t find info about this, in the source code globs are converted to regexes and I see no exceptions raised, and couldn’t provoke any errors myself.

Globs with only mismatched brackets etc. always match themselves, but the best one:

    >>> fnmatch.filter(['aa]ab','bb'],"aa]*a[bc]")
    ['aa]ab']

It ignores the mismatched bracket while correctly interpreting the matched ones!
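A small sketch for poking at this: `fnmatch.translate()` returns the regex a glob is converted to, and a pattern can be matched against itself. The sample patterns are made up for illustration; `fnmatchcase` is used only to keep the behaviour the same across platforms.

```python
import fnmatch
import re

# Made-up patterns: the first two have an unmatched bracket, the third is a well-formed glob.
patterns = ["aa]", "a[b", "a[bc]"]

for pattern in patterns:
    regex = fnmatch.translate(pattern)  # glob -> regex source string
    re.compile(regex)                   # compiles fine, no "invalid pattern" error
    matches_itself = fnmatch.fnmatchcase(pattern, pattern)
    print(f"{pattern!r} -> {regex!r}, matches itself: {matches_itself}")

# Patterns with only unmatched brackets behave like literal strings.
unmatched_ok = all(fnmatch.fnmatchcase(p, p) for p in patterns[:2])
```

The well-formed `a[bc]` is the one that does not match itself, since it is read as "a followed by b or c".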

So - I just have to care that a “name” doesn’t happen to be a correctly formulated glob, like `[this one]`.

- If it’s a string and has a match, return that match

- Anything else is a glob, warn about globs if glob doesn’t have a match either. (Maybe someone wants a name literally containing glob characters, name is not there but either they know about globs and know it’s invalid now, or they don’t know about them - since they seem to use glob special characters, now it’s a good time to find out)
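The two rules above could be sketched roughly like this. The function name `select_names`, the sample names and the warning text are all made up for illustration, not the actual implementation.

```python
import fnmatch
import logging

logger = logging.getLogger(__name__)


def select_names(names, pattern):
    """Select names by `pattern`: tried as a literal name first, then as a glob."""
    # A plain string that is literally one of the names: return exactly that.
    if pattern in names:
        return [pattern]
    # Anything else is interpreted as a glob.
    matches = fnmatch.filter(names, pattern)
    if not matches:
        logger.warning(
            "%r matched nothing; note that it is interpreted as a glob "
            "(*, ?, [seq], [!seq])",
            pattern,
        )
    return matches


# Hypothetical names; "dev[1]" happens to be a well-formed glob.
names = ["train", "test", "dev[1]"]
literal = select_names(names, "dev[1]")
globbed = select_names(names, "t*")
nothing = select_names(names, "nope")
```

Checking the literal match first is what lets a name like `dev[1]` be selected at all, since as a glob it would only match `dev1`.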

Pycharm shelf and changelists and 'Unshelve silently'

So - shelves! Just found out a really neat way to use them

“Unshelve silently” - never used it and never cared, just now - misclick and I did. It put the content of the shelf in a separate changelist named like the shelf, without changing my active changelist.

This is neat!

One of my main uses for both changelists and shelves is “I need to apply this patch locally but don’t want to commit that”, and this basically automates this behaviour.

-

Day 1317 (10 Aug 2022)

Huggingface utils ExplicitEnum python bits

In the Huggingface source found this bit:

    class ExplicitEnum(str, Enum):
        """
        Enum with more explicit error message for missing values.
        """

        @classmethod
        def _missing_(cls, value):
            raise ValueError(
                f"{value} is not a valid {cls.__name__}, please select one of {list(cls._value2member_map_.keys())}"
            )

… wow?

    (Pdb++) IntervalStrategy('epoch')
    <IntervalStrategy.EPOCH: 'epoch'>
    (Pdb++) IntervalStrategy('whatever')
    *** ValueError: whatever is not a valid IntervalStrategy, please select one of ['no', 'steps', 'epoch']

Was `MyEnum('something')` allowed the whole time? God I feel stupid.
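For reference, a minimal standalone sketch of the same idea using only the standard library. The `Split` enum and its members are hypothetical; calling the enum class looks a member up by value, indexing looks it up by name, and `_missing_` is the hook that runs when a value lookup fails.

```python
from enum import Enum


class Split(str, Enum):
    # Hypothetical enum, only for illustration.
    TRAIN = "train"
    TEST = "test"

    @classmethod
    def _missing_(cls, value):
        # Called when Split(value) finds no member with that value.
        valid = [member.value for member in cls]
        raise ValueError(f"{value!r} is not a valid {cls.__name__}, choose one of {valid}")


by_value = Split("train")  # lookup by value
by_name = Split["TRAIN"]   # lookup by name

try:
    Split("dev")
    error_message = None
except ValueError as err:
    error_message = str(err)
```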

-

Day 1316 (09 Aug 2022)

Python sorted sorting with multiple keys

So the function passed as `sorted()`’s `key=` argument can return a tuple; the tuple values are then compared in order, so they act as multiple sorting keys!
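A tiny sketch with made-up data: sort by a count in descending order, then by name in ascending order for ties. Negating the number is just one common way to flip the direction of a single key.

```python
# Hypothetical (name, label_count) pairs.
docs = [("b.txt", 3), ("a.txt", 3), ("c.txt", 10), ("d.txt", 1)]

# The key returns a tuple: first element is the primary key, second breaks ties.
ranked = sorted(docs, key=lambda doc: (-doc[1], doc[0]))
print(ranked)
```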

Pycharm pytest logging settings

Pytest logging in pycharm

In Pycharm running config, there are options to watch individual log files which is nice.

But the main bit - all my logging issues etc. were the fault of Pycharm’s Settings for pytest that added automatically a

-qflag. Removed that checkmark and now I get standard pytest output that I can modify!And now

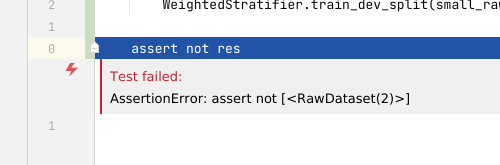

caplog1 works:def test_split_ds(caplog): caplog.set_level(logging.DEBUG, logger="anhaltai_bbk.data.train_dev_splitter.splitter") caplog.set_level(logging.DEBUG) # ...Dropping into debugger on uncaught exception + pytest plugin

So, previously I thought about this here: 220214-1756 python run pdb on exception

Anyway, solution was on pytest level, installing this package was the only thing needed: pytest-pycharm · PyPI

Installed it at the same time as this pycharm plugin, might’ve been either of the two:

pytest imp - IntelliJ IDEA & PyCharm Plugin | Marketplace / theY4Kman/pycharm-pytest-imp: PyCharm pytest improvements plugin

Anyway now life’s good:

Creating representative test sets

Thinking out loud and lab notebook style to help me solve a problem, in this installment - creating representative train/test splits.

Problem

Goal: create a test set that looks like the train set, having about the same distribution of labels.

In my case - classic NER, my training instances are documents whose tokens can be a number of different labels, non-overlapping, and I need to create a test split that’s similar to the train one. Again, splitting happens per-document.

Added complexity - in no case do I want tags of a type ending up only in train or only in test. Say, I have 100 docs and 2 ORGANIZATIONs inside them - my 20% test split should have at least one ORGANIZATION.

Which is why random selection doesn’t cut it - I’d end up doing Bogosort more often than not, because I have A LOT of such types.

Simply ignoring them and adding them manually might be a way. Or intuitively - starting with them first as they are the hardest and most likely to fail

Implementation details

My training instance is a document that can have say 1 PEOPLE, 3 ORGANIZATIONS, 0 PLACES.

For each dataset/split/document, I have a dictionary counting how many instances of each entity it has, then changed it to a ratio “out of the total number of labels”.

    {
        "O": 0.75,
        "B-ORGANIZATION": 0.125,
        "I-ORGANIZATION": 0,
        "B-NAME": 0,
        "I-NAME": 0,
    }

I need to create a test dataset with a distribution of these labels as close as possible to that of the train dataset. In both, say, 3 out of 4 labels should be `"O"`.

So - “which documents do I pick so that when their labels are summed up I get a specific distribution”, or close to it. So “pick the numbers from this list that sum up close to X”, except multidimensional.

Initial algo was “iterate by each training instance and put it in the pile it’ll improve the most”.
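A rough sketch of what such a greedy pass could look like, under my own assumptions: documents are `Counter`s of raw label counts (not ratios), each split has a target count per label derived from its share of the data, and “improve the most” means the largest reduction in squared distance to that target. The function name, the scoring and the sample documents are all made up for illustration.

```python
from collections import Counter


def greedy_split(docs, fractions):
    """docs: list of label->count Counters; fractions: split name -> share of the data."""
    total = Counter()
    for doc in docs:
        total.update(doc)
    # Target number of instances of every label for every split.
    target = {s: {label: f * n for label, n in total.items()} for s, f in fractions.items()}
    current = {s: Counter() for s in fractions}
    assignment = {s: [] for s in fractions}

    def improvement(split, doc):
        # How much the squared distance to the target shrinks if `doc` joins `split`.
        gain = 0.0
        for label, n in doc.items():
            missing = target[split][label] - current[split][label]
            gain += missing ** 2 - (missing - n) ** 2
        return gain

    for index, doc in enumerate(docs):
        best = max(fractions, key=lambda s: improvement(s, doc))
        current[best].update(doc)
        assignment[best].append(index)
    return assignment, current


# Hypothetical documents with token-level label counts.
docs = [
    Counter(O=30, ORG=1),
    Counter(O=50, PER=1),
    Counter(O=20, ORG=1, PER=3),
    Counter(O=40),
    Counter(O=10, ORG=1),
]
assignment, current = greedy_split(docs, {"train": 0.8, "test": 0.2})
print(assignment)
```

The result depends on the order in which the documents are visited, which is exactly the knob the later notes come back to.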

Started implementing something to do this in HuggingFace Datasets, and quickly realized that “add this one training instance to this HF Dataset” is not trivial to do, and iterating through examples and adding them to separate datasets is harder than expected.

“Reading the literature”

Generally we’re in the area of concepts like Subset sum problem / Optimization problem / Combinatorial optimization

Scikit-learn

More usefully, specifically RE datasets, How to Create a Representative Test Set | by Dimitris Poulopoulos | Towards Data Science mentioned sklearn.model_selection.StratifiedKFold.

Which led me to sklearn’s “model selection” functions that have a lot of functions doing what I need! Or almost

API Reference — scikit-learn 1.1.2 documentation

And the User Guide specifically deals with them: 3.1. Cross-validation: evaluating estimator performance — scikit-learn 1.1.2 documentation

Anyway - StratifiedKFold as implemented is “one training instance has one label”, which doesn’t work in my case.

My training instance is a document that has 1 PEOPLE, 3 ORGANIZATIONS, 0 PLACES.

Other places

Dataset Splitting Best Practices in Python - KDnuggets

Brainstorming

Main problem: I have multiple labels/`y`s to optimize for and can’t directly use anything that splits based on a single Y.

Can I hack something like sklearn.model_selection.StratifiedGroupKFold for this?

Can I read about how they do it and see if I can generalize it? (Open source FTW!) scikit-learn/_split.py at 17df37aee774720212c27dbc34e6f1feef0e2482 · scikit-learn/scikit-learn

Can I look at the functions they use to hack something together?

… why can’t I use the initial approach of adding and then measuring?

Where can I do this in the pipeline? In the beginning on document level, or maybe I can drop the requirement of doing it per-document and do it at the very end on split tokenized training instances? Which is easier?

Can I do a random sample and then add what’s missing?

Will going back to numbers and “in this train set I need 2 ORGANIZATIONS” help me reason about it differently than the current “20% of labels should be ORGANIZATION”?

Looking at vanilla StratifiedKFold

scikit-learn/_split.py at 17df37aee774720212c27dbc34e6f1feef0e2482 · scikit-learn/scikit-learn

They sort the labels and that way get +/- the number of items needed. Neat but quite hard for me to adapt to my use case.

OK, NEXT

Can I think of this as something like a sort with multiple keys?..

Can I use the rarity of a type as something like a class weight? Ha, that might work. Assign weights in such a way that each type is 100 and

This feels relevant. Stratified sampling - Wikipedia

Can I chunk them in small pieces and accumulate them based on the pieces, might be faster than by using examples?

THIS looked like something REALLY close to what I need, multiple category names for each example, but ended up being the usual stratified option I think:

This suggests to multiply the criteria and get a lot of bins - not what I need but I keep moving

Can I stratify by multiple characteristics at once?

I think “stratification of multilabel data” is close to what I need

Found some papers, yes this is the correct term I think

scikit-multilearn

YES! scikit-multilearn: Multi-Label Classification in Python — Multi-Label Classification for Python


scikit-multilearn: Multi-Label Classification in Python — Multi-Label Classification for Python:

In multi-label classification one can assign more than one label/class out of the available n_labels to a given object.

This is really interesting, still not EXACTLY what I need but a whole new avenue of stuff to look at

scikit-multilearn: Multi-Label Classification in Python — Multi-Label Classification for Python

The idea behind this stratification method is to assign label combinations to folds based on how much a given combination is desired by a given fold, as more and more assignments are made, some folds are filled and positive evidence is directed into other folds, in the end negative evidence is distributed based on a folds desirability of size.

Yep back to the first method!

They link this lecture explaining the algo: On the Stratification of Multi-Label Data - VideoLectures.NET

That video was basically what I needed

Less the video than the slides, didn’t watch the video and hope I won’t have to - the slides make it clear enough.

Yes, reframing that as “number of instances of this class that are still needed by this fold” was a better option. And here binary matrices nicely expand to weighted stratification if I have multiple examples of a class in a document. And my initial intuition of starting with the least-represented class first was correct

Basic algorithm:

- Get class with smallest number of instances in the dataset

- Get all training examples with that class and distribute them first

- Go to next class
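The three steps above could be sketched like this. It is a simplified reading of the slides, not the scikit-multilearn implementation: documents are `Counter`s of label counts, each split tracks how many instances of every label it still needs, and ties go to whichever split is listed first. All names and the sample data are hypothetical.

```python
from collections import Counter


def rarest_first_split(docs, fractions):
    """docs: list of label->count Counters; fractions: split name -> share of the data."""
    total = Counter()
    for doc in docs:
        total.update(doc)
    # Number of instances of each label that every split still needs.
    needed = {s: {label: f * n for label, n in total.items()} for s, f in fractions.items()}
    assignment = {s: [] for s in fractions}
    remaining = set(range(len(docs)))

    while remaining:
        # Label counts among documents that are not assigned yet.
        left = Counter()
        for i in remaining:
            left.update(docs[i])
        candidates = [label for label, n in left.items() if n > 0]
        if not candidates:
            # Documents without any labels: put them in the first split.
            first = next(iter(fractions))
            assignment[first].extend(sorted(remaining))
            break
        # Step 1: the class with the smallest number of remaining instances.
        rarest = min(candidates, key=lambda label: (left[label], label))
        # Step 2: distribute all documents containing that class.
        for i in sorted(i for i in remaining if docs[i][rarest] > 0):
            split = max(fractions, key=lambda s: needed[s][rarest])
            assignment[split].append(i)
            for label, n in docs[i].items():
                needed[split][label] -= n
            remaining.discard(i)
        # Step 3: the loop continues with the next-rarest class.
    return assignment


docs = [
    Counter(O=30, ORG=1), Counter(O=25, ORG=2), Counter(O=40, PER=1),
    Counter(O=35, PER=2), Counter(O=20, PER=1), Counter(O=50),
    Counter(O=45, ORG=1, PER=1), Counter(O=30), Counter(O=30, PER=1),
    Counter(O=25, ORG=1),
]
assignment = rarest_first_split(docs, {"train": 0.8, "test": 0.2})
print(assignment)
```

Nothing here guarantees that a very rare class lands in every split: with only two instances and an 80/20 split, both can end up in train, so that requirement would need an explicit extra check.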

Not sure if I can use the source of the implementation: scikit-multilearn: Multi-Label Classification in Python — Multi-Label Classification for Python

I don’t have a good intuition of what they mean by “order”, for now “try to keep labels that hang out together in the same fold”? Can I hack it to

I still have the issue I tried to avoid with needing to add examples to a fold/`Dataset`, but that’s not the problem here.

Generally - is this better than my initial approach?

What happens if I don’t modify my initial approach, just the order in which I give it the training examples?

Can I find any other source code for these things? Ones easier to adapt?

Anyway

I’ll implement the algo myself based on the presentation and video according to my understanding.

The main result of this session was finding more related terminology and a good explanation of the algo I’ll be implementing, with my changes.

I’m surprised I haven’t found anything NER-specific about creating representative test sets based on the distribution of multiple labels in the test instances. Might become a blog post or something sometime.

-

Day 1315 (08 Aug 2022)

Huggingface datasets set_transform

Previously: 220601-1707 Huggingface HF Custom NER with BERT

So you have the various mapping functions, but there’s a `set_transform` which executes a transform when `__getitem__()` is called.